Abhinav Anand

Analysis of Long Range Dependency Understanding in State Space Models

Jan 19, 2026

Abstract:Although state-space models (SSMs) have demonstrated strong performance on long-sequence benchmarks, most research has emphasized predictive accuracy rather than interpretability. In this work, we present the first systematic kernel interpretability study of the diagonalized state-space model (S4D) trained on a real-world task (vulnerability detection in source code). Through time- and frequency-domain analysis of the S4D kernel, we show that the long-range modeling capability of S4D varies significantly across model architectures, affecting model performance. For instance, we show that, depending on the architecture, the S4D kernel can behave as a low-pass, band-pass, or high-pass filter. The insights from our analysis can guide future work in designing better S4D-based models.
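The kind of frequency-domain kernel inspection described in this abstract can be illustrated with a small sketch. It is not the paper's code: it assumes the common S4D formulation with a diagonal state matrix and zero-order-hold discretization, and the names `s4d_kernel`, `magnitude_spectrum`, and `classify_filter`, as well as the peak-position heuristic with its 1/3 and 2/3 thresholds, are hypothetical choices made for illustration.

```python
import cmath
import math


def s4d_kernel(A, B, C, dt, length):
    """Real part of K[l] = sum_n C_n * B_bar_n * A_bar_n**l.

    Assumes a diagonal state matrix A (list of complex numbers) and
    zero-order-hold discretization with step dt.
    """
    kernel = []
    for l in range(length):
        total = 0j
        for a, b, c in zip(A, B, C):
            a_bar = cmath.exp(dt * a)      # discretized state entry
            b_bar = (a_bar - 1) / a * b    # discretized input entry
            total += c * b_bar * a_bar ** l
        kernel.append(total.real)
    return kernel


def magnitude_spectrum(kernel):
    """Naive DFT magnitudes for bins 0..L/2 (DC up to Nyquist)."""
    n = len(kernel)
    mags = []
    for k in range(n // 2 + 1):
        s = sum(x * cmath.exp(-2j * math.pi * k * l / n)
                for l, x in enumerate(kernel))
        mags.append(abs(s))
    return mags


def classify_filter(mags):
    """Hypothetical heuristic: label the kernel by where its spectral peak lies."""
    peak = max(range(len(mags)), key=mags.__getitem__)
    frac = peak / (len(mags) - 1)
    if frac < 1 / 3:
        return "low-pass"
    if frac > 2 / 3:
        return "high-pass"
    return "band-pass"


if __name__ == "__main__":
    # One state each; the imaginary part of A sets the oscillation frequency.
    for name, a in [("slow decay", complex(-0.05, 0.0)),
                    ("mid frequency", complex(-0.05, math.pi / 2)),
                    ("Nyquist", complex(-0.05, math.pi))]:
        k = s4d_kernel([a], [1.0], [1.0], dt=1.0, length=64)
        print(name, classify_filter(magnitude_spectrum(k)))
```

In this toy setting, the imaginary part of each diagonal entry controls where the kernel's spectral peak sits, while the real part controls how quickly the kernel decays in time, which is one way to read "long-range" behaviour off the kernel.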

BiGSCoder: State Space Model for Code Understanding

May 02, 2025

Abstract:We present BiGSCoder, a novel encoder-only bidirectional state-space model (SSM) featuring a gated architecture, pre-trained for code understanding on a code dataset using masked language modeling. Our work aims to systematically evaluate SSMs' capabilities in coding tasks compared to traditional transformer architectures; BiGSCoder is built for this purpose. Through comprehensive experiments across diverse pre-training configurations and code understanding benchmarks, we demonstrate that BiGSCoder outperforms transformer-based models, despite utilizing simpler pre-training strategies and much less training data. Our results indicate that BiGSCoder can serve as a more sample-efficient alternative to conventional transformer models. Furthermore, our study shows that SSMs perform better without positional embeddings and can effectively extrapolate to longer sequences during fine-tuning.

Integrating Symbolic Execution into the Fine-Tuning of Code-Generating LLMs

Apr 21, 2025

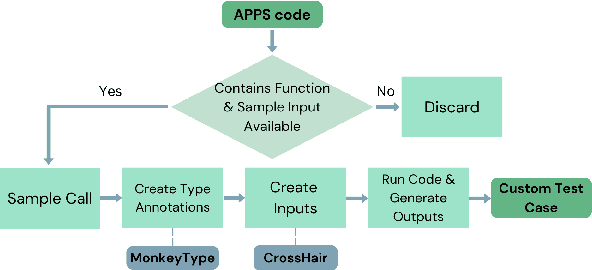

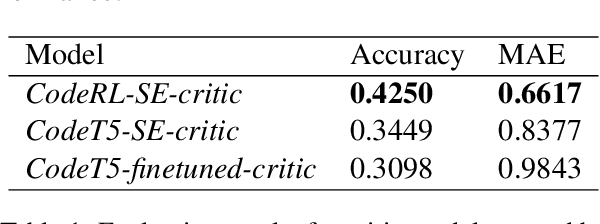

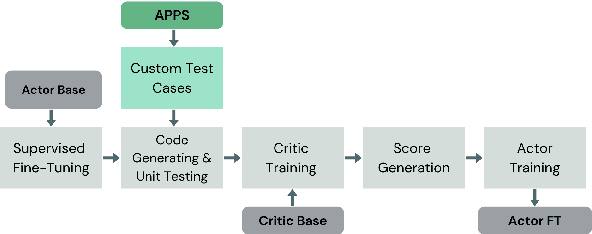

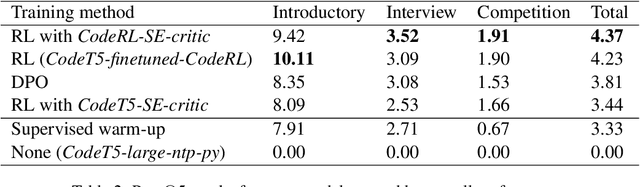

Abstract:Code-generating Large Language Models (LLMs) have become essential tools in modern software development, enhancing productivity and accelerating development. This paper aims to investigate the fine-tuning of code-generating LLMs using Reinforcement Learning and Direct Preference Optimization, further improving their performance. To achieve this, we enhance the training data for the reward model with the help of symbolic execution techniques, ensuring more comprehensive and objective data. With symbolic execution, we create a custom dataset that better captures the nuances in code evaluation. Our reward models, fine-tuned on this dataset, demonstrate significant improvements over the baseline, CodeRL, in estimating the quality of generated code. Our code-generating LLMs, trained with the help of reward model feedback, achieve similar results compared to the CodeRL benchmark.

A Critical Study of What Code-LLMs Learn

Jun 17, 2024

Abstract:Large Language Models trained on code corpora (code-LLMs) have demonstrated impressive performance in various coding assistance tasks. However, despite their increased size and training dataset, code-LLMs still have limitations, such as suggesting code with syntactic errors or variable misuse. Some studies argue that code-LLMs perform well on coding tasks because they use self-attention and hidden representations to encode relations among input tokens. However, previous works have not studied what code properties are not encoded by code-LLMs. In this paper, we conduct a fine-grained analysis of attention maps and hidden representations of code-LLMs. Our study indicates that code-LLMs only encode relations among specific subsets of input tokens. Specifically, by categorizing input tokens into syntactic tokens and identifiers, we found that models encode relations among syntactic tokens and among identifiers, but they fail to encode relations between syntactic tokens and identifiers. We also found that fine-tuned models encode these relations poorly compared to their pre-trained counterparts. Additionally, larger models with billions of parameters encode significantly less information about code than models with only a few hundred million parameters.

Hybrid ACO-CI Algorithm for Beam Design problems

Mar 29, 2023

Abstract:A range of complicated real-world problems have inspired the development of several optimization methods. Here, a novel hybrid version of the Ant Colony Optimization (ACO) method is developed using the sample space reduction technique of the Cohort Intelligence (CI) algorithm. The algorithm is developed, and its accuracy is tested by solving 35 standard benchmark test functions. Furthermore, the constrained version of the algorithm is used to solve two mechanical design problems involving stepped cantilever beams and I-section beams. The effectiveness of the proposed solution technique is evaluated relative to contemporary algorithmic approaches that are already in use. The results show that the proposed hybrid ACO-CI algorithm takes fewer iterations to produce the desired output, which means less computational time. For the minimization of the weight of the stepped cantilever beam and of the deflection in the I-section beam, the proposed hybrid ACO-CI algorithm yielded the best results when compared to other existing algorithms. The proposed work could be investigated for a variety of real-world applications spanning engineering, combinatorial, and health care problems.

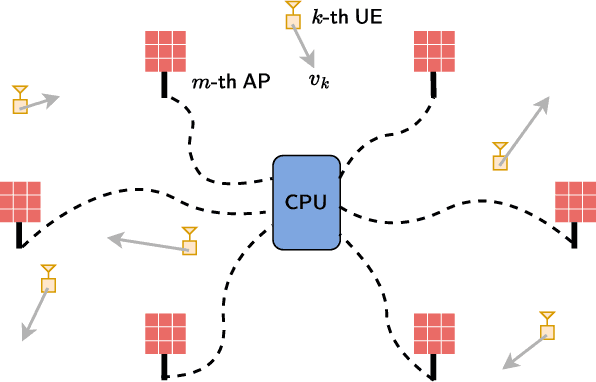

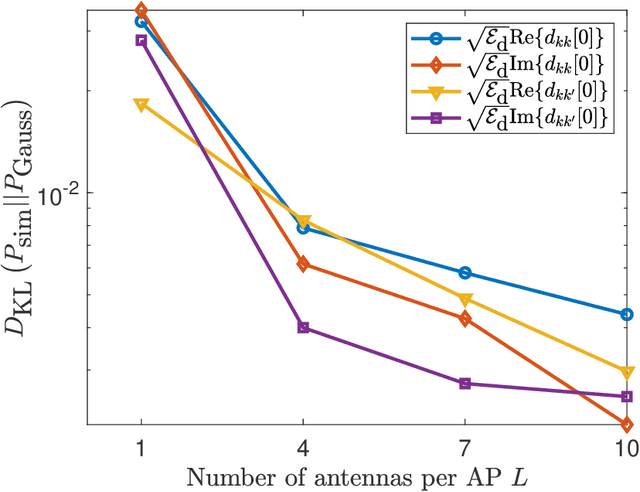

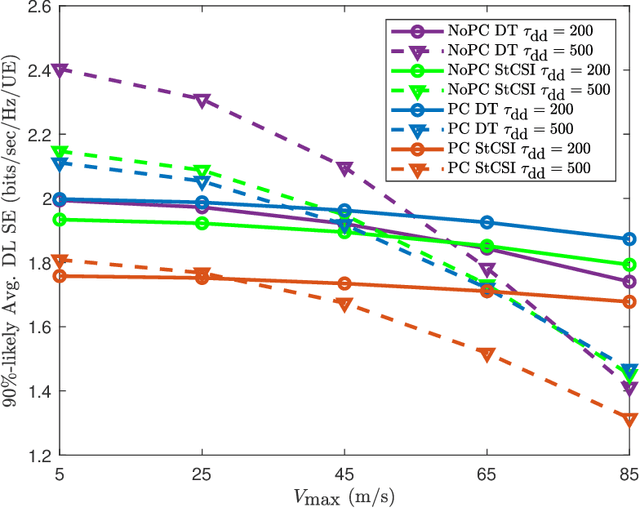

Impact of Mobility on Downlink Cell-Free Massive MIMO Systems

Sep 06, 2022

Abstract:In this paper, we analyze the achievable downlink spectral efficiency of cell-free massive multiple-input multiple-output (CF-mMIMO) systems, accounting for the effects of channel aging (caused by user mobility) and pilot contamination. We consider two cases, one where user equipments (UEs) rely on downlink pilots beamformed by the access points (APs) to estimate the downlink channel, and another where UEs utilize statistical channel state information (CSI) for data decoding. For comparison, we also consider cellular mMIMO and derive its achievable spectral efficiency with channel aging and pilot contamination in the above two cases. Our results show that, in CF-mMIMO, downlink training is preferable over statistical CSI when the length of the data sequence is chosen optimally to maximize the spectral efficiency. In cellular mMIMO, however, either one of the two schemes may be better depending on whether user fairness or sum spectral efficiency is prioritized. Furthermore, the CF-mMIMO system generally outperforms cellular mMIMO even after accounting for the effects of channel aging and pilot contamination. Through numerical results, we illustrate the effect of various system parameters, such as the maximum user velocity, uplink/downlink pilot lengths, data duration, and network densification, and provide interesting insights into the key differences between cell-free and cellular mMIMO systems.

Quantum compression with classically simulatable circuits

Jul 06, 2022

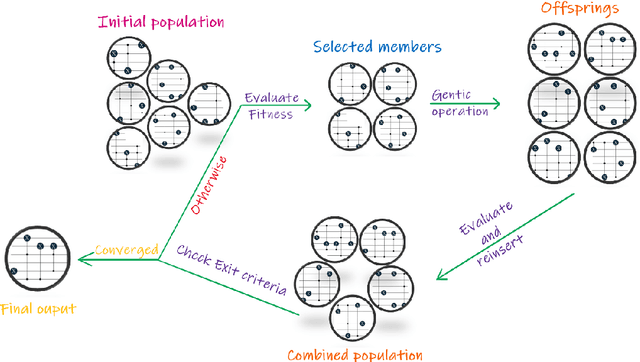

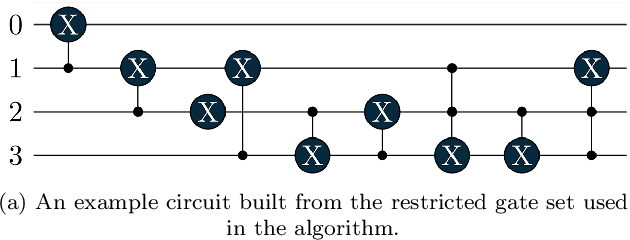

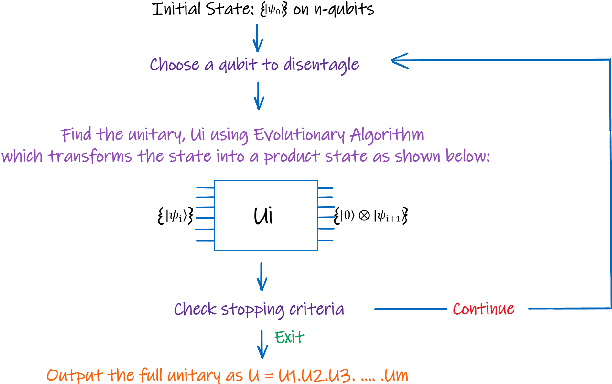

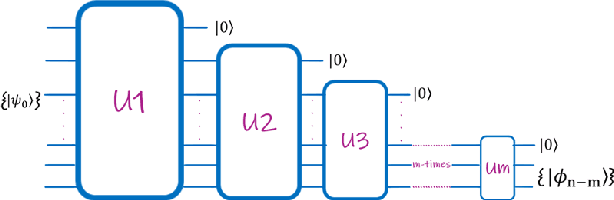

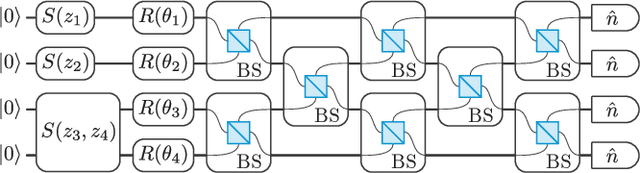

Abstract:As we continue to find applications where the currently available noisy devices exhibit an advantage over their classical counterparts, the efficient use of quantum resources is highly desirable. The notion of quantum autoencoders was proposed as a way for the compression of quantum information to reduce resource requirements. Here, we present a strategy to design quantum autoencoders using evolutionary algorithms for transforming quantum information into lower-dimensional representations. We successfully demonstrate the initial applications of the algorithm for compressing different families of quantum states. In particular, we point out that using a restricted gate set in the algorithm allows for efficient simulation of the generated circuits. This approach opens the possibility of using classical logic to find low representations of quantum data, using fewer computational resources.

Impact of Subcarrier Allocation and User Mobility on the Uplink Performance of Massive MIMO-OFDMA Systems

Aug 12, 2021

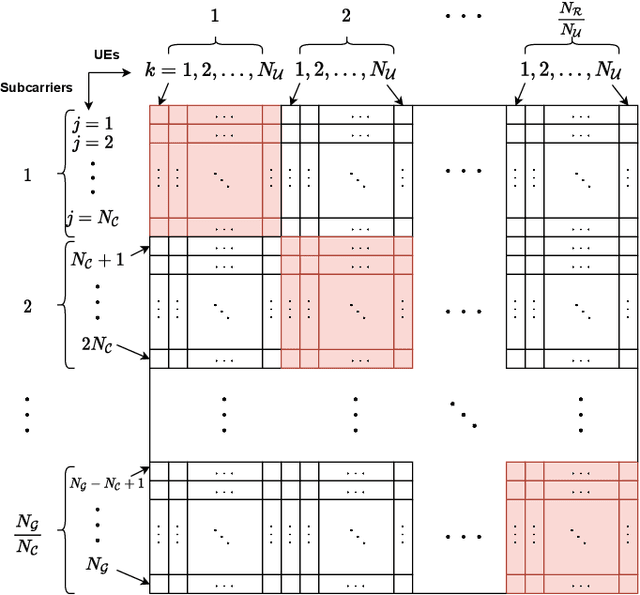

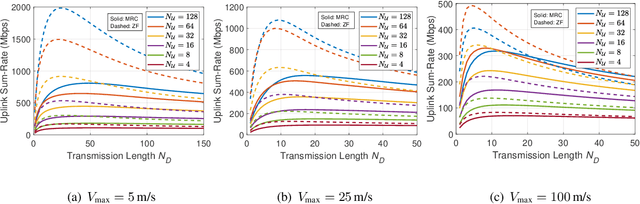

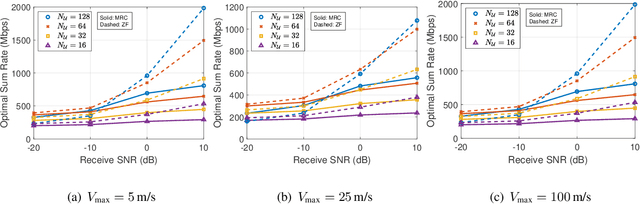

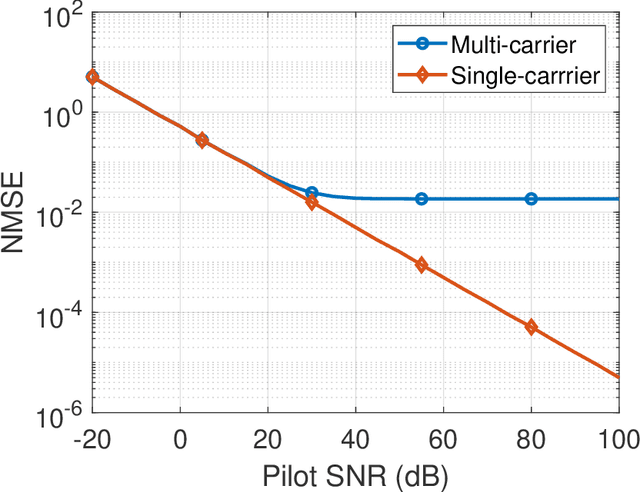

Abstract:This paper considers the uplink performance of a massive multiple-input multiple-output orthogonal frequency-division multiple access (MIMO-OFDMA) system with mobile users. Mobility brings two major problems to a MIMO-OFDMA system: inter carrier interference (ICI) and channel aging. In practice, it is common to allot multiple contiguous subcarriers to a user as well as schedule multiple users on each subcarrier. Motivated by this, we consider a general subcarrier allocation scheme and derive expressions for the ICI power, uplink signal to interference plus noise ratio and the achievable uplink sum-rate. We show that the system incurs a near-constant ICI power that depends linearly on the ratio of the number of users to the number of subcarriers employed, irrespective of how UEs distribute their power across the subcarriers. Further, we exploit the coherence bandwidth of the channel to reduce the length of the pilot sequences required for uplink channel estimation. We consider both zero-forcing processing and maximal ratio combining at the receiver and compare the respective sum-rate performances. In either case, the proposed subcarrier allocation scheme leads to significantly higher sum-rates compared to previous work, owing to the constant ICI as well as the reduced pilot overhead.

Noisy intermediate-scale quantum (NISQ) algorithms

Jan 21, 2021

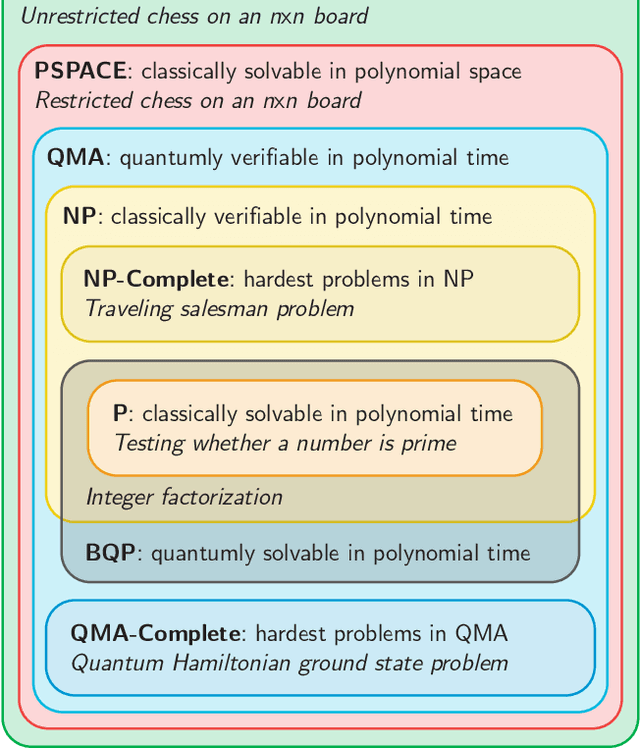

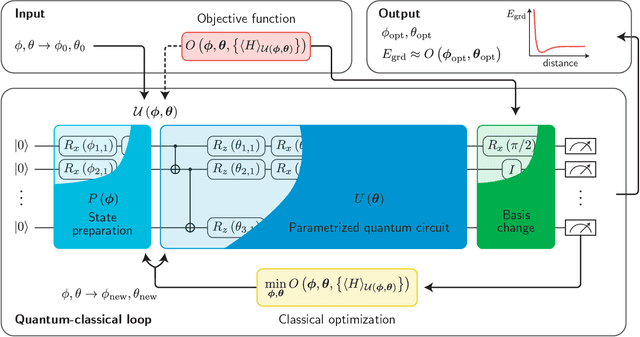

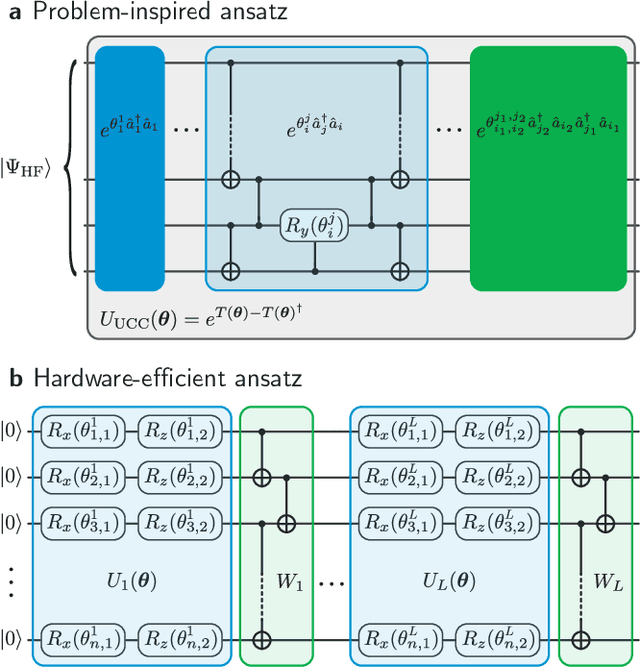

Abstract:A universal fault-tolerant quantum computer that can efficiently solve problems such as integer factorization and unstructured database search requires millions of qubits with low error rates and long coherence times. While the experimental advancement towards realizing such devices will potentially take decades of research, noisy intermediate-scale quantum (NISQ) computers already exist. These computers are composed of hundreds of noisy qubits, i.e., qubits that are not error-corrected, and therefore perform imperfect operations in a limited coherence time. In the search for quantum advantage with these devices, algorithms have been proposed for applications in various disciplines spanning physics, machine learning, quantum chemistry, and combinatorial optimization. The goal of such algorithms is to leverage the limited available resources to perform classically challenging tasks. In this review, we provide a thorough summary of NISQ computational paradigms and algorithms. We discuss the key structure of these algorithms, their limitations, and advantages. We additionally provide a comprehensive overview of various benchmarking and software tools useful for programming and testing NISQ devices.

Natural Evolutionary Strategies for Variational Quantum Computation

Nov 30, 2020

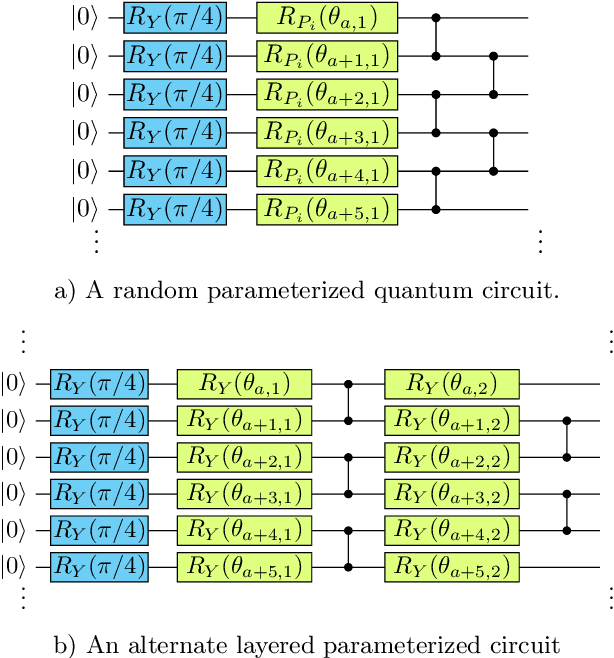

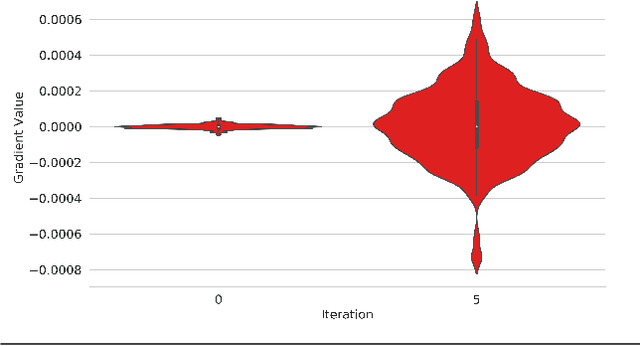

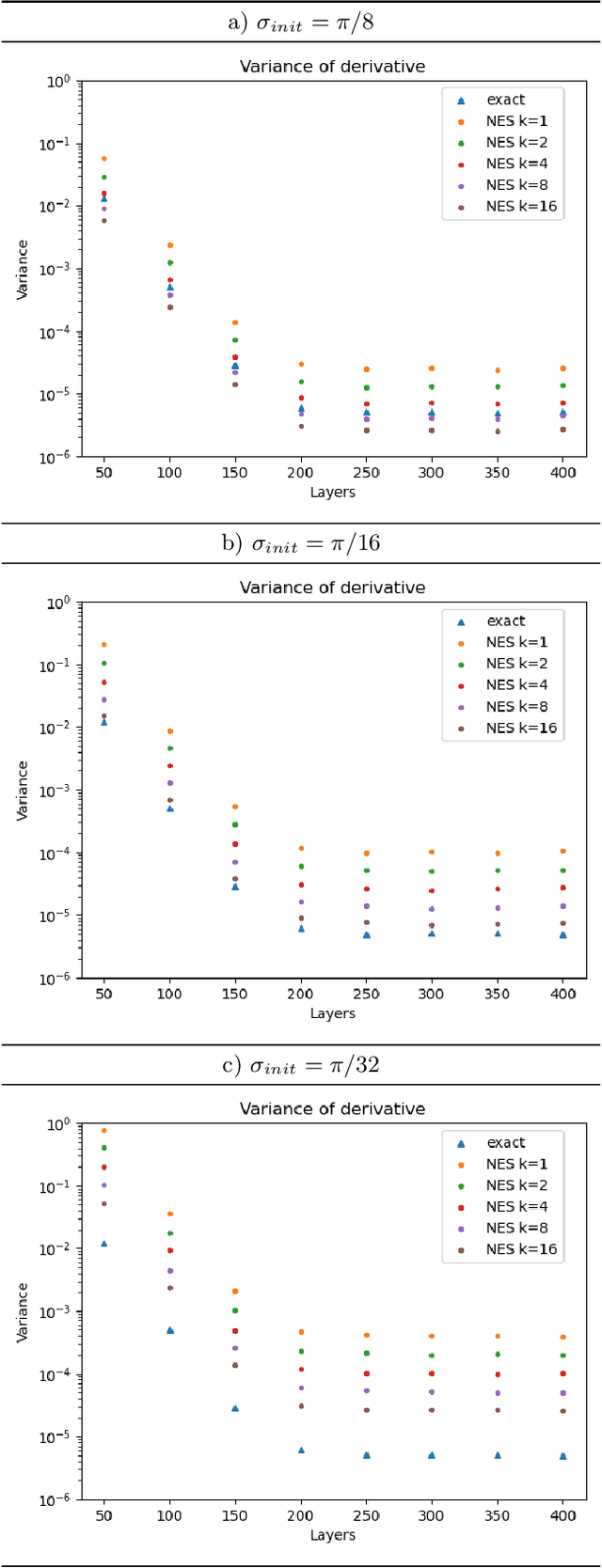

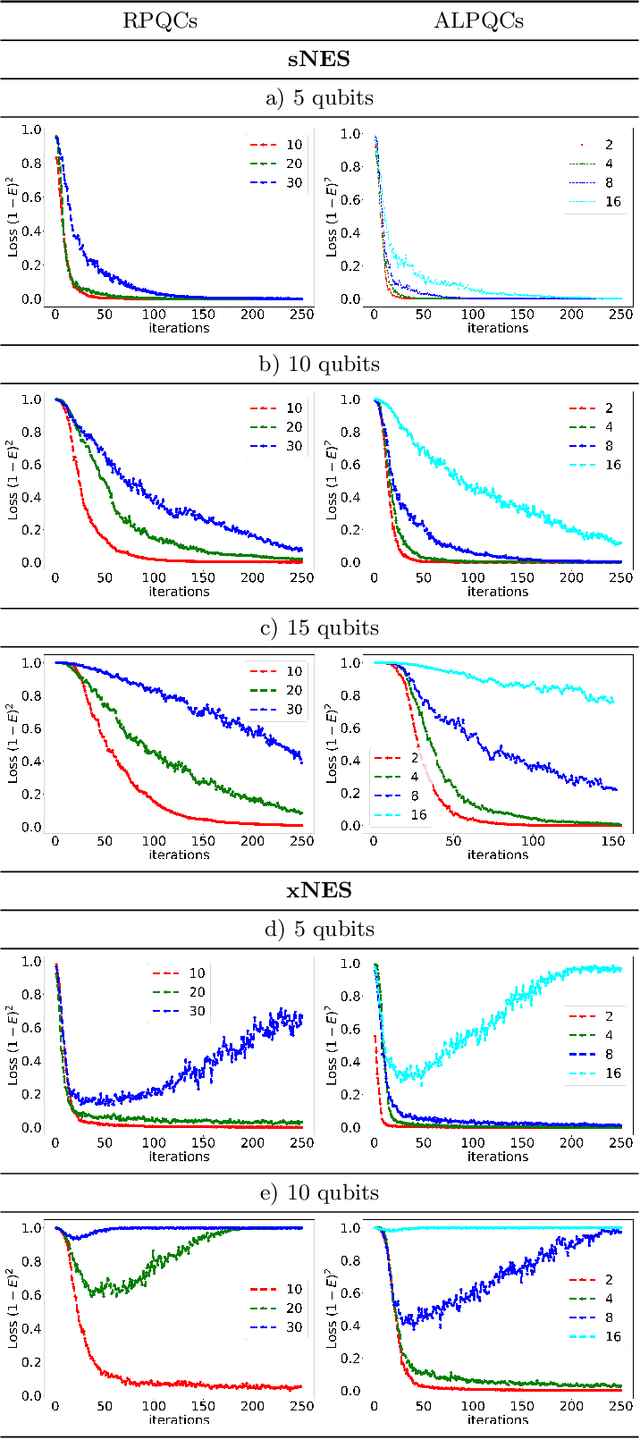

Abstract:Natural evolutionary strategies (NES) are a family of gradient-free black-box optimization algorithms. This study illustrates their use for the optimization of randomly initialized parametrized quantum circuits (PQCs) in the region of vanishing gradients. We show that, using the NES gradient estimator, the exponential decrease in variance can be alleviated. We implement two specific approaches, the exponential and separable natural evolutionary strategies, for parameter optimization of PQCs and compare them against standard gradient descent. We apply them to two different problems: ground state energy estimation using the variational quantum eigensolver (VQE), and state preparation with circuits of varying depth and length. We also introduce batch optimization for circuits with larger depth to extend the use of evolutionary strategies to a larger number of parameters. We achieve accuracy comparable to state-of-the-art optimization techniques in all the above cases with a lower number of circuit evaluations. Our empirical results indicate that one can use NES as a hybrid tool in tandem with other gradient-based methods for optimization of deep quantum circuits in regions with vanishing gradients.
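The idea of estimating a gradient from sampled cost evaluations alone can be sketched as follows. This is a simplified Gaussian-smoothing search-gradient estimator with antithetic sampling, not the exponential or separable NES variants the abstract refers to; `toy_cost` is a hypothetical stand-in for a circuit expectation value, and the function names, step size, and sample counts are illustrative assumptions.

```python
import math
import random


def nes_gradient(f, theta, sigma=0.1, samples=50, rng=None):
    """Estimate the gradient of f at theta from function evaluations only.

    Uses antithetic Gaussian perturbations:
    grad ~ mean over eps of (f(theta + sigma*eps) - f(theta - sigma*eps)) / (2*sigma) * eps
    """
    rng = rng or random.Random(0)
    grad = [0.0] * len(theta)
    for _ in range(samples):
        eps = [rng.gauss(0.0, 1.0) for _ in theta]
        plus = f([t + sigma * e for t, e in zip(theta, eps)])
        minus = f([t - sigma * e for t, e in zip(theta, eps)])
        scale = (plus - minus) / (2.0 * sigma * samples)
        for i, e in enumerate(eps):
            grad[i] += scale * e
    return grad


def toy_cost(theta):
    """Hypothetical stand-in for a circuit cost; minimum value 0 at theta = 0."""
    return sum(1.0 - math.cos(t) for t in theta)


def minimize(f, theta, lr=0.2, steps=100, seed=0):
    """Plain descent loop driven by the sampled gradient estimate."""
    rng = random.Random(seed)
    theta = list(theta)
    for _ in range(steps):
        g = nes_gradient(f, theta, rng=rng)
        theta = [t - lr * gi for t, gi in zip(theta, g)]
    return theta


if __name__ == "__main__":
    start = [1.0, -0.8, 0.5]
    final = minimize(toy_cost, start)
    print("initial cost:", toy_cost(start))
    print("final cost:", toy_cost(final))
```

Each update here costs `2 * samples` evaluations of the objective and never differentiates it, which is why estimators of this kind are described as black-box.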
