Abhijit Sharang

Driver Identification Using Automobile Sensor Data from a Single Turn

Jun 09, 2017

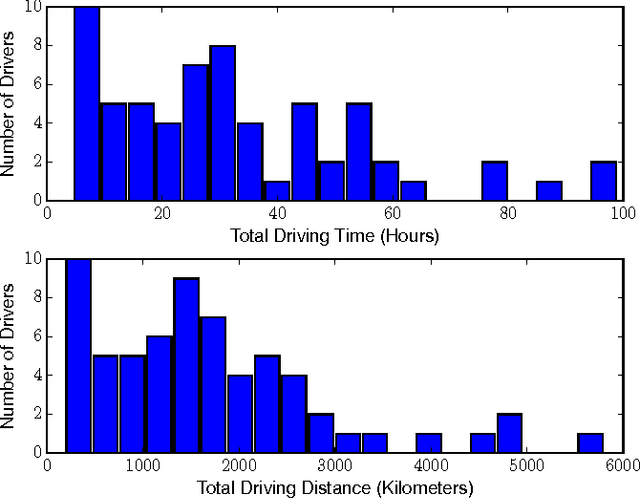

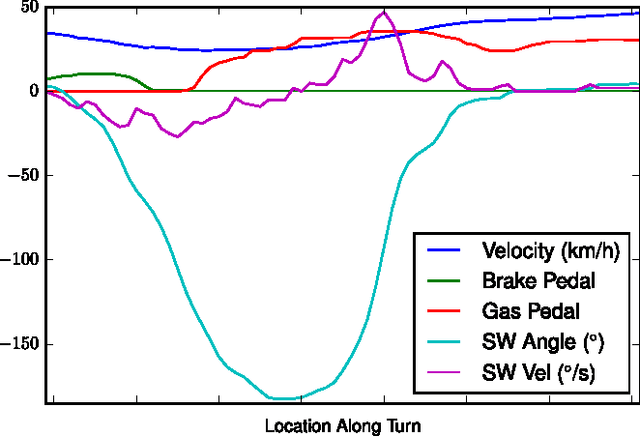

Abstract: As automotive electronics continue to advance, cars are becoming increasingly reliant on sensors to perform everyday driving operations. These sensors are omnipresent and help the car navigate, reduce accidents, and provide comfortable rides. However, they can also be used to learn about the drivers themselves. In this paper, we propose a method to predict, from sensor data collected at a single turn, the identity of a driver out of a given set of individuals. We cast the problem in terms of time series classification, where our dataset contains sensor readings at one turn, repeated several times by multiple drivers. We build a classifier to find unique patterns in each individual's driving style, which are visible in the data even on such a short road segment. To test our approach, we analyze a new dataset collected by AUDI AG and Audi Electronics Venture, where a fleet of test vehicles was equipped with automotive data loggers storing all sensor readings on real roads. We show that turns are particularly well-suited for detecting variations across drivers, especially when compared to straightaways. We then focus on the 12 most frequently made turns in the dataset, which include rural, urban, highway on-ramps, and more, obtaining accurate identification results and learning useful insights about driver behavior in a variety of settings.

Recurrent and Contextual Models for Visual Question Answering

Mar 23, 2017

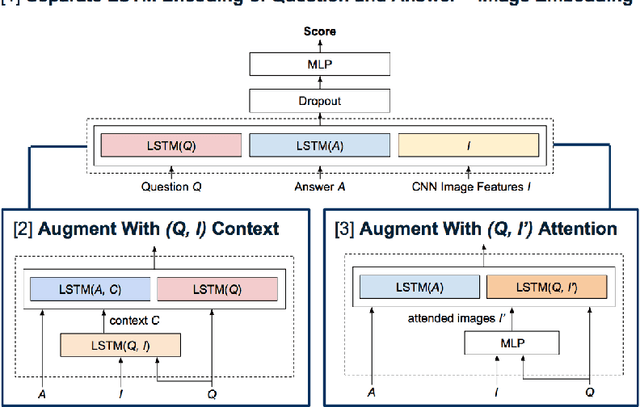

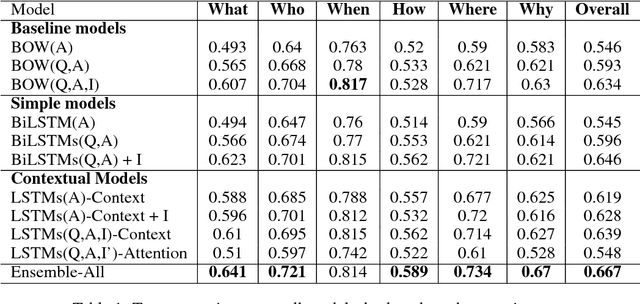

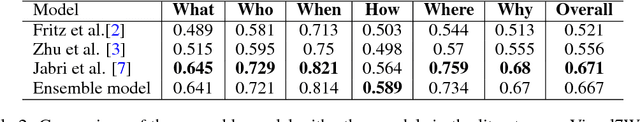

Abstract: We propose a series of recurrent and contextual neural network models for multiple-choice visual question answering on the Visual7W dataset. Motivated by divergent trends in model complexities in the literature, we explore the balance between model expressiveness and simplicity by studying incrementally more complex architectures. We start with LSTM-encoding of input questions and answers; build on this with context generation by LSTM-encodings of neural image and question representations and attention over images; and evaluate the diversity and predictive power of our models and the ensemble thereof. All models are evaluated against a simple baseline inspired by the current state-of-the-art, consisting of a simple concatenation of bag-of-words and CNN representations for the text and images, respectively. Generally, we observe marked variation in image-reasoning performance between our models that is not obvious from their overall performance, as well as evidence of dataset bias. Our standalone models achieve accuracies up to $64.6\%$, while the ensemble of all models achieves the best accuracy of $66.67\%$, within $0.5\%$ of the current state-of-the-art for Visual7W.

Using machine learning for medium frequency derivative portfolio trading

Dec 19, 2015

Abstract: We use machine learning to design a medium-frequency trading strategy for a portfolio of 5-year and 10-year US Treasury note futures. We formulate this as a classification problem where we predict the weekly direction of movement of the portfolio using features extracted from a deep belief network trained on technical indicators of the portfolio constituents. Our experiments show that the resulting pipeline is effective in making profitable trades.
