Abeer Alessa

Cognitive Bias in High-Stakes Decision-Making with LLMs

Feb 25, 2024

Abstract: Large language models (LLMs) offer significant potential as tools to support an expanding range of decision-making tasks. However, because they are trained on human-created data, LLMs can both inherit societal biases against protected groups and be subject to cognitive bias. Such human-like bias can impede fair and explainable decisions made with LLM assistance. Our work introduces BiasBuster, a framework designed to uncover, evaluate, and mitigate cognitive bias in LLMs, particularly in high-stakes decision-making tasks. Inspired by prior research in psychology and cognitive science, we develop a dataset containing 16,800 prompts to evaluate different cognitive biases (e.g., prompt-induced, sequential, inherent). We test various bias mitigation strategies and propose a novel method that uses LLMs to debias their own prompts. Our analysis provides a comprehensive picture of the presence and effects of cognitive bias across different commercial and open-source models. We demonstrate that our self-help debiasing effectively mitigates cognitive bias without having to manually craft examples for each bias type.

Towards Designing a ChatGPT Conversational Companion for Elderly People

Apr 18, 2023

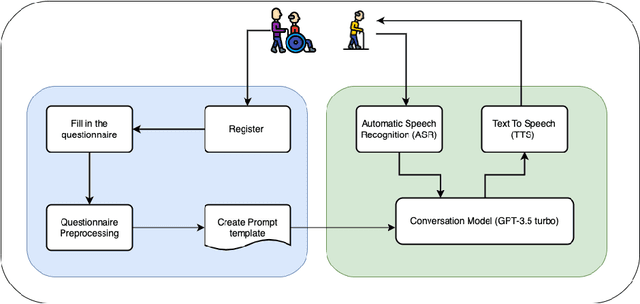

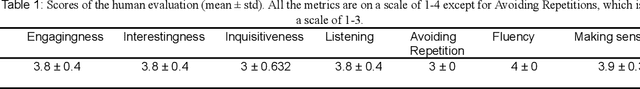

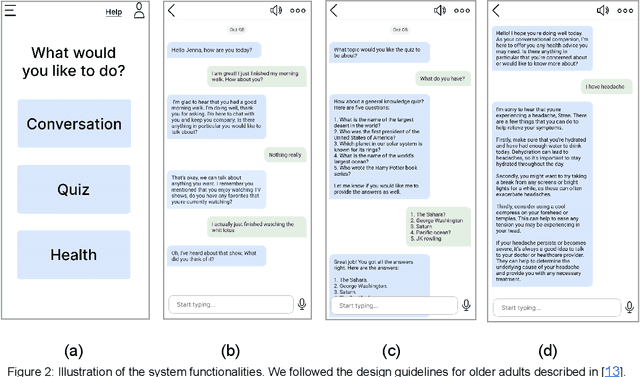

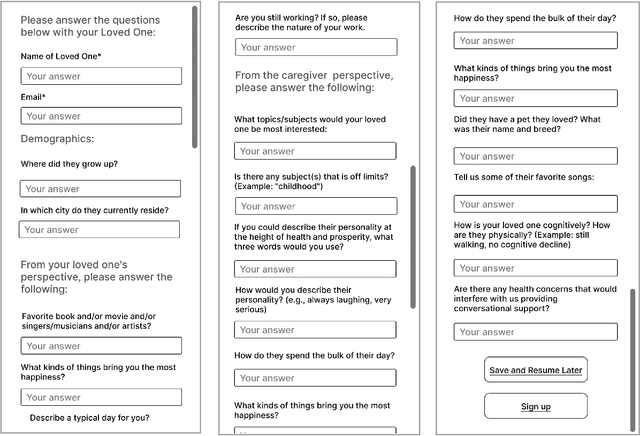

Abstract: Loneliness and social isolation are serious and widespread problems among older people, affecting their physical and mental health, quality of life, and longevity. In this paper, we propose a ChatGPT-based conversational companion system for elderly people. The system is designed to provide companionship and help reduce feelings of loneliness and social isolation. In a preliminary evaluation, the system generated responses that were relevant to the elderly personas created for the study. However, it is essential to acknowledge the limitations of ChatGPT, such as potential biases and misinformation, and to consider the ethical implications of using AI-based companionship for the elderly, including privacy concerns.
