What Would You Do? Acting by Learning to Predict


Mar 08, 2017

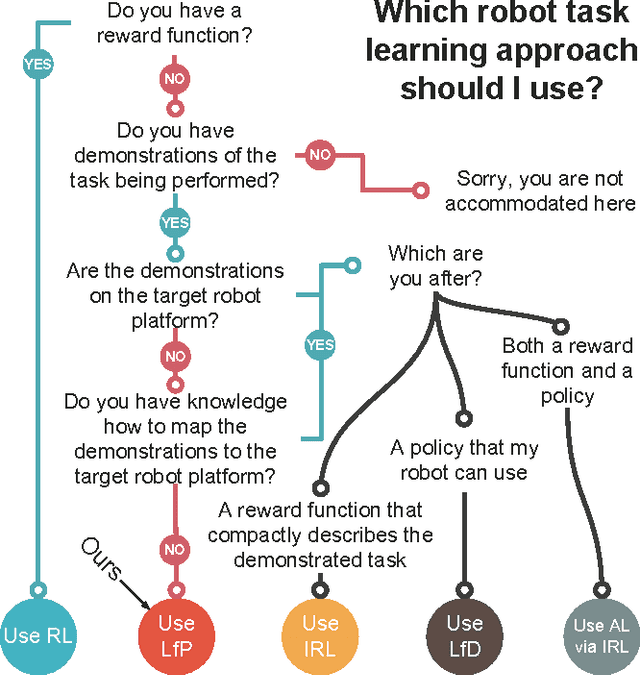

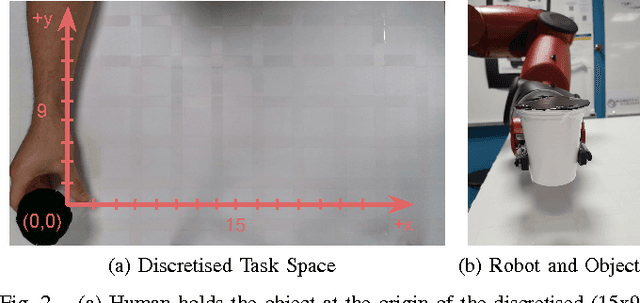

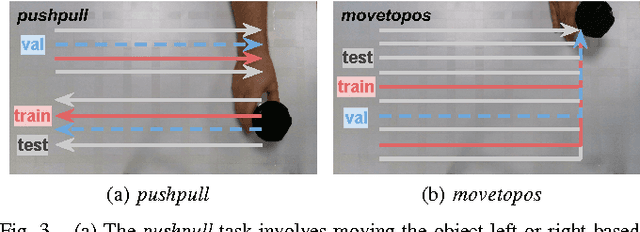

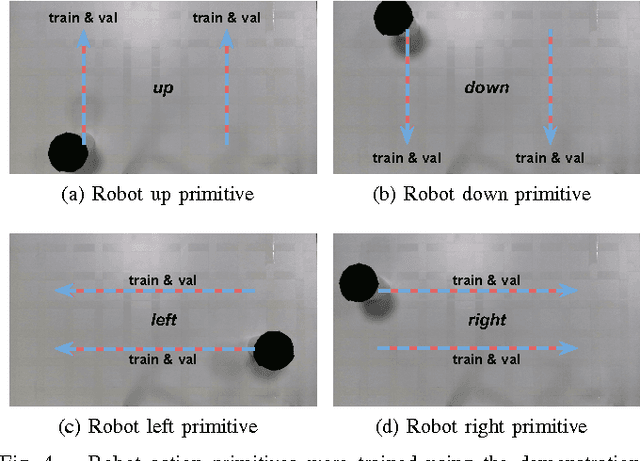

We propose to learn tasks directly from visual demonstrations by learning to predict the outcome of human and robot actions on an environment. We enable a robot to physically perform a human-demonstrated task without knowledge of the human's thought processes or actions, using only their visually observable state transitions. We evaluate our approach on two table-top object manipulation tasks and demonstrate generalisation to previously unseen states. Our approach reduces the priors required to implement a robot task learning system compared with the existing approaches of Learning from Demonstration, Reinforcement Learning and Inverse Reinforcement Learning.
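The abstract does not spell out the model or the action-selection rule, so the following is only a minimal sketch of the general idea of acting by prediction, not the authors' implementation. It assumes a hypothetical toy setting in which the state is an object's 2-D position, each discrete action shifts the object by a fixed offset, and the robot picks the action whose predicted outcome is closest to the demonstrated next state. The names `ACTIONS`, `collect_robot_experience`, `fit_outcome_model` and `choose_action` are illustrative and do not come from the paper.

```python
import numpy as np

# Hypothetical toy setup (an assumption, not from the paper): the state is an
# object's 2-D position, and each discrete action pushes it by a fixed offset.
ACTIONS = np.array([[0.1, 0.0], [-0.1, 0.0], [0.0, 0.1], [0.0, -0.1]])


def collect_robot_experience(n_samples, rng):
    """Simulate the robot trying random actions and observing the outcomes."""
    states = rng.uniform(-1.0, 1.0, size=(n_samples, 2))
    action_ids = rng.integers(0, len(ACTIONS), size=n_samples)
    next_states = states + ACTIONS[action_ids]
    return states, action_ids, next_states


def fit_outcome_model(states, action_ids, next_states):
    """Estimate the mean state change caused by each action."""
    deltas = next_states - states
    return np.stack([deltas[action_ids == a].mean(axis=0)
                     for a in range(len(ACTIONS))])


def choose_action(model, state, demonstrated_next_state):
    """Pick the action whose predicted outcome best matches the demonstration."""
    predicted = state + model  # one predicted next state per action
    errors = np.linalg.norm(predicted - demonstrated_next_state, axis=1)
    return int(np.argmin(errors))


rng = np.random.default_rng(0)
model = fit_outcome_model(*collect_robot_experience(200, rng))

# A demonstrated transition: only the before and after states are observed,
# never the demonstrator's action.
state = np.array([0.3, -0.2])
demonstrated_next_state = np.array([0.3, -0.1])
chosen = choose_action(model, state, demonstrated_next_state)
print("chosen action index:", chosen)
```

In this sketch the demonstration supplies only a before and after state, and the robot's own learned outcome model bridges the gap to an executable action. A system working from camera images would need a learned visual predictor in place of the per-action mean offset used here.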

* Submitted to International Conference on Intelligent Robots and Systems (IROS 2017)
