Weight-dependent Gates for Network Pruning


Jul 04, 2020

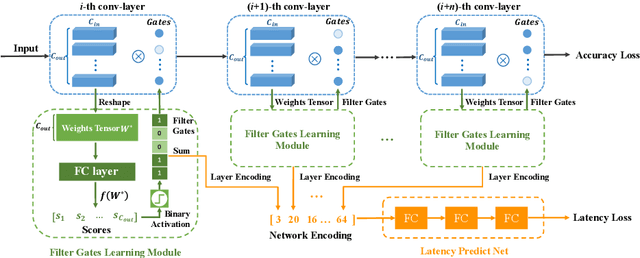

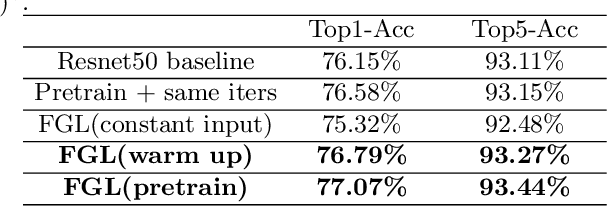

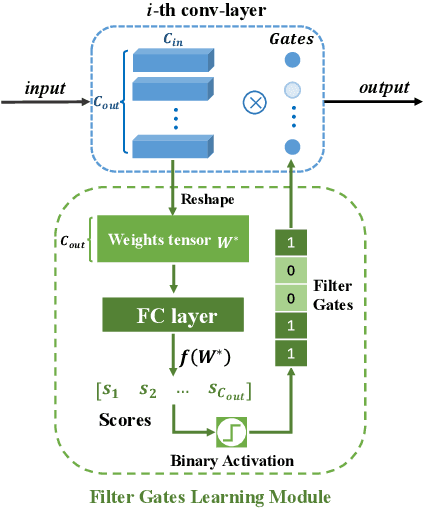

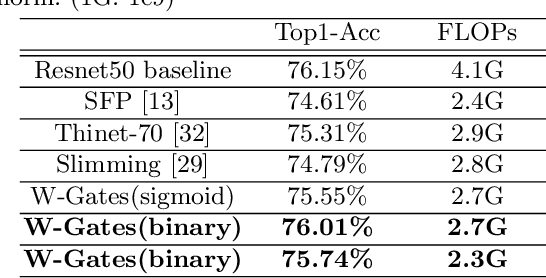

In this paper, we propose a simple and effective network pruning framework that introduces novel weight-dependent gates (W-Gates) to prune filters adaptively. We argue that the pruning decision should depend on the convolutional weights; in other words, it should be a learnable function of the filter weights. We therefore construct the Filter Gates Learning Module (FGL), which learns from the convolutional weights and produces binary W-Gates that prune or keep each filter automatically. To prune the network under a hardware constraint, we train a Latency Predict Net (LPNet) to estimate the hardware latency of candidate pruned networks. Using LPNet, we can optimize the W-Gates and the pruning ratio of each layer under a latency constraint. The whole framework is differentiable and can be optimized with gradient-based methods to obtain a compact network with a better trade-off between accuracy and efficiency. We demonstrate the effectiveness of our method on ResNet34, ResNet50 and MobileNet V2, achieving up to 1.33/1.28/1.1 higher Top-1 accuracy, respectively, with lower hardware latency on ImageNet. Compared with state-of-the-art pruning methods, our method achieves superior performance.
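The idea of a gate that is a function of the filter weights can be illustrated with a minimal forward-pass sketch. It is not the paper's FGL architecture: the single linear scoring layer, the threshold at zero, and every name below (`gate_score`, `binary_gate`, `soft_gate`, `w_gates`, `apply_gates`) are assumptions made for illustration. The sigmoid surrogate only hints at how a binary decision could stay trainable by gradient descent, for example through a straight-through estimator, which is also an assumption here.

```python
import math


def gate_score(filter_weights, fc_weights, fc_bias):
    """Map one filter's flattened weights to a scalar score.

    A single linear layer stands in for the learned gate function
    (hypothetical; the real module may be deeper).
    """
    return sum(w * a for w, a in zip(filter_weights, fc_weights)) + fc_bias


def binary_gate(score):
    """Forward pass of the gate: 1 keeps the filter, 0 prunes it."""
    return 1 if score > 0 else 0


def soft_gate(score):
    """Sigmoid surrogate of the step function.

    During training, its gradient could replace the step function's
    zero gradient (straight-through estimator, assumed here).
    """
    return 1.0 / (1.0 + math.exp(-score))


def w_gates(layer_filters, fc_weights, fc_bias):
    """Compute one binary gate per filter of a layer."""
    return [binary_gate(gate_score(f, fc_weights, fc_bias)) for f in layer_filters]


def apply_gates(feature_maps, gates):
    """Zero out the output channels whose gate is 0."""
    return [[g * v for v in channel] for channel, g in zip(feature_maps, gates)]


# Toy layer with three filters, each flattened to four weights.
layer_filters = [
    [0.9, 0.8, 0.7, 0.6],
    [0.01, -0.02, 0.0, 0.01],
    [-0.5, -0.4, -0.6, -0.3],
]
fc_weights = [1.0, 1.0, 1.0, 1.0]  # gate parameters (learned in practice)
fc_bias = -0.1

gates = w_gates(layer_filters, fc_weights, fc_bias)
feature_maps = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # one list per output channel
pruned = apply_gates(feature_maps, gates)
keep_ratio = sum(gates) / len(gates)  # fraction of filters kept in this layer
```

In this toy layer only the first filter receives a positive score, so its channel is kept and the other two are zeroed. The per-layer `keep_ratio` is the kind of quantity a latency predictor could take as input when the gates are optimized under a latency constraint.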
