Web-based Elicitation of Human Perception on mixup Data


Nov 02, 2022

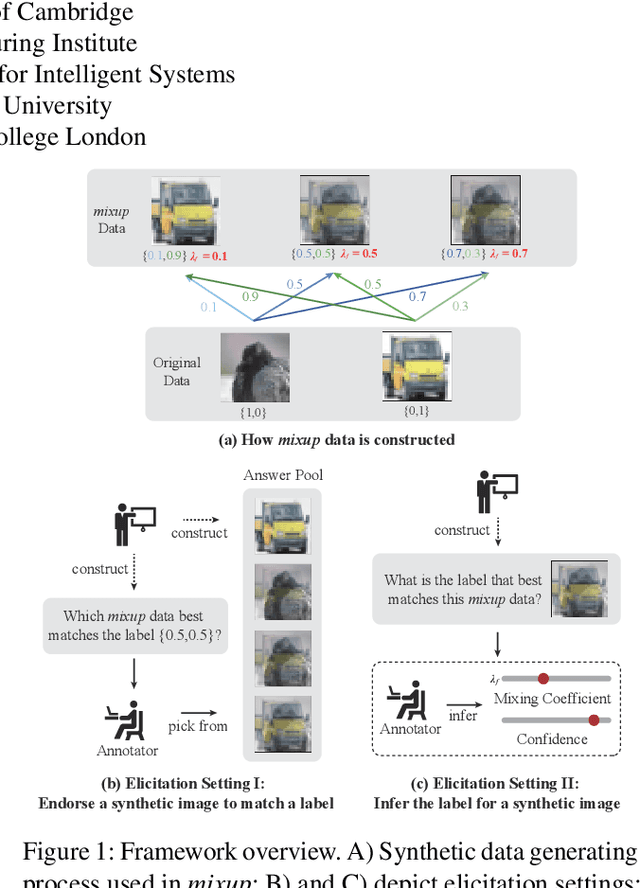

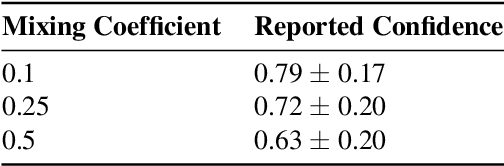

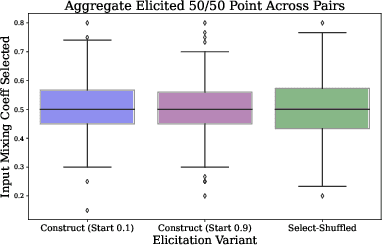

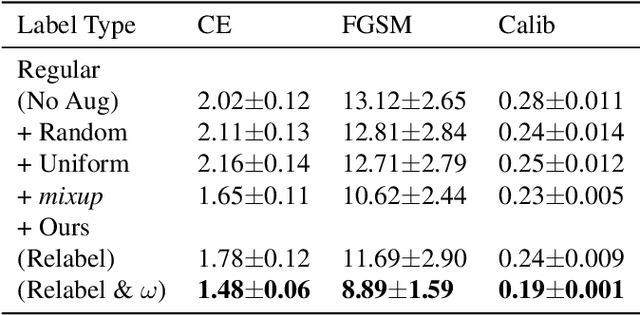

Synthetic data is proliferating on the web and powering many advances in machine learning. However, it is not always clear whether synthetic labels are perceptually sensible to humans. The web provides a platform for taking a step towards addressing this question through online elicitation. We design a series of elicitation interfaces, which we release as HILL MixE Suite, and recruit 159 participants to provide perceptual judgments over the kinds of synthetic data constructed during mixup training, a powerful regularizer shown to improve model robustness, generalization, and calibration. We find that human perception does not consistently align with the labels traditionally used for synthetic points, and we begin to demonstrate how these findings could be applied to increase the reliability of downstream models. We release all elicited judgments in a new data hub we call H-Mix.
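For readers unfamiliar with mixup, the sketch below illustrates how a synthetic point and its label are usually constructed in the standard formulation: both the inputs and the one-hot labels are linearly interpolated with the same coefficient, typically drawn from a Beta distribution. This is a minimal illustration and not code from HILL MixE Suite or H-Mix; the function names `mixup_pair` and `sample_lam`, the toy feature vectors, and the default `alpha` are assumptions made for the example.

```python
import random


def mixup_pair(x_a, y_a, x_b, y_b, lam):
    """Blend two examples and their one-hot labels with the same weight lam.

    Hypothetical helper for illustration: x_* are flat feature lists,
    y_* are one-hot label lists, lam is the mixing coefficient in [0, 1].
    """
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must lie in [0, 1]")
    x_mix = [lam * a + (1.0 - lam) * b for a, b in zip(x_a, x_b)]
    y_mix = [lam * a + (1.0 - lam) * b for a, b in zip(y_a, y_b)]
    return x_mix, y_mix


def sample_lam(alpha=1.0, rng=random):
    """Draw a mixing coefficient from Beta(alpha, alpha); alpha=1.0 is an assumed default."""
    return rng.betavariate(alpha, alpha)


# Toy two-class example with 4-dimensional "images" (made-up values).
x_a, y_a = [1.0, 0.0, 0.0, 0.0], [1.0, 0.0]
x_b, y_b = [0.0, 0.0, 0.0, 1.0], [0.0, 1.0]

# A fixed coefficient keeps the example deterministic.
x_mix, y_mix = mixup_pair(x_a, y_a, x_b, y_b, lam=0.25)

# During training the coefficient would normally be sampled instead.
lam_sampled = sample_lam(alpha=1.0)
```

The interpolated label `y_mix` is the kind of synthetic label the abstract refers to as traditionally used; the elicited human judgments are compared against labels of this form.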
