VOLT: Improving Vocabularization via Optimal Transport for Machine Translation

Paper and Code

Dec 31, 2020

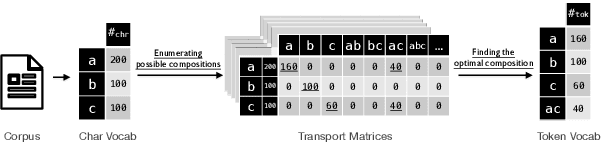

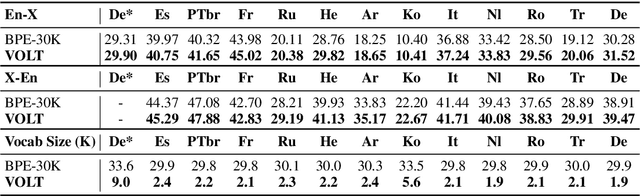

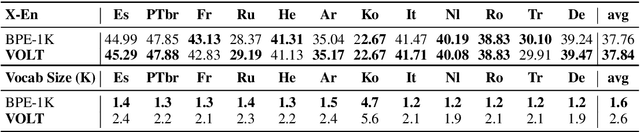

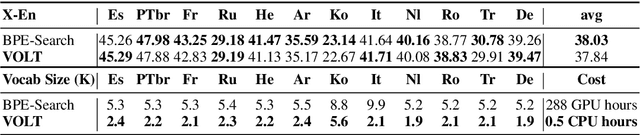

It is well accepted that the choice of token vocabulary strongly affects the performance of machine translation. However, due to expensive trial costs, most studies only conduct simple trials with dominant approaches (e.g., BPE) and commonly used vocabulary sizes. In this paper, we find an exciting relation between an information-theoretic feature and BLEU scores. With this observation, we formulate the quest of vocabularization (finding the best token dictionary with a proper size) as an optimal transport problem. We then propose VOLT, a simple and efficient vocabularization solution that does not require full, costly trial training. We evaluate our approach on multiple machine translation tasks, including WMT-14 English-German translation, TED bilingual translation, and TED multilingual translation. Empirical results show that VOLT outperforms widely used vocabularies in diverse scenarios. For example, VOLT achieves a 70% vocabulary size reduction and a 0.6 BLEU gain on English-German translation. VOLT also has low resource consumption: compared to naive BPE-search, it reduces the search time from 288 GPU hours to 0.5 CPU hours.
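For readers unfamiliar with optimal transport, the following is a minimal, generic sketch of an entropy-regularized transport problem solved with Sinkhorn iterations. It is not VOLT's formulation: the abstract does not specify the cost matrix, the marginals, or the solver, so the function name `sinkhorn`, the toy `cost`, `a`, `b`, and the `reg` value are all illustrative assumptions.

```python
import numpy as np

def sinkhorn(cost, a, b, reg=0.5, n_iters=500):
    """Generic entropy-regularized optimal transport (illustrative only).

    Returns a non-negative plan whose row sums match `a` and whose
    column sums approximately match `b`.
    """
    K = np.exp(-cost / reg)          # Gibbs kernel derived from the cost matrix
    u = np.ones_like(a)
    for _ in range(n_iters):
        v = b / (K.T @ u)            # rescale to match column marginals
        u = a / (K @ v)              # rescale to match row marginals
    return u[:, None] * K * v[None, :]

# Hypothetical toy problem: 3 sources, 2 targets, both marginals sum to 1.
a = np.array([0.5, 0.3, 0.2])
b = np.array([0.6, 0.4])
cost = np.array([[0.0, 1.0],
                 [1.0, 0.0],
                 [0.5, 0.5]])

plan = sinkhorn(cost, a, b)
total_cost = float((plan * cost).sum())
```

In a vocabularization setting one would presumably replace the toy marginals and cost with quantities derived from corpus statistics, but that mapping is defined in the paper rather than in this abstract.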
