Unveiling the Ambiguity in Neural Inverse Rendering: A Parameter Compensation Analysis


Apr 19, 2024

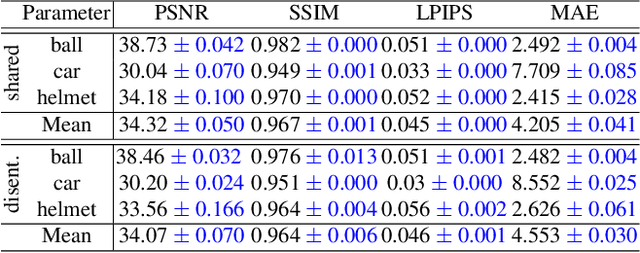

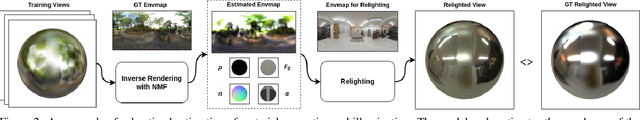

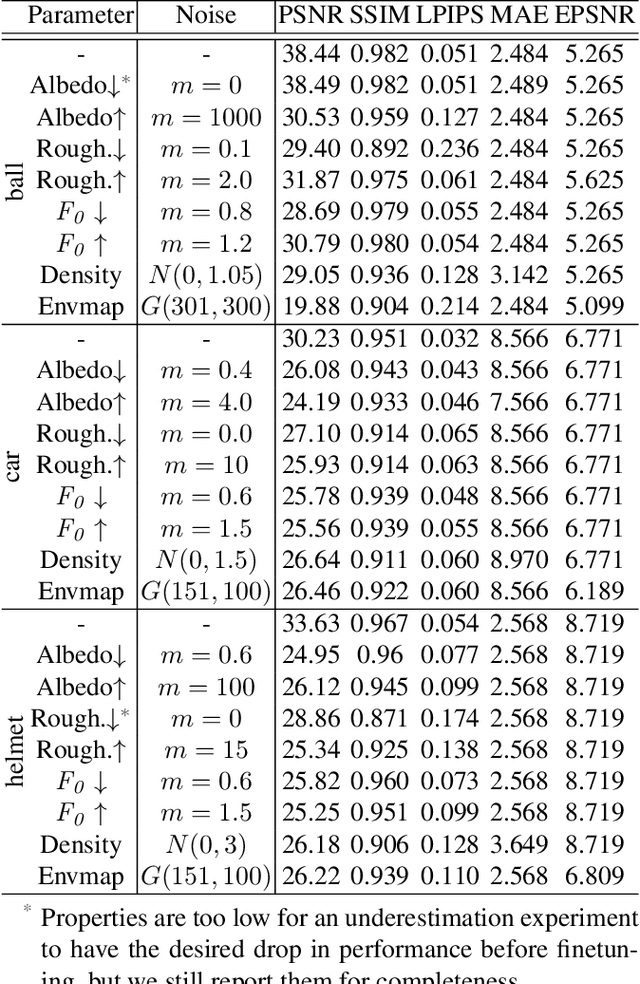

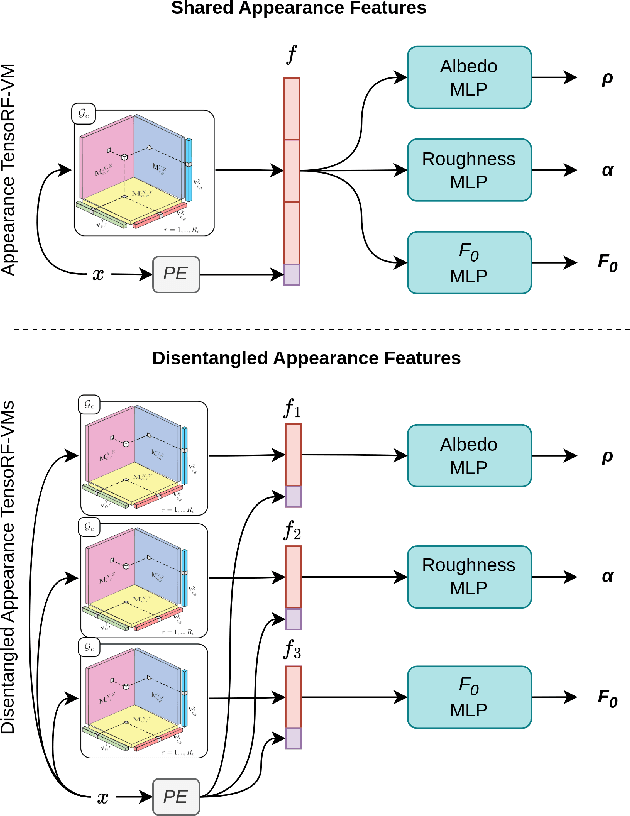

Inverse rendering aims to reconstruct the scene properties of objects solely from multiview images. However, it is an ill-posed problem, prone to ambiguous estimates that deviate from physically accurate representations. In this paper, we use Neural Microfacet Fields (NMF), a state-of-the-art neural inverse rendering method, to illustrate this inherent ambiguity. We propose an evaluation framework that assesses the degree of compensation, or interaction, between the estimated scene properties, with the aim of exploring the mechanisms behind this ill-posed problem and potential mitigation strategies. Specifically, we introduce artificial perturbations to one scene property and examine how adjusting another property can compensate for them. To facilitate such experiments, we introduce a disentangled NMF in which material properties are independent. The experimental findings underscore the intrinsic ambiguity of neural inverse rendering and highlight the importance of providing additional guidance through geometry, material, and illumination priors.
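The perturb-then-compensate idea can be illustrated with a toy example. The sketch below is not NMF and is not taken from the paper: it assumes a hypothetical single-albedo Lambertian model in which each pixel intensity is `albedo * light * cos(theta)`, and all function names and values are illustrative. It perturbs the albedo, then refits only the light intensity by least squares, and shows that the rendered pixels can still match the target exactly.

```python
import random


def render(albedo, light, cosines):
    """Toy Lambertian shading (assumed model): intensity = albedo * light * cos(theta)."""
    return [albedo * light * c for c in cosines]


def compensate_light(target, albedo, cosines):
    """Least-squares fit of a scalar light intensity, holding the albedo fixed."""
    basis = [albedo * c for c in cosines]
    numerator = sum(b * t for b, t in zip(basis, target))
    denominator = sum(b * b for b in basis)
    return numerator / denominator


# Hypothetical ground-truth scene: one albedo, one light intensity, random shading angles.
rng = random.Random(0)
cosines = [rng.uniform(0.1, 1.0) for _ in range(256)]
true_albedo, true_light = 0.6, 2.0
target = render(true_albedo, true_light, cosines)

# Step 1: artificially perturb one property (albedo scaled by 1.5).
perturbed_albedo = 1.5 * true_albedo

# Step 2: adjust another property (light) to compensate for the perturbation.
fitted_light = compensate_light(target, perturbed_albedo, cosines)

# Step 3: measure how much of the perturbation remains visible in the images.
rerendered = render(perturbed_albedo, fitted_light, cosines)
residual = max(abs(a - b) for a, b in zip(rerendered, target))

print(f"fitted light: {fitted_light:.4f}, max pixel residual: {residual:.2e}")
```

In this toy model the fitted light is the true light divided by the perturbation factor and the residual is zero up to floating-point error, so the images alone cannot distinguish the two explanations. Real inverse rendering involves far richer geometry, material, and illumination models, where compensation is typically only partial.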
