Unsupervised Anomaly Detection with Local-Sensitive VQVAE and Global-Sensitive Transformers

Paper and Code

Mar 29, 2023

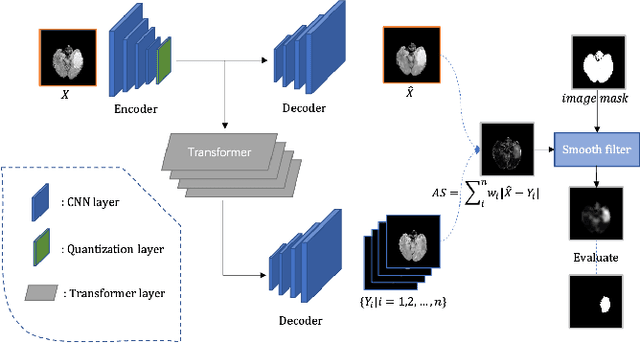

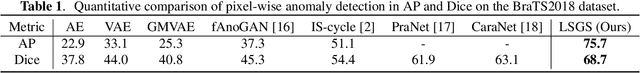

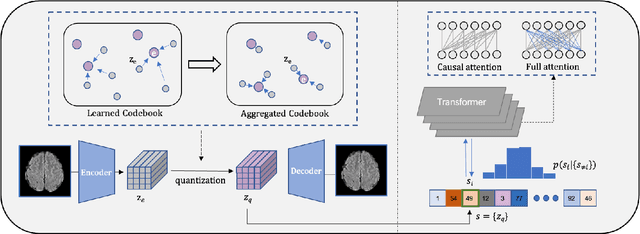

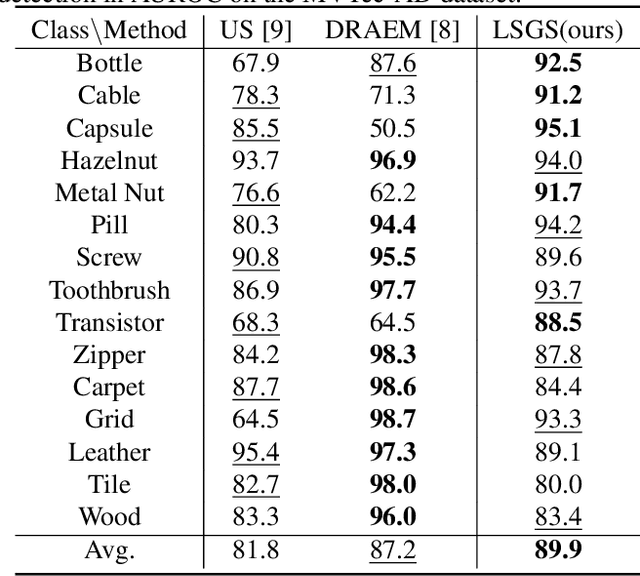

Unsupervised anomaly detection (UAD) is widely used in industrial and medical applications, where it reduces the cost of manual annotation and improves the efficiency of disease diagnosis. Recently, deep auto-encoders and their variants have demonstrated advantages in many UAD scenarios. Trained on normal data, these models are expected to locate anomalies by producing a higher reconstruction error for abnormal areas than for normal ones. However, this assumption does not always hold, because the generalization capability of these models is uncontrollable. To solve this problem, we present LSGS, a method that builds on the Vector Quantised-Variational Autoencoder (VQVAE) with a novel aggregated codebook and on transformers with global attention. In this work, the VQVAE focuses on feature extraction and image reconstruction, while the transformers fit the manifold and locate anomalies in the latent space. Then, leveraging the generated encoding sequences that conform to a normal distribution, we can reconstruct a more accurate image for locating the anomalies. Experiments on various datasets demonstrate the effectiveness of the proposed method.
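The pipeline described above can be illustrated with a rough NumPy sketch. It is not the LSGS implementation: the aggregated codebook and the transformers are not modeled, and the function names, the `probs` array (a stand-in for whatever per-position code probabilities a latent-space model would output), and the `threshold` parameter are all assumptions made for illustration. The sketch shows only the generic steps: nearest-codebook quantization, replacement of codes that the latent model finds unlikely, and a per-pixel reconstruction-error map.

```python
import numpy as np


def quantize(features, codebook):
    """Return, for each feature vector, the index of its nearest codebook entry.

    features: (N, D) array of encoder outputs (hypothetical).
    codebook: (K, D) array of code vectors (hypothetical).
    """
    # Squared Euclidean distance between every feature and every code: (N, K).
    d2 = ((features[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)
    return d2.argmin(axis=1)


def resample_unlikely(indices, probs, threshold):
    """Replace codes with probability below `threshold` by the most likely code.

    probs: (N, K) per-position distribution over codes; an assumed stand-in
    for the output of a model fitted to the latent space.
    """
    idx = np.asarray(indices)
    p_observed = probs[np.arange(len(idx)), idx]
    return np.where(p_observed < threshold, probs.argmax(axis=1), idx)


def anomaly_map(image, reconstruction):
    """Per-pixel absolute reconstruction error; larger values suggest anomalies."""
    return np.abs(np.asarray(image, dtype=float) - np.asarray(reconstruction, dtype=float))
```

In a complete system, the corrected indices would be decoded back into an image, and `anomaly_map` would compare that reconstruction with the input.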
