Unlimited Road-scene Synthetic Annotation (URSA) Dataset


Jul 16, 2018

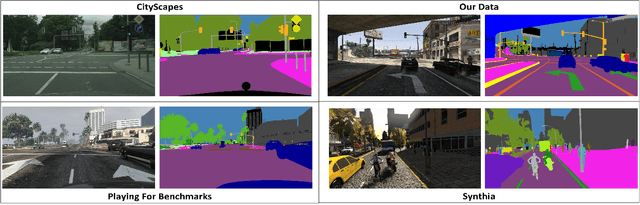

In training deep neural networks for semantic segmentation, the main limiting factor is the small amount of ground truth annotation data available in existing datasets. Such data is scarce because of the time and human effort required to label real images accurately and consistently at the pixel level. Modern sandbox video game engines provide open-world environments where traffic and pedestrians behave in a pseudo-realistic manner, which lends itself well to collecting a believable road-scene dataset. Using open-source tools and resources from single-player modding communities, we provide a method for persistent, ground truth asset annotation of a game world. By collecting a synthetic dataset containing upwards of $1,000,000$ images, we demonstrate the real-time, on-demand, ground truth data annotation capability of our method. Supplementing the Cityscapes dataset with this synthetic data, we show that our data generation method provides qualitative as well as quantitative improvements for training networks over previous methods that use video games as a surrogate.
