Type-supervised sequence labeling based on the heterogeneous star graph for named entity recognition

Paper and Code

Oct 19, 2022

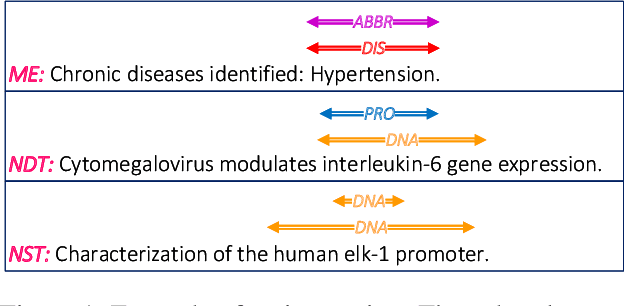

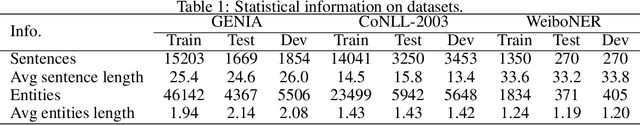

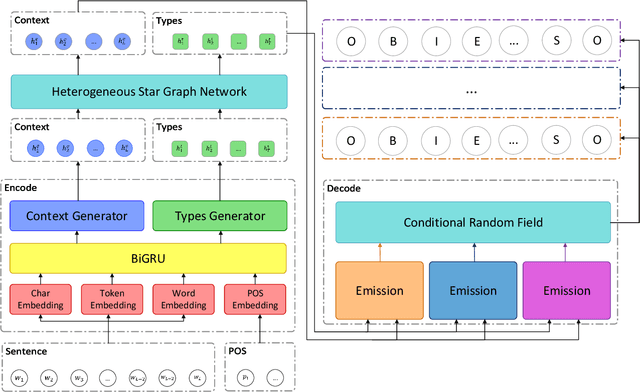

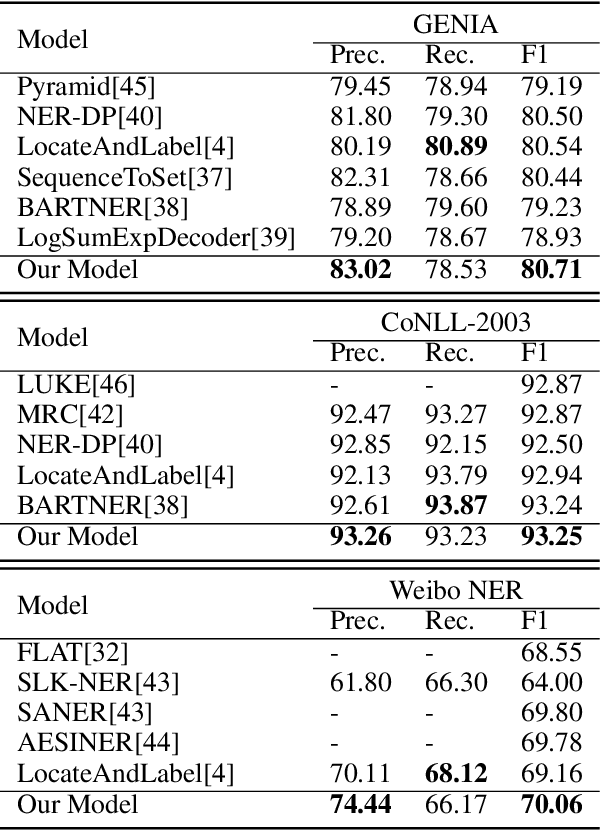

Named entity recognition is a fundamental task in natural language processing, identifying the span and category of entities in unstructured texts. The traditional sequence labeling methodology ignores the nested entities, i.e. entities included in other entity mentions. Many approaches attempt to address this scenario, most of which rely on complex structures or have high computation complexity. The representation learning of the heterogeneous star graph containing text nodes and type nodes is investigated in this paper. In addition, we revise the graph attention mechanism into a hybrid form to address its unreasonableness in specific topologies. The model performs the type-supervised sequence labeling after updating nodes in the graph. The annotation scheme is an extension of the single-layer sequence labeling and is able to cope with the vast majority of nested entities. Extensive experiments on public NER datasets reveal the effectiveness of our model in extracting both flat and nested entities. The method achieved state-of-the-art performance on both flat and nested datasets. The significant improvement in accuracy reflects the superiority of the multi-layer labeling strategy.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge