Trust Region Based Adversarial Attack on Neural Networks

Paper and Code

Dec 16, 2018

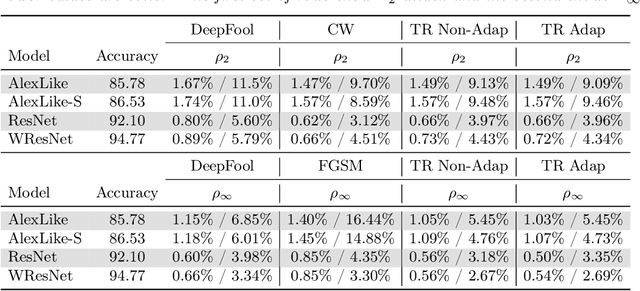

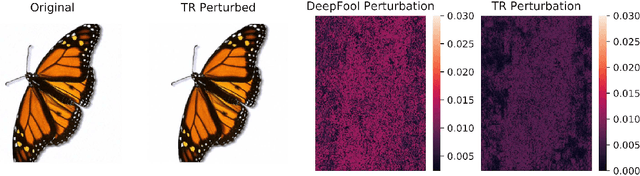

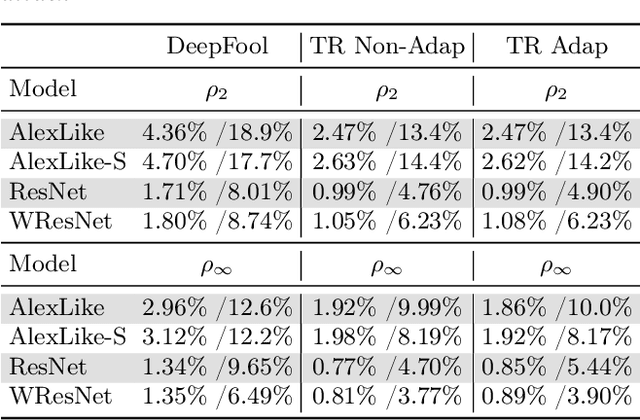

Deep Neural Networks are quite vulnerable to adversarial perturbations. Current state-of-the-art adversarial attack methods typically require very time consuming hyper-parameter tuning, or require many iterations to solve an optimization based adversarial attack. To address this problem, we present a new family of trust region based adversarial attacks, with the goal of computing adversarial perturbations efficiently. We propose several attacks based on variants of the trust region optimization method. We test the proposed methods on Cifar-10 and ImageNet datasets using several different models including AlexNet, ResNet-50, VGG-16, and DenseNet-121 models. Our methods achieve comparable results with the Carlini-Wagner (CW) attack, but with significant speed up of up to $37\times$, for the VGG-16 model on a Titan Xp GPU. For the case of ResNet-50 on ImageNet, we can bring down its classification accuracy to less than 0.1\% with at most $1.5\%$ relative $L_\infty$ (or $L_2$) perturbation requiring only $1.02$ seconds as compared to $27.04$ seconds for the CW attack. We have open sourced our method which can be accessed at [1].

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge