Trajectory Optimization for Coordinated Human-Robot Collaboration


Oct 10, 2019

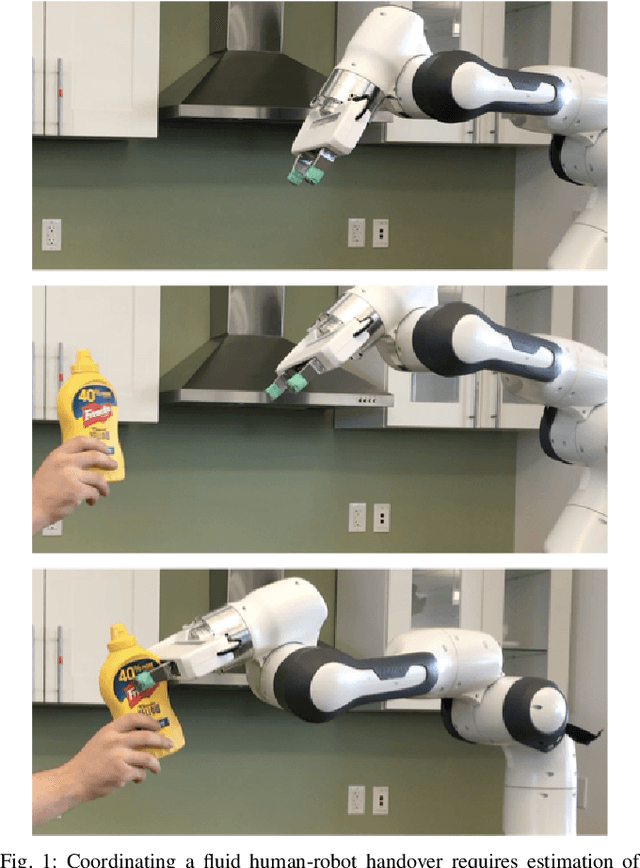

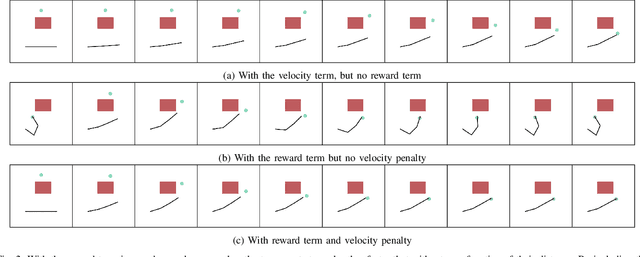

Effective human-robot collaboration requires informed anticipation. The robot must simultaneously anticipate what the human will do and react instantaneously and fluidly when its predictions are wrong. Moreover, the robot must plan its own actions in a way that accounts for its predictions of the human while also recognizing that the human's behavior will change in response to what the robot does. This back-and-forth game of prediction and planning is extremely difficult to model well using standard techniques. In this work, we exploit the duality between behavior prediction and control explored in the Inverse Optimal Control (IOC) literature to design a novel Model Predictive Control (MPC) algorithm that simultaneously plans the robot's behavior and predicts the human's behavior in a joint optimal control model. In the process, we develop a novel technique for bridging finite-horizon motion optimizers to the problem of spatially consistent continuous optimization using explicit sparse reward terms, i.e., negative costs. We demonstrate the framework on a collection of cooperative human-robot handover experiments, both in simulation and in a real-world handover scenario.
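To make the idea of planning and predicting in one joint optimal control problem more concrete, here is a minimal toy sketch. It is not the paper's algorithm: it assumes two 1D point agents with single-integrator dynamics, a hand-picked quadratic cost (control effort plus a "meet at the same place" term), and plain gradient descent with finite differences in place of a real motion optimizer. The sparse reward terms mentioned in the abstract are not modeled. All names (`rollout`, `joint_cost`, `optimize`, `run_mpc`) and all weights are hypothetical.

```python
def rollout(x0, controls, dt):
    """Integrate x[t+1] = x[t] + dt * u[t]; returns the position after each step."""
    xs, x = [], x0
    for u in controls:
        x += dt * u
        xs.append(x)
    return xs


def joint_cost(z, xr0, xh0, dt, w_meet=10.0, w_effort=1.0):
    """One cost over the stacked controls z = robot controls + human controls.

    Assumed toy cost: both agents pay for effort and for being apart.
    """
    h = len(z) // 2
    xr = rollout(xr0, z[:h], dt)   # planned robot positions
    xh = rollout(xh0, z[h:], dt)   # predicted human positions
    effort = sum(u * u for u in z)
    meet = sum((a - b) ** 2 for a, b in zip(xr, xh))
    return dt * (w_effort * effort + w_meet * meet)


def optimize(z, xr0, xh0, dt, iters=40, lr=0.4, eps=1e-5):
    """Gradient descent with central finite differences (illustrative only)."""
    z = list(z)
    for _ in range(iters):
        grad = []
        for i in range(len(z)):
            zp, zm = z[:], z[:]
            zp[i] += eps
            zm[i] -= eps
            diff = joint_cost(zp, xr0, xh0, dt) - joint_cost(zm, xr0, xh0, dt)
            grad.append(diff / (2 * eps))
        z = [zi - lr * gi for zi, gi in zip(z, grad)]
    return z


def run_mpc(xr=0.0, xh=1.0, horizon=10, dt=0.1, steps=30):
    """Receding-horizon loop: replan jointly, execute only the robot's first control."""
    z = [0.0] * (2 * horizon)
    gaps = [abs(xr - xh)]
    for _ in range(steps):
        z = optimize(z, xr, xh, dt)
        xr += dt * z[0]            # robot applies its first planned control
        # Assumption: the simulated human ignores the prediction and stays
        # still, so xh is unchanged and the next replan absorbs the error.
        gaps.append(abs(xr - xh))
        # Warm start: shift both the robot plan and the human prediction.
        z = z[1:horizon] + [0.0] + z[horizon + 1:] + [0.0]
    return gaps


if __name__ == "__main__":
    gaps = run_mpc()
    print("initial gap:", gaps[0], "final gap:", gaps[-1])
```

In this sketch the first joint plan has the predicted human moving toward the robot. Because the simulated human does not actually move, each replan starts from the observed state and the robot ends up closing the gap itself, which is the react-when-predictions-are-wrong behavior the abstract describes, reduced to its simplest form.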
