Towards Healing the Blindness of Score Matching

Paper and Code

Sep 15, 2022

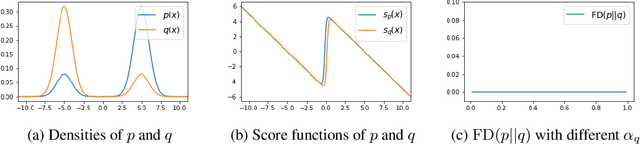

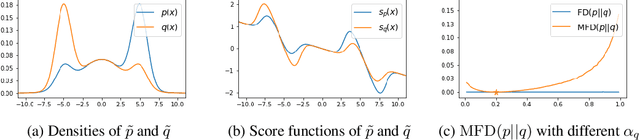

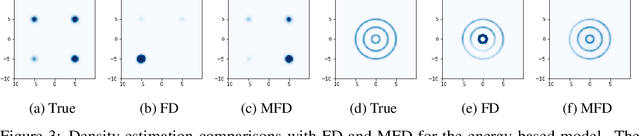

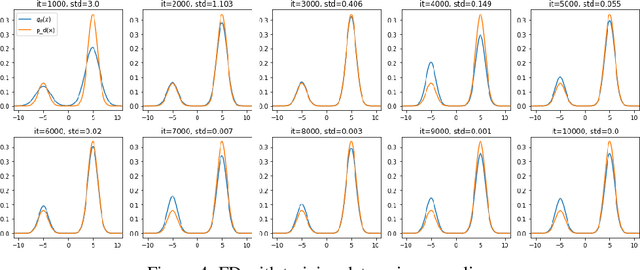

Score-based divergences have been widely used in machine learning and statistics applications. Despite their empirical success, a blindness problem has been observed when using these for multi-modal distributions. In this work, we discuss the blindness problem and propose a new family of divergences that can mitigate the blindness problem. We illustrate our proposed divergence in the context of density estimation and report improved performance compared to traditional approaches.

View paper on

OpenReview

OpenReview

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge