Towards Generalizable Neural Solvers for Vehicle Routing Problems via Ensemble with Transferrable Local Policy


Aug 27, 2023

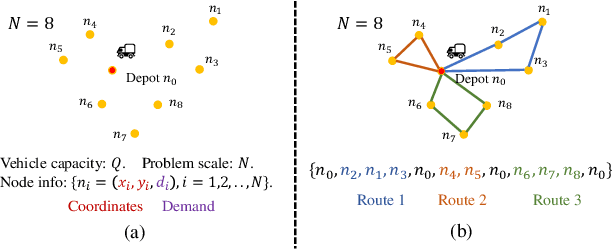

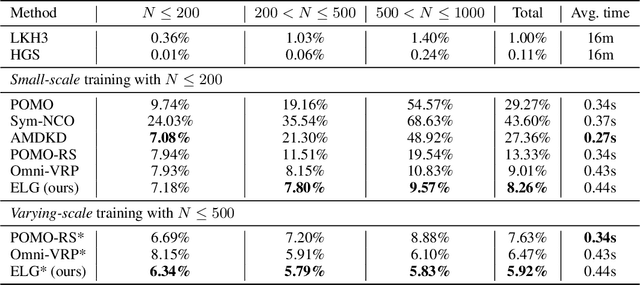

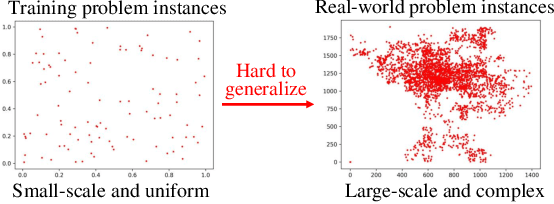

Machine learning has been adapted to help solve NP-hard combinatorial optimization problems. One prevalent approach is to learn to construct solutions with deep neural networks, which has attracted growing attention because of its high efficiency and low reliance on expert knowledge. However, many neural construction methods for Vehicle Routing Problems (VRPs) focus on synthetic problem instances of limited scale with specified node distributions, and therefore perform poorly on real-world problems, which usually involve large scales together with complex and unknown node distributions. To make neural VRP solvers more practical in real-world scenarios, we design an auxiliary policy, named the local policy, that learns from local transferable topological features, and integrate it with a typical constructive policy (which learns from the global information of VRP instances) to form an ensemble policy. With joint training, the aggregated policies act cooperatively and complementarily to boost generalization. Experimental results on two well-known benchmarks, TSPLIB for the travelling salesman problem and CVRPLIB for the capacitated VRP, show that the ensemble policy consistently generalizes better than state-of-the-art construction methods and even works well on real-world problems with several thousand nodes.
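The abstract does not specify how the two policies are aggregated, so the following is only a minimal sketch of one plausible reading: at each construction step, the scores of a global policy and a local policy are summed per candidate node and normalized over the unvisited nodes. Every name here (`ensemble_probs`, `local_scores_knn`, the parameter `k`) is hypothetical, and the distance-based local score is a hand-written stand-in for a learned network, not the authors' model.

```python
import math


def local_scores_knn(coords, current, visited, k=2):
    """Hypothetical stand-in for a learned local policy.

    Scores only the k nearest unvisited neighbours of the current node,
    using 1 / (1 + distance); every other node gets a score of 0.
    """
    cx, cy = coords[current]
    dists = sorted(
        (math.hypot(x - cx, y - cy), i)
        for i, (x, y) in enumerate(coords)
        if not visited[i]
    )
    scores = [0.0] * len(coords)
    for d, i in dists[:k]:
        scores[i] = 1.0 / (1.0 + d)
    return scores


def ensemble_probs(global_scores, local_scores, visited):
    """Sum the two policies' scores and apply a masked softmax.

    Visited nodes receive probability 0. Summing scores is an assumption
    made for illustration, not a rule stated in the abstract.
    """
    if all(visited):
        raise ValueError("no unvisited node left to choose from")
    combined = [
        -math.inf if v else g + l
        for g, l, v in zip(global_scores, local_scores, visited)
    ]
    top = max(combined)  # subtract the max for numerical stability
    exps = [math.exp(c - top) for c in combined]
    total = sum(exps)
    return [e / total for e in exps]


# Toy instance: four nodes, the tour currently sits at node 0.
coords = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0), (5.0, 5.0)]
visited = [True, False, False, False]
global_scores = [0.0, 0.0, 0.0, 0.0]  # an uninformative global policy
local = local_scores_knn(coords, current=0, visited=visited, k=2)
probs = ensemble_probs(global_scores, local, visited)
```

With an uninformative global policy, the local term alone shifts probability toward the nearest unvisited nodes. Because it depends only on a node's immediate neighbourhood, such a term is one way to picture a local policy carrying over to larger instances or unfamiliar node distributions.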
