Towards Context-Aware Emotion Recognition Debiasing from a Causal Demystification Perspective via De-confounded Training

Jul 06, 2024

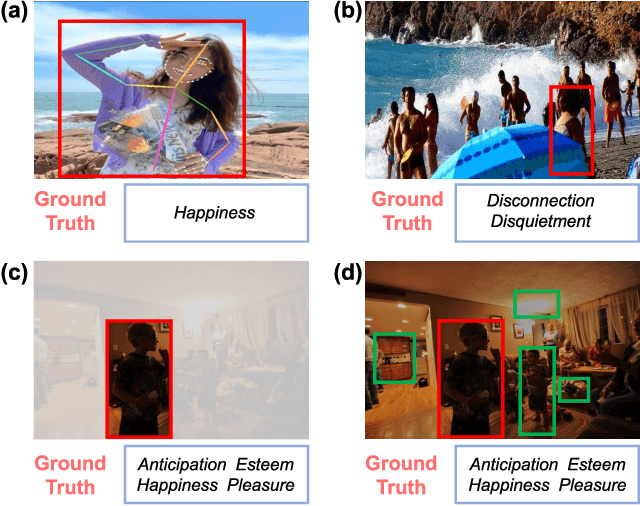

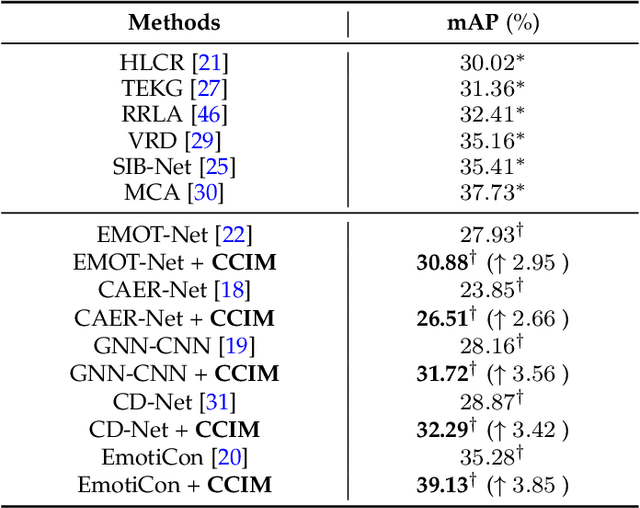

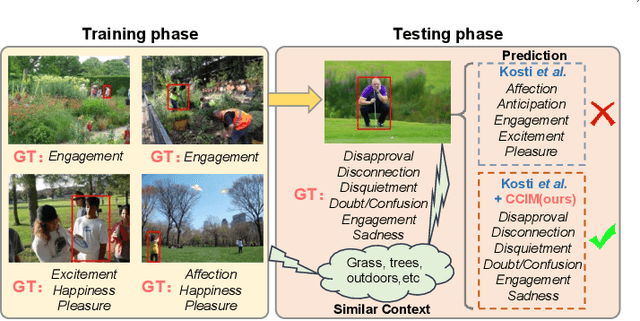

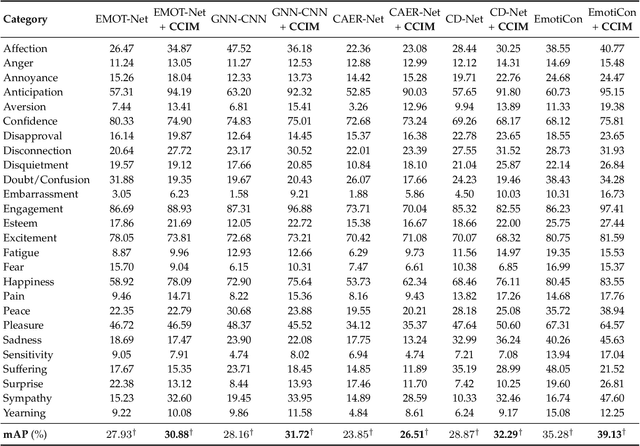

Understanding emotions from diverse contexts has received widespread attention in the computer vision community. The core idea of Context-Aware Emotion Recognition (CAER) is to leverage rich contextual information as semantic cues for recognizing the emotions of target persons. Current approaches focus predominantly on designing sophisticated structures to extract perceptually critical representations from contexts. However, a long-neglected problem is that severe context bias in existing datasets produces an unbalanced distribution of emotional states across contexts, which leads to biased visual representation learning. From a causal demystification perspective, this harmful bias acts as a confounder that misleads existing models into learning spurious correlations through likelihood estimation, limiting their performance. To address the issue, we use causal inference to disentangle the models from the impact of this bias, and we formulate the causal relations among variables in the CAER task via a customized causal graph. We then present a Contextual Causal Intervention Module (CCIM) that removes the confounding effect; it builds on backdoor adjustment theory so that the model approximates causal effects during training. As a plug-and-play component, CCIM can be integrated into existing approaches and brings significant improvements. Systematic experiments on three datasets demonstrate the effectiveness of CCIM.
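
For readers unfamiliar with backdoor adjustment, the general textbook form is sketched below. The symbols are assumptions made for illustration and are not taken from the abstract: $X$ stands for the input, $Y$ for the predicted emotion, and $Z$ for the context confounder. This is the standard identity, not necessarily the exact formulation that CCIM implements.

```latex
% Likelihood-based training: the confounder Z is weighted by P(z | X),
% so contexts that co-occur with X dominate the estimate.
P(Y \mid X) = \sum_{z} P(Y \mid X, Z = z)\, P(Z = z \mid X)

% Backdoor adjustment: intervening on X cuts the dependence of Z on X,
% so each context stratum is weighted by its prior P(z) instead.
P(Y \mid do(X)) = \sum_{z} P(Y \mid X, Z = z)\, P(Z = z)
```

The only difference between the two expressions is the weight on each stratum of $Z$: replacing $P(Z = z \mid X)$ with $P(Z = z)$ is what prevents a context that is over-represented for a given input from dominating the prediction.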