Towards Benchmarking and Evaluating Deepfake Detection


Mar 04, 2022

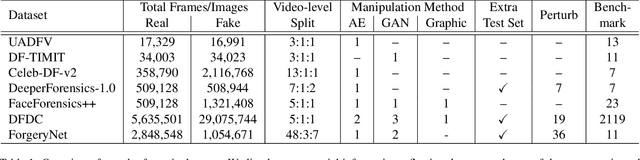

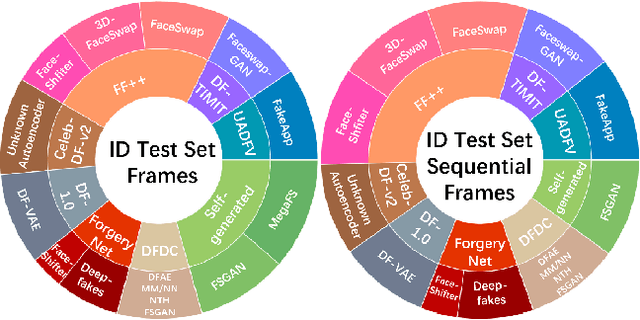

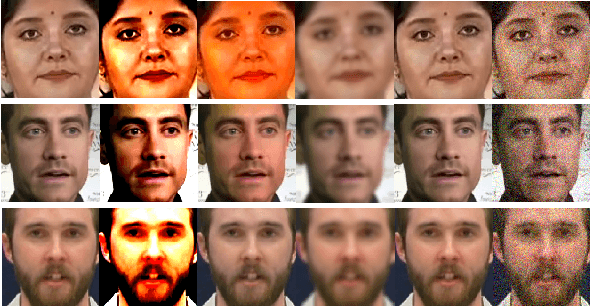

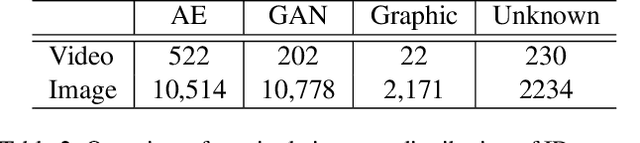

Deepfake detection automatically recognizes manipulated media by analyzing the differences between manipulated and unaltered videos. It is natural to ask which of the existing deepfake detection approaches perform best, both to identify promising research directions and to provide practical guidance. Unfortunately, it is difficult to conduct a sound benchmarking comparison of existing detection approaches using the results in the literature, because evaluation conditions are inconsistent across studies. Our objective is to establish a comprehensive and consistent benchmark, to develop a repeatable evaluation procedure, and to measure the performance of a range of detection approaches so that the results can be compared soundly. We have collected a challenging dataset consisting of manipulated samples generated by more than 13 different methods, and we have implemented 11 popular detection approaches (9 algorithms) from the existing literature and evaluated them with 6 fair-minded and practical evaluation metrics. In total, 92 models have been trained and 644 experiments have been performed for the evaluation. The results, along with the shared data and evaluation methodology, constitute a benchmark for comparing deepfake detection approaches and measuring progress.
