Toward Designing Convergent Deep Operator Splitting Methods for Task-specific Nonconvex Optimization

Paper and Code

Apr 28, 2018

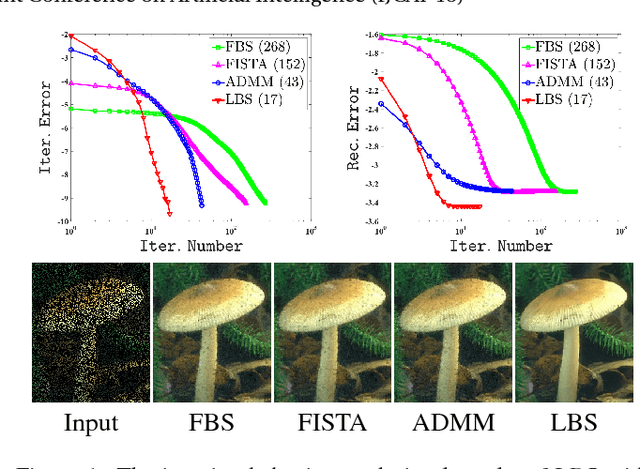

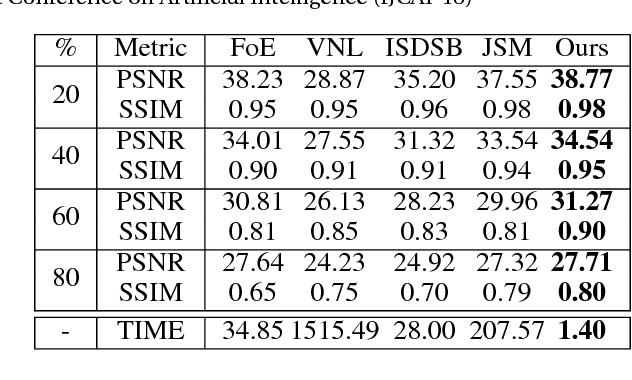

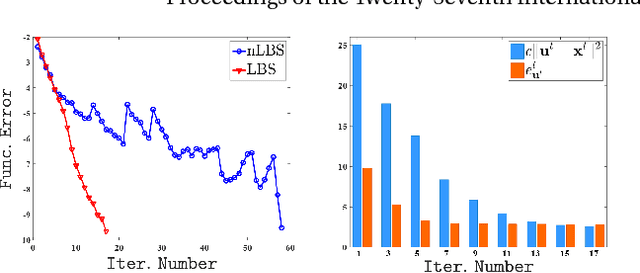

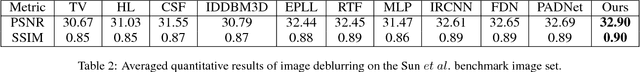

Operator splitting methods have been successfully used in computational sciences, statistics, learning, and vision to reduce complex problems to a series of simpler subproblems. However, prevalent splitting schemes are mostly derived solely from the mathematical properties of general optimization models. Obtaining practical, task-specific solutions is therefore laborious and often requires many iterations of ideation and validation, especially for nonconvex problems in real-world scenarios. To overcome these limits, we introduce a new algorithmic framework, called Learnable Bregman Splitting (LBS), which performs deep-architecture-based operator splitting for nonconvex optimization based on a specific task model. Thanks to its data-dependent (i.e., learnable) nature, LBS can not only speed up convergence but also avoid unwanted trivial solutions in real-world tasks. Even with inexact deep iterations, we can still establish global convergence and estimate the asymptotic convergence rate of LBS under fairly loose assumptions. Extensive experiments on different applications (e.g., image completion and deblurring) verify our theoretical results and show the superiority of LBS over existing methods.
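
The abstract does not spell out the LBS iteration itself. As background only, the following is a minimal sketch of a classical, non-learned splitting scheme: forward-backward splitting applied to the convex problem min_x 0.5*||Ax - b||^2 + lam*||x||_1. It illustrates how a composite objective is reduced to two simpler subproblems (a gradient step on the smooth term, then a proximal step on the nonsmooth term). It is not the authors' method, and every name in it (`soft_threshold`, `forward_backward`, `step`, `lam`) is a hypothetical choice made for illustration.

```python
def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1, applied elementwise."""
    return [max(abs(x) - t, 0.0) * (1.0 if x > 0 else -1.0) for x in v]


def matvec(A, x):
    """Multiply a matrix (sequence of rows) by a vector."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


def objective(A, b, lam, x):
    """Evaluate 0.5 * ||Ax - b||^2 + lam * ||x||_1."""
    residual = [ri - bi for ri, bi in zip(matvec(A, x), b)]
    return 0.5 * sum(r * r for r in residual) + lam * sum(abs(xi) for xi in x)


def forward_backward(A, b, lam, step, iters=200):
    """Classical forward-backward splitting (illustrative, not LBS).

    Assumes step <= 1 / L, where L is the largest eigenvalue of A^T A.
    """
    x = [0.0] * len(A[0])
    At = list(zip(*A))  # transpose of A
    for _ in range(iters):
        # Forward (explicit) step: gradient of the smooth least-squares term.
        residual = [ri - bi for ri, bi in zip(matvec(A, x), b)]
        grad = matvec(At, residual)
        forward = [xi - step * gi for xi, gi in zip(x, grad)]
        # Backward (implicit) step: proximal map of the l1 term.
        x = soft_threshold(forward, step * lam)
    return x


if __name__ == "__main__":
    A = [[2.0, 0.0], [0.0, 1.0]]
    b = [2.0, 0.5]
    x = forward_backward(A, b, lam=0.5, step=0.25)
    print(x, objective(A, b, 0.5, x))
```

Each subproblem here is cheap: the forward step needs only matrix-vector products, and the backward step has a closed form. According to the abstract, LBS makes the splitting data-dependent through deep architectures; this sketch keeps every step fixed and hand-designed.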
