Texture Synthesis with Recurrent Variational Auto-Encoder


Dec 23, 2017

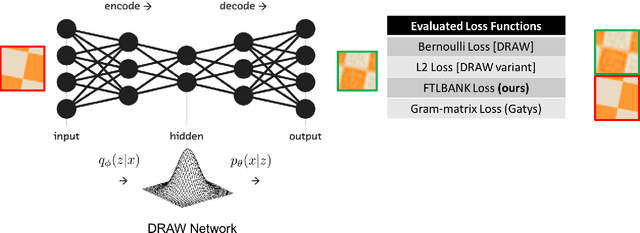

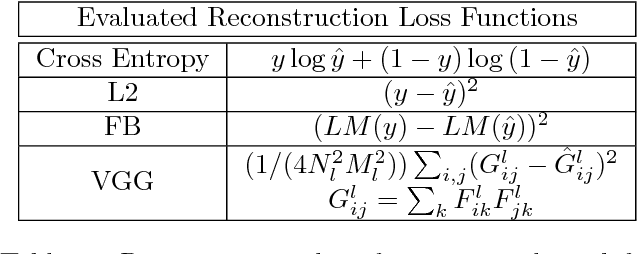

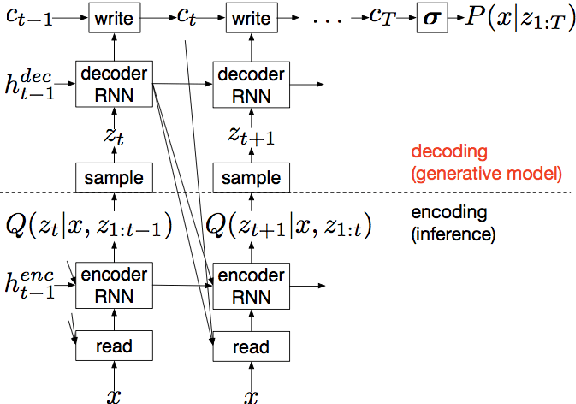

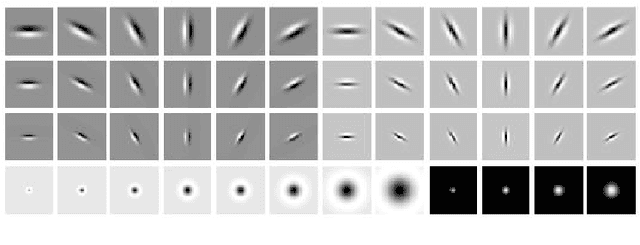

We propose a recurrent variational auto-encoder for texture synthesis. The texture synthesizer is trained with a novel loss function, FLTBNK, which is rotation invariant and partially color invariant. Unlike the L2 loss, FLTBNK explicitly models the correlation of color intensity between pixels. Our texture synthesizer generates neighboring tiles to expand a sample texture and is evaluated on various texture patterns from the Describable Textures Dataset (DTD). We perform both quantitative and qualitative experiments with various loss functions to evaluate the performance of FLTBNK; the qualitative evaluation uses a small human-subject study.
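To illustrate the contrast with the L2 loss, the sketch below shows that a per-pixel L2 loss does not change when the pixel positions of both images are rearranged in the same way, so it carries no information about how neighboring pixels relate. The abstract does not define FLTBNK, so the second function, `neighbor_diff_loss`, is a hypothetical stand-in chosen only to show a loss that does depend on neighboring pixels; it is not the authors' loss. The 1-D "texture" rows and the permutation are likewise made-up values.

```python
def l2_loss(a, b):
    """Mean squared error between two equally sized lists of pixel intensities."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def neighbor_diff_loss(a, b):
    """Hypothetical stand-in (NOT FLTBNK): L2 loss on differences between adjacent pixels."""
    da = [a[i + 1] - a[i] for i in range(len(a) - 1)]
    db = [b[i + 1] - b[i] for i in range(len(b) - 1)]
    return l2_loss(da, db)


# A made-up 1-D striped "texture" row and an imperfect reconstruction of it.
target = [0.0, 1.0, 0.0, 1.0, 0.0, 1.0, 0.0, 1.0]
output = [0.1, 0.9, 0.1, 0.9, 0.5, 0.5, 0.5, 0.5]

# Rearrange pixel positions identically in both rows (even indices first).
perm = [0, 2, 4, 6, 1, 3, 5, 7]
target_shuffled = [target[i] for i in perm]
output_shuffled = [output[i] for i in perm]

# Per-pixel L2 sums the same squared errors in a different order.
l2_before = l2_loss(target, output)
l2_after = l2_loss(target_shuffled, output_shuffled)

# The neighbor-based loss sees a different spatial arrangement.
nd_before = neighbor_diff_loss(target, output)
nd_after = neighbor_diff_loss(target_shuffled, output_shuffled)

print("L2 before/after shuffle:", l2_before, l2_after)
print("neighbor-difference before/after shuffle:", nd_before, nd_after)
```

The L2 values agree up to floating-point rounding, while the neighbor-difference values do not, which is the sense in which a plain L2 loss is blind to spatial structure in a texture.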
