Testing the Safety of Self-driving Vehicles by Simulating Perception and Prediction


Aug 13, 2020

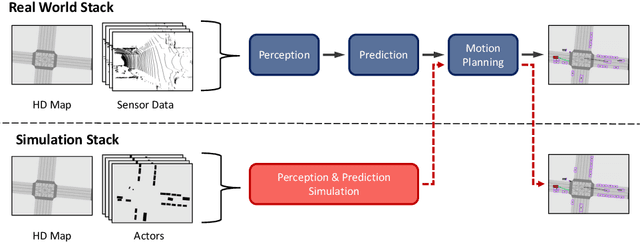

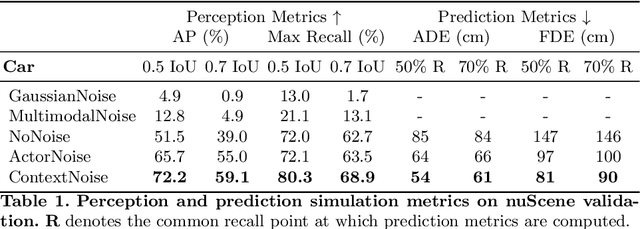

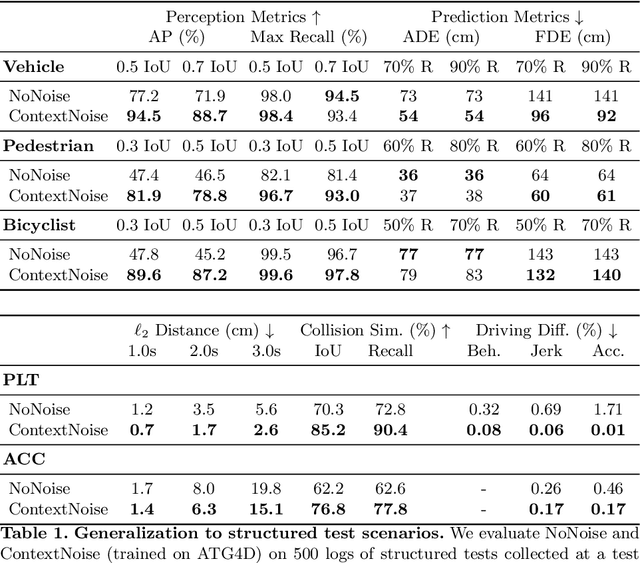

We present a novel method for testing the safety of self-driving vehicles in simulation. We propose an alternative to sensor simulation, which is expensive and suffers from large domain gaps. Instead, we directly simulate the outputs of the self-driving vehicle's perception and prediction system, enabling realistic testing of motion planning. Specifically, we use paired data, consisting of ground truth labels and the corresponding real perception and prediction outputs, to train a model that predicts what the online system will produce. Importantly, the inputs to our system consist of high-definition maps, bounding boxes, and trajectories, which a test engineer can sketch in a matter of minutes. Because no sensor data has to be rendered, our approach scales much better than sensor simulation. Quantitative results on two large-scale datasets demonstrate that we can realistically test motion planning using our simulations.
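To make the paired-data idea concrete, the following is a minimal sketch and not the model described in the paper. It assumes a deliberately simplified setting: each object is reduced to a box centre, and the "simulator" is a fitted miss rate plus Gaussian position noise, standing in for the learned model. All names (`NoiseModel`, `fit_noise_model`, `simulate_perception`) are hypothetical.

```python
import random
import statistics
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Assumption: a box is reduced to its centre (x, y) in metres.
Box = Tuple[float, float]


@dataclass
class NoiseModel:
    """Toy stand-in for a learned perception simulator."""
    miss_rate: float  # fraction of ground-truth boxes the real system missed
    bias_x: float     # mean localisation error along x
    bias_y: float     # mean localisation error along y
    std_x: float      # spread of the localisation error along x
    std_y: float      # spread of the localisation error along y


def fit_noise_model(pairs: List[Tuple[Box, Optional[Box]]]) -> NoiseModel:
    """Fit the toy model from paired data.

    Each pair is (ground-truth box, real perception output), where the
    output is None if the real system missed the object.
    """
    errors = [(det[0] - gt[0], det[1] - gt[1])
              for gt, det in pairs if det is not None]
    if len(errors) < 2:
        raise ValueError("need at least two detected pairs to estimate spread")
    dx = [e[0] for e in errors]
    dy = [e[1] for e in errors]
    return NoiseModel(
        miss_rate=1.0 - len(errors) / len(pairs),
        bias_x=statistics.mean(dx),
        bias_y=statistics.mean(dy),
        std_x=statistics.stdev(dx),
        std_y=statistics.stdev(dy),
    )


def simulate_perception(model: NoiseModel,
                        scenario: List[Box],
                        rng: random.Random) -> List[Optional[Box]]:
    """Turn hand-sketched ground-truth boxes into simulated perception outputs."""
    outputs: List[Optional[Box]] = []
    for x, y in scenario:
        if rng.random() < model.miss_rate:
            outputs.append(None)  # simulated missed detection
        else:
            outputs.append((x + rng.gauss(model.bias_x, model.std_x),
                            y + rng.gauss(model.bias_y, model.std_y)))
    return outputs


# Hypothetical paired data: (ground truth, real output or None).
pairs = [((0.0, 0.0), (0.1, -0.1)),
         ((1.0, 1.0), (1.3, 0.9)),
         ((2.0, 2.0), None),
         ((3.0, 3.0), (3.2, 2.9))]
model = fit_noise_model(pairs)
simulated = simulate_perception(model, [(5.0, 5.0), (10.0, 2.0)], random.Random(0))
```

The simulated outputs, rather than the clean ground truth, would then be handed to the motion planner under test, so that the planner is exercised against errors resembling those of the real perception system.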
