Test-time Unsupervised Domain Adaptation


Oct 05, 2020

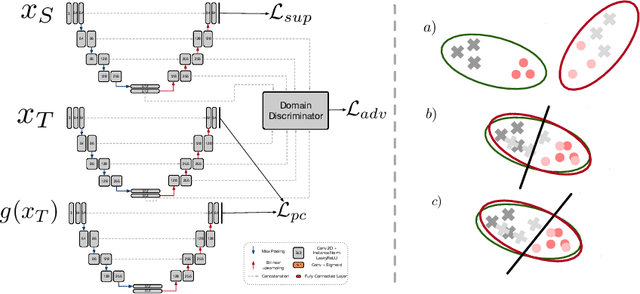

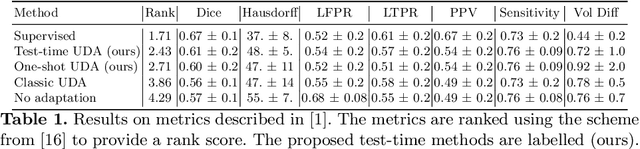

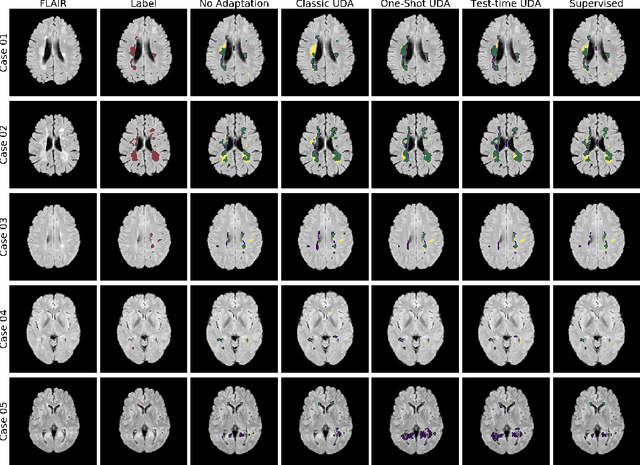

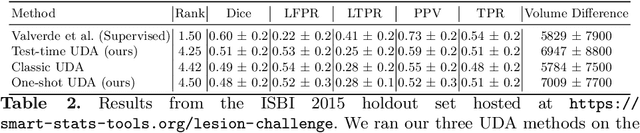

Convolutional neural networks trained on publicly available medical imaging datasets (source domain) rarely generalise to different scanners or acquisition protocols (target domain). This motivates the active field of domain adaptation. While some approaches require labelled data from the target domain, others adapt without target labels (unsupervised domain adaptation, UDA). UDA methods are usually evaluated by measuring the model's ability to generalise to unseen data in the target domain. In this work, we argue that this is less useful than adapting to the test set directly. We therefore propose an evaluation framework in which we perform test-time UDA on each subject separately. We show that models adapted to a specific subject from the target domain outperform a domain adaptation method that has seen more target-domain data but not this specific subject. This result supports the thesis that unsupervised domain adaptation should be used at test time, even when only a single target-domain subject is available.
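The per-subject evaluation protocol described above can be illustrated with a small toy sketch. Everything in it is an assumption made for illustration and not the paper's method: the one-dimensional `ThresholdModel`, the synthetic subjects from `make_subject`, and the `adapt` step, which simply re-estimates input normalisation statistics from the subject's unlabelled data as a stand-in for a real UDA method. The point is only the structure of the loop: each test subject gets its own adapted copy of the source model, and labels are used for scoring only.

```python
import copy
import random
import statistics


class ThresholdModel:
    """Toy 1-D classifier (hypothetical): standardise the input, threshold at zero."""

    def __init__(self, mean, std):
        self.mean, self.std = mean, std

    def predict(self, xs):
        return [1 if (x - self.mean) / self.std > 0 else 0 for x in xs]


def adapt(model, xs):
    """Stand-in UDA step (assumption): re-estimate normalisation statistics
    from one subject's unlabelled data. The source model is left untouched."""
    adapted = copy.deepcopy(model)
    adapted.mean = statistics.fmean(xs)
    adapted.std = statistics.pstdev(xs) or 1.0  # guard against zero spread
    return adapted


def accuracy(model, xs, ys):
    preds = model.predict(xs)
    return sum(p == y for p, y in zip(preds, ys)) / len(ys)


def make_subject(rng, shift, n=200):
    """Synthetic subject: two classes at -1 and +1, moved by a subject-specific shift."""
    ys = [rng.randint(0, 1) for _ in range(n)]
    xs = [shift + (1.0 if y else -1.0) + rng.gauss(0, 0.3) for y in ys]
    return xs, ys


def evaluate_test_time_uda(source_model, subjects):
    """Adapt a fresh copy of the source model to each subject, then score it
    on that same subject. Labels are used only for scoring, never for adaptation."""
    scores = []
    for xs, ys in subjects:
        adapted = adapt(source_model, xs)
        scores.append(accuracy(adapted, xs, ys))
    return scores


rng = random.Random(0)
source_model = ThresholdModel(mean=0.0, std=1.0)  # fitted to an unshifted source domain
subjects = [make_subject(rng, shift) for shift in (3.0, -2.5, 4.0)]

baseline = [accuracy(source_model, xs, ys) for xs, ys in subjects]  # no adaptation
adapted = evaluate_test_time_uda(source_model, subjects)
print("baseline:", baseline)
print("adapted: ", adapted)
```

In this toy setting the unadapted source model assigns every sample of a shifted subject to one class, while the per-subject adapted copies recover the class boundary. This illustrates the evaluation loop only and says nothing about the results reported in the paper.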
