Temporarily-Aware Context Modelling using Generative Adversarial Networks for Speech Activity Detection

Paper and Code

Apr 02, 2020

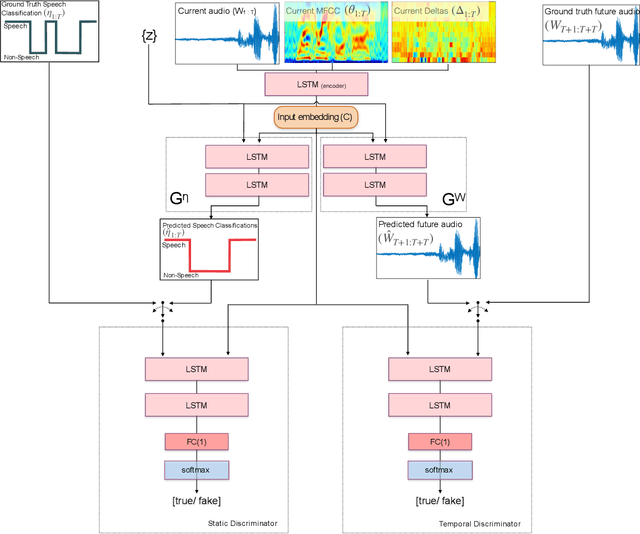

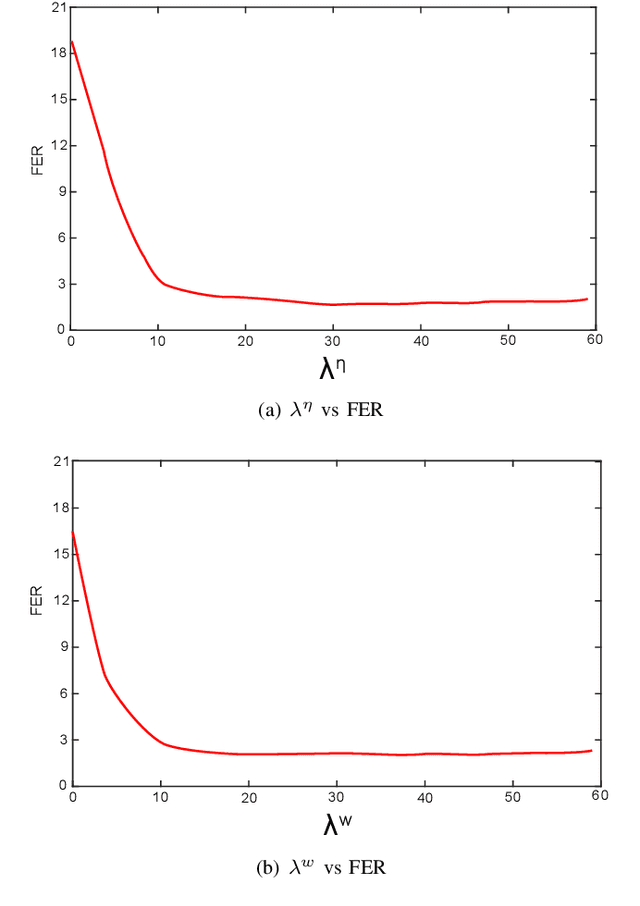

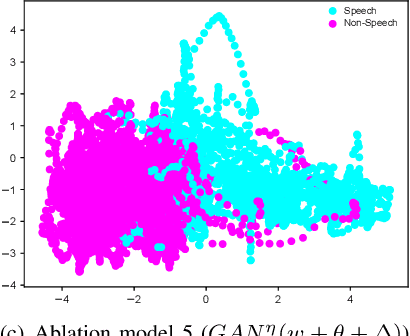

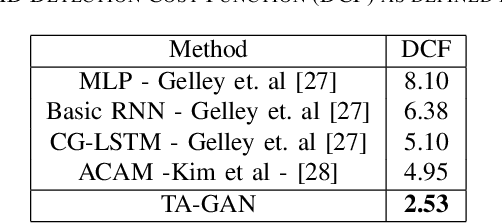

This paper presents a novel framework for Speech Activity Detection (SAD). Inspired by the recent success of multi-task learning approaches in the speech processing domain, we propose a novel joint learning framework for SAD. We utilise generative adversarial networks to automatically learn a loss function for joint prediction of the frame-wise speech/ non-speech classifications together with the next audio segment. In order to exploit the temporal relationships within the input signal, we propose a temporal discriminator which aims to ensure that the predicted signal is temporally consistent. We evaluate the proposed framework on multiple public benchmarks, including NIST OpenSAT' 17, AMI Meeting and HAVIC, where we demonstrate its capability to outperform state-of-the-art SAD approaches. Furthermore, our cross-database evaluations demonstrate the robustness of the proposed approach across different languages, accents, and acoustic environments.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge