SynthSeg: Domain Randomisation for Segmentation of Brain MRI Scans of any Contrast and Resolution


Jul 20, 2021

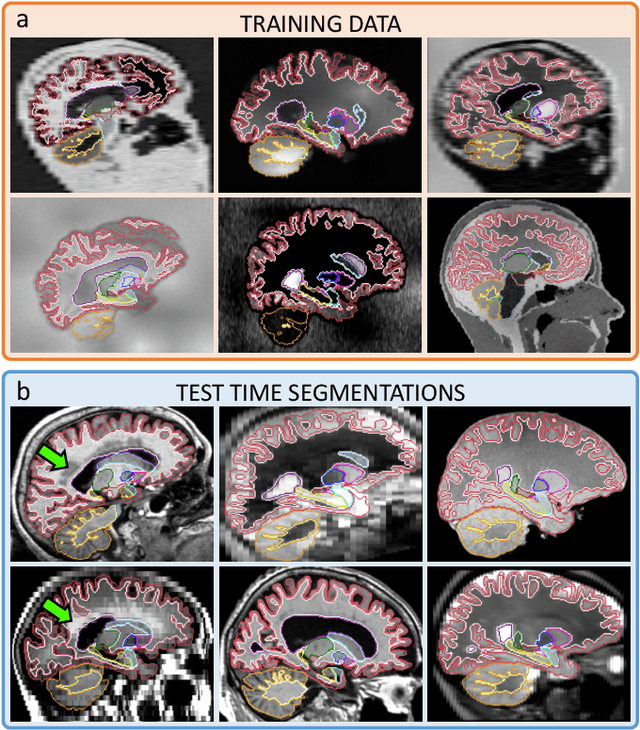

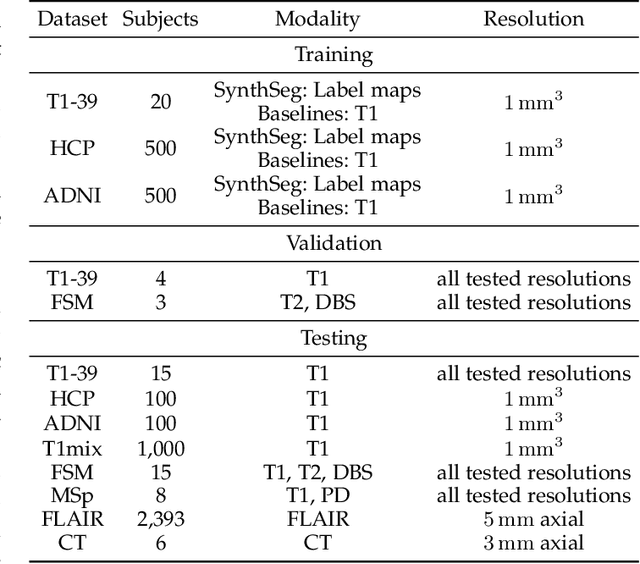

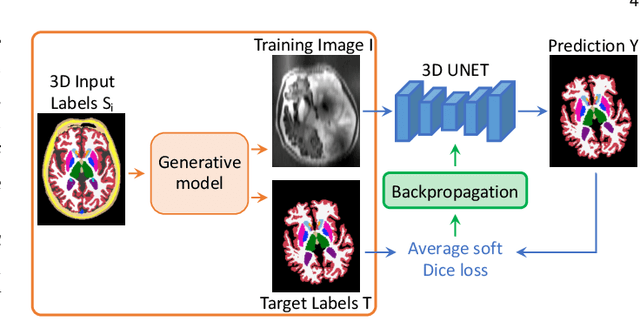

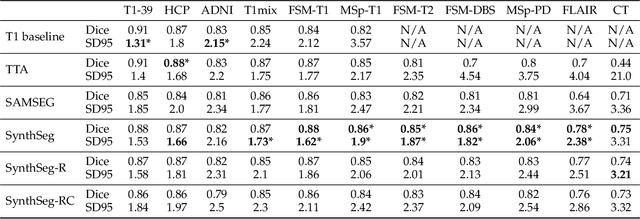

Despite advances in data augmentation and transfer learning, convolutional neural networks (CNNs) have difficulty generalising to unseen target domains. When applied to the segmentation of brain MRI scans, CNNs are highly sensitive to changes in resolution and contrast: even within the same MR modality, performance can drop from one dataset to another. We introduce SynthSeg, the first segmentation CNN that is agnostic to the contrast and resolution of brain MRI scans. SynthSeg is trained with synthetic data sampled from a generative model inspired by Bayesian segmentation. Crucially, we adopt a *domain randomisation* strategy in which we fully randomise the generation parameters to maximise the variability of the training data. Consequently, SynthSeg can segment preprocessed and unpreprocessed real scans from any target domain, without retraining or fine-tuning. Because training SynthSeg requires only segmentations (no images), it can learn from label maps obtained automatically from existing datasets of different populations (e.g., with atrophy and lesions), thus achieving robustness to a wide range of morphological variability. We demonstrate SynthSeg on 5,500 scans of 6 modalities and 10 resolutions, where it exhibits unparalleled generalisation compared to supervised CNNs, test-time adaptation, and Bayesian segmentation. The code and trained model are available at https://github.com/BBillot/SynthSeg.
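To make the domain randomisation idea concrete, the following is a minimal sketch of how a synthetic training image might be sampled from a label map alone. It is not the SynthSeg implementation: the function name `synth_image_from_labels`, the uniform intensity ranges, and the crude slice-skipping used to mimic a random resolution are all assumptions chosen for illustration. The only idea taken from the abstract is that the image is generated from a segmentation, with generation parameters drawn at random for every sample.

```python
import numpy as np


def synth_image_from_labels(labels, rng, mean_range=(0.0, 255.0),
                            std_range=(0.0, 35.0), max_factor=3):
    """Sample one synthetic image from an integer label map.

    Hypothetical illustration: ranges and the resolution model are assumed.
    """
    label_values = np.unique(labels)

    # Random contrast: draw an independent Gaussian (mean, std) for each label,
    # so every call produces an image with a different, arbitrary contrast.
    means = rng.uniform(*mean_range, size=label_values.size)
    stds = rng.uniform(*std_range, size=label_values.size)

    # Index of each voxel's label within label_values, then sample intensities.
    idx = np.searchsorted(label_values, labels)
    image = rng.normal(means[idx], stds[idx])

    # Random resolution (crude stand-in): keep every `factor`-th slice along
    # axis 0, then repeat slices to return to the original grid size.
    factor = int(rng.integers(1, max_factor + 1))
    low_res = image[::factor]
    image = np.repeat(low_res, factor, axis=0)[: labels.shape[0]]

    # Min-max normalise intensities to [0, 1).
    lo, hi = image.min(), image.max()
    return (image - lo) / (hi - lo + 1e-8)


# Usage: a toy 3-label volume; each call yields a new contrast and resolution,
# while the label map itself remains the training target.
rng = np.random.default_rng(0)
labels = np.zeros((8, 8, 8), dtype=int)
labels[2:6, 2:6, 2:6] = 1
labels[3:5, 3:5, 3:5] = 2
image = synth_image_from_labels(labels, rng)
```

In this sketch a segmentation network would be trained on pairs `(image, labels)`, with a fresh `image` sampled at every step, so it never sees a fixed contrast or resolution.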
