Spatio-Temporal-Decoupled Masked Pre-training for Traffic Forecasting

Paper and Code

Dec 01, 2023

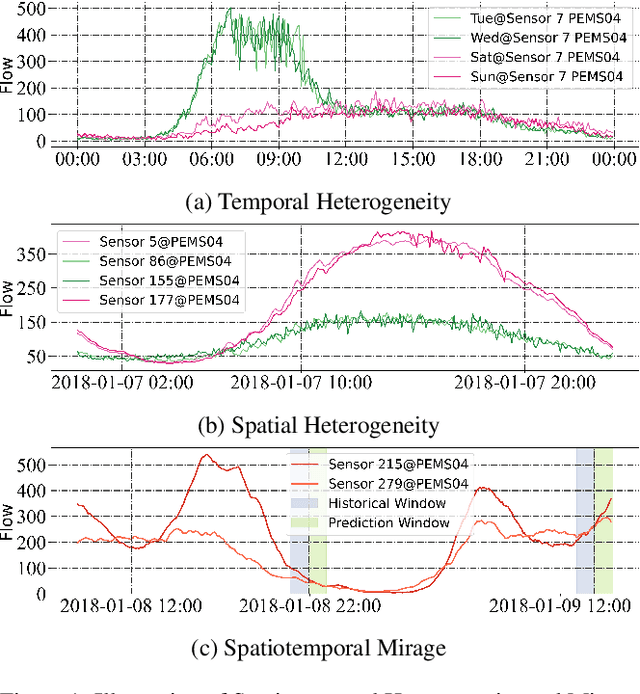

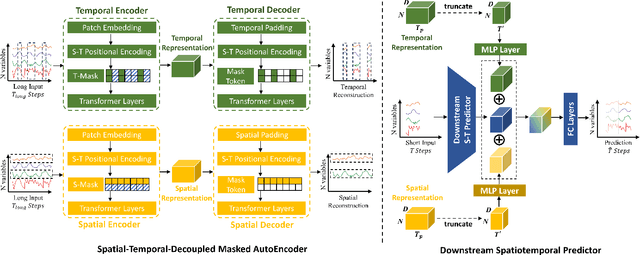

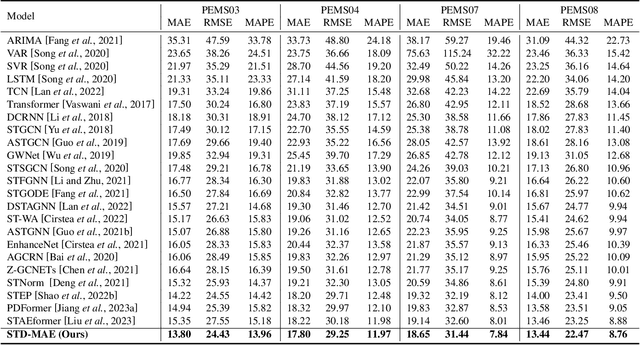

Accurate forecasting of multivariate traffic flow time series remains challenging due to substantial spatio-temporal heterogeneity and complex long-range correlative patterns. To address this, we propose Spatio-Temporal-Decoupled Masked Pre-training (STD-MAE), a novel framework that employs masked autoencoders to learn and encode complex spatio-temporal dependencies via pre-training. Specifically, we use two decoupled masked autoencoders to reconstruct the traffic data along spatial and temporal axes using a self-supervised pre-training approach. These mask reconstruction mechanisms capture the long-range correlations in space and time separately. The learned hidden representations are then used to augment the downstream spatio-temporal traffic predictor. A series of quantitative and qualitative evaluations on four widely-used traffic benchmarks (PEMS03, PEMS04, PEMS07, and PEMS08) are conducted to verify the state-of-the-art performance, with STD-MAE explicitly enhancing the downstream spatio-temporal models' ability to capture long-range intricate spatial and temporal patterns. Codes are available at https://github.com/Jimmy-7664/STD_MAE.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge