Sparse Visual Counterfactual Explanations in Image Space

May 16, 2022

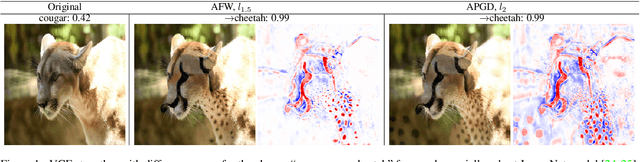

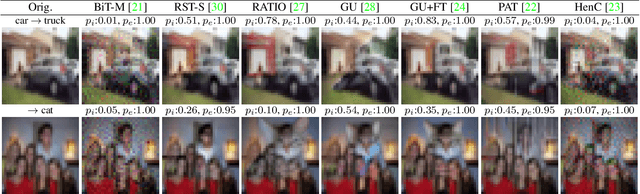

Visual counterfactual explanations (VCEs) in image space are an important tool for understanding the decisions of image classifiers, because they show which changes to an image would change the classifier's decision. Generating them in image space is challenging and requires robust models because of the problem of adversarial examples. Existing techniques for generating VCEs in image space suffer from spurious changes in the background. Our novel perturbation model for VCEs, together with its efficient optimization via our novel Auto-Frank-Wolfe scheme, yields sparse VCEs that are significantly more object-centric. Moreover, we show that VCEs can be used to detect undesired behavior of ImageNet classifiers caused by spurious features in the ImageNet dataset, and we discuss how estimates of the data-generating distribution can be used for VCEs.
