Skeleton Driven Non-rigid Motion Tracking and 3D Reconstruction

Paper and Code

Oct 09, 2018

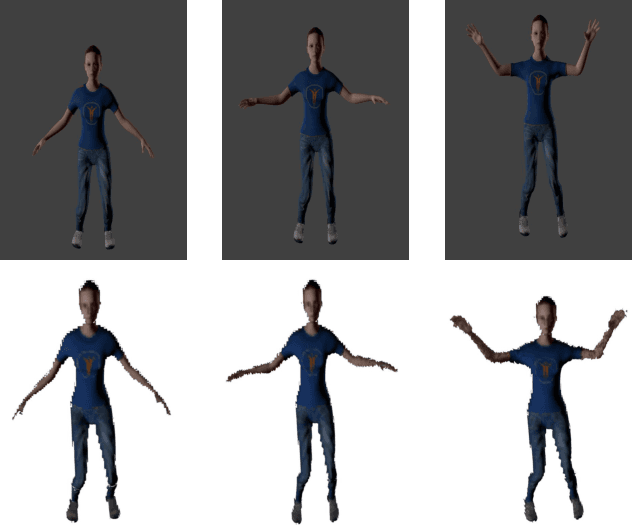

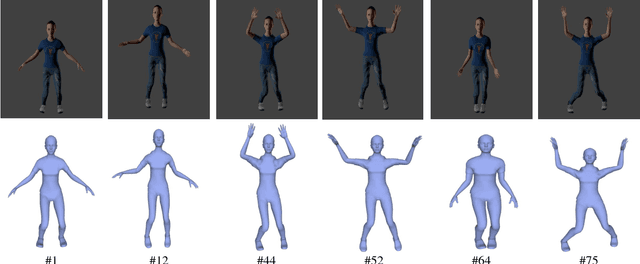

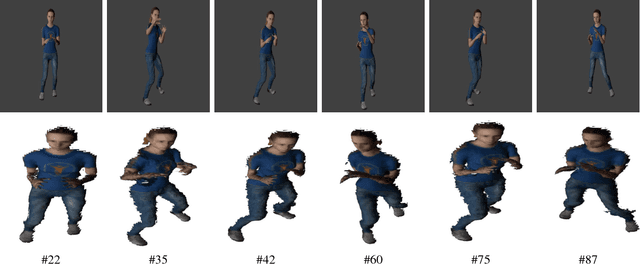

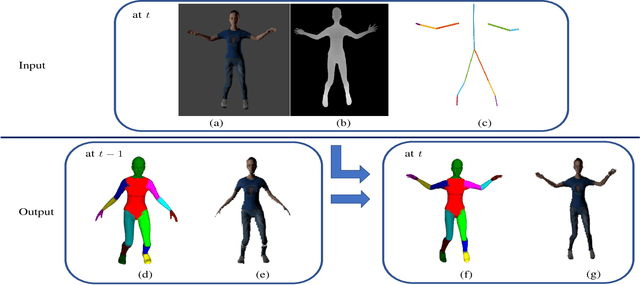

This paper presents a method for tracking and reconstructing in 3D the non-rigid surface motion of human performance using a moving RGB-D camera. Markerless 3D reconstruction of human performance is challenging because of the large range of articulated motions and considerable non-rigid deformations. Current approaches track with local optimization, which needs many iterations to converge and may get stuck in local minima during sudden articulated movements. We propose a puppet-model-based tracking approach that uses a skeleton prior to provide a better initialization for tracking articulated movements. The approach uses the aligned puppet model to estimate correct correspondences for human performance capture. We also contribute a synthetic dataset that provides frame-by-frame ground truth for the geometry and skeleton joints of human subjects. Experimental results show that our approach is more robust to sudden articulated motions and yields better 3D reconstruction than existing state-of-the-art approaches.
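The abstract does not spell out how the skeleton prior drives the puppet model, so the following is only an illustrative sketch, not the authors' implementation. It assumes linear blend skinning, a standard way to pose a surface from joint transforms: each puppet vertex is moved by a weighted blend of per-joint rigid rotations, and the posed vertices could then serve as the starting point for a local non-rigid optimizer. All names here (`rotation_z`, `apply_joint`, `skin_vertices`) and the toy two-joint setup are hypothetical.

```python
import math


def rotation_z(angle_rad):
    """Return a 3x3 rotation matrix about the z axis (toy joint rotation)."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def apply_joint(rotation, pivot, point):
    """Rotate `point` about `pivot` using the 3x3 matrix `rotation`."""
    local = [point[i] - pivot[i] for i in range(3)]
    rotated = [sum(rotation[r][c] * local[c] for c in range(3)) for r in range(3)]
    return [rotated[i] + pivot[i] for i in range(3)]


def skin_vertices(vertices, weights, joint_rotations, joint_pivots):
    """Linear blend skinning (assumed here for illustration).

    Each vertex becomes the weighted sum of its positions under every
    joint's rigid transform; weights[v][j] is the influence of joint j
    on vertex v and each row is expected to sum to 1.
    """
    warped = []
    for vertex, vertex_weights in zip(vertices, weights):
        blended = [0.0, 0.0, 0.0]
        for j, w in enumerate(vertex_weights):
            if w == 0.0:
                continue  # joint j has no influence on this vertex
            moved = apply_joint(joint_rotations[j], joint_pivots[j], vertex)
            for i in range(3):
                blended[i] += w * moved[i]
        warped.append(blended)
    return warped


# Toy example: joint 0 stays fixed, joint 1 bends 90 degrees about (1, 0, 0).
identity = rotation_z(0.0)
bend = rotation_z(math.pi / 2)
puppet_vertices = [[0.5, 0.0, 0.0], [2.0, 0.0, 0.0]]
skin_weights = [[1.0, 0.0], [0.0, 1.0]]
initial_pose = skin_vertices(
    puppet_vertices,
    skin_weights,
    [identity, bend],
    [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]],
)
```

In this sketch a sudden joint rotation is absorbed by the skeleton transform, so `initial_pose` already lies near the moved surface before any local refinement, which is the intuition behind using a skeleton prior for initialization.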
