Shift-and-Balance Attention


Mar 24, 2021

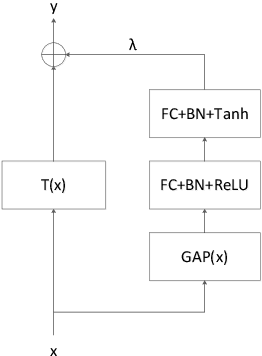

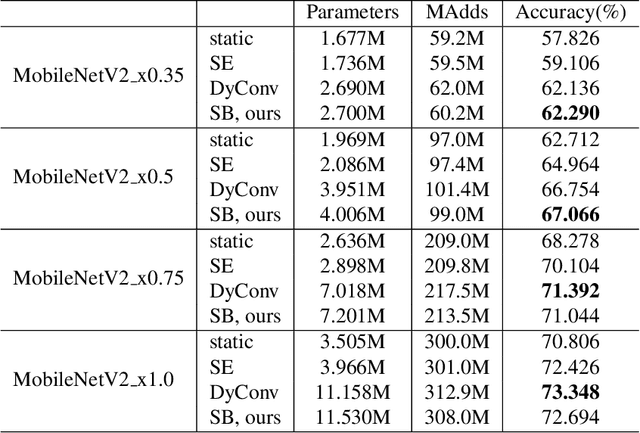

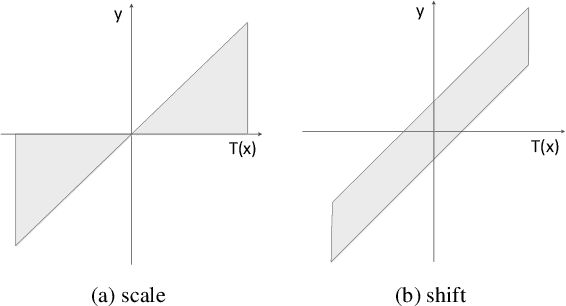

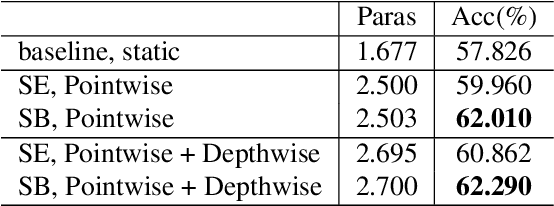

Attention is an effective mechanism for improving the capability of deep models. Squeeze-and-Excite (SE) introduces a lightweight attention branch to enhance the network's representational power. The attention branch is gated with the sigmoid function and multiplied with the feature map's trunk branch. This multiplicative gating is too sensitive to coordinate and balance the contributions of the trunk and attention branches. To control the attention branch's influence, we propose a new attention method called Shift-and-Balance (SB). Unlike Squeeze-and-Excite, the attention branch is regulated by a learned control factor that sets the balance, and is then added to the feature map's trunk branch. Experiments show that Shift-and-Balance attention significantly improves accuracy over Squeeze-and-Excite when it is applied in more layers, increasing the size and capacity of the network. Moreover, Shift-and-Balance attention achieves better or comparable accuracy relative to the state-of-the-art Dynamic Convolution.
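The contrast between the two branches can be made concrete with a minimal, dependency-free sketch. It is illustrative only and is not the authors' implementation. The abstract states only that SE multiplies a sigmoid gate into the trunk, while SB scales the attention branch by a learned control factor and adds it to the trunk. Everything else here is an assumption: the two-layer branch, the `tanh` bounding, the per-channel form of `control`, and all function and parameter names.

```python
import math
import random

def squeeze(x):
    """Global average pool: one scalar per channel. x is [channels][positions]."""
    return [sum(ch) / len(ch) for ch in x]

def dense(v, w, b):
    """Fully connected layer: w is [out][in], b is [out]."""
    return [sum(wi * vi for wi, vi in zip(row, v)) + bi for row, bi in zip(w, b)]

def branch_logits(x, params):
    """Light-weight attention branch shared by both variants: squeeze -> FC -> ReLU -> FC."""
    hidden = [max(0.0, h) for h in dense(squeeze(x), params["w1"], params["b1"])]
    return dense(hidden, params["w2"], params["b2"])

def se_attention(x, params):
    """Squeeze-and-Excite: a sigmoid gate per channel, multiplied into the trunk."""
    gates = [1.0 / (1.0 + math.exp(-z)) for z in branch_logits(x, params)]
    return [[g * v for v in ch] for g, ch in zip(gates, x)]

def sb_attention(x, params, control):
    """Shift-and-Balance sketch: branch output scaled by a control factor, added to the trunk.

    Assumption: tanh bounds the branch output and `control` holds one scalar per
    channel; in a real network `control` would be a trained parameter.
    """
    shifts = [c * math.tanh(z) for c, z in zip(control, branch_logits(x, params))]
    return [[v + s for v in ch] for s, ch in zip(shifts, x)]

def init_params(channels, reduced, seed=0):
    """Hypothetical helper: small random weights for the two FC layers."""
    rng = random.Random(seed)
    return {
        "w1": [[rng.uniform(-0.5, 0.5) for _ in range(channels)] for _ in range(reduced)],
        "b1": [0.0] * reduced,
        "w2": [[rng.uniform(-0.5, 0.5) for _ in range(reduced)] for _ in range(channels)],
        "b2": [0.0] * channels,
    }

if __name__ == "__main__":
    # Four channels, three spatial positions each (flattened).
    x = [[1.0, 2.0, 3.0], [-1.0, 0.5, 2.0], [0.0, 0.0, 4.0], [2.0, 2.0, 2.0]]
    params = init_params(channels=4, reduced=2)
    print(se_attention(x, params))
    print(sb_attention(x, params, control=[0.1] * 4))
```

Under these assumptions, the additive form bounds each channel's shift by the magnitude of its control factor, and a control factor of zero leaves the trunk unchanged. The multiplicative SE gate instead rescales every activation in the channel.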
