Sequence-level Semantic Representation Fusion for Recommender Systems

Paper and Code

Feb 28, 2024

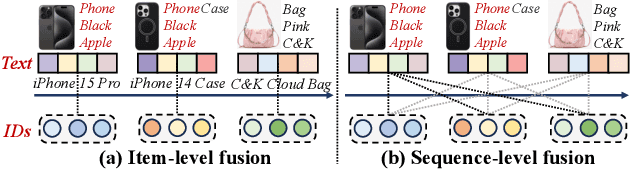

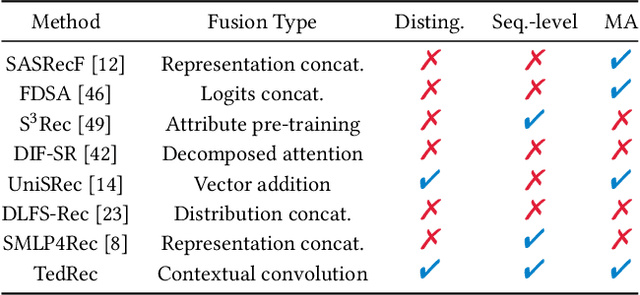

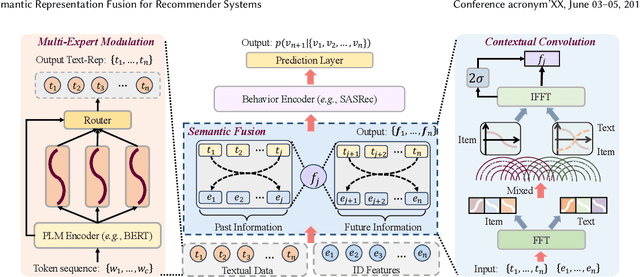

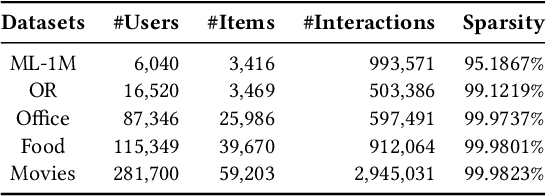

With the rapid development of recommender systems, there is increasing side information that can be employed to improve the recommendation performance. Specially, we focus on the utilization of the associated \emph{textual data} of items (eg product title) and study how text features can be effectively fused with ID features in sequential recommendation. However, there exists distinct data characteristics for the two kinds of item features, making a direct fusion method (eg adding text and ID embeddings as item representation) become less effective. To address this issue, we propose a novel {\ul \emph{Te}}xt-I{\ul \emph{D}} semantic fusion approach for sequential {\ul \emph{Rec}}ommendation, namely \textbf{\our}. The core idea of our approach is to conduct a sequence-level semantic fusion approach by better integrating global contexts. The key strategy lies in that we transform the text embeddings and ID embeddings by Fourier Transform from \emph{time domain} to \emph{frequency domain}. In the frequency domain, the global sequential characteristics of the original sequences are inherently aggregated into the transformed representations, so that we can employ simple multiplicative operations to effectively fuse the two kinds of item features. Our fusion approach can be proved to have the same effects of contextual convolution, so as to achieving sequence-level semantic fusion. In order to further improve the fusion performance, we propose to enhance the discriminability of the text embeddings from the text encoder, by adaptively injecting positional information via a mixture-of-experts~(MoE) modulation method. Our implementation is available at this repository: \textcolor{magenta}{\url{https://github.com/RUCAIBox/TedRec}}.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge