Sentence-Level BERT and Multi-Task Learning of Age and Gender in Social Media

Nov 02, 2019

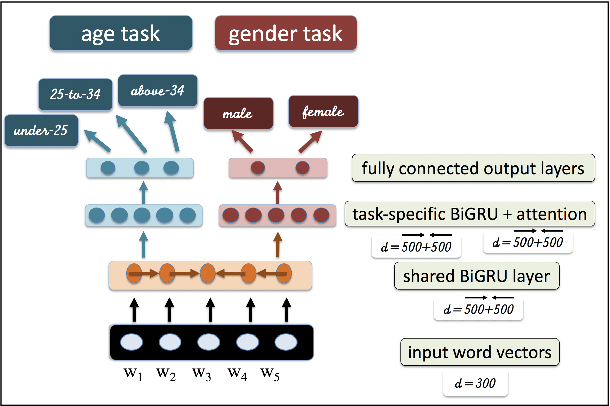

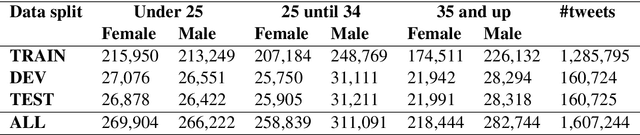

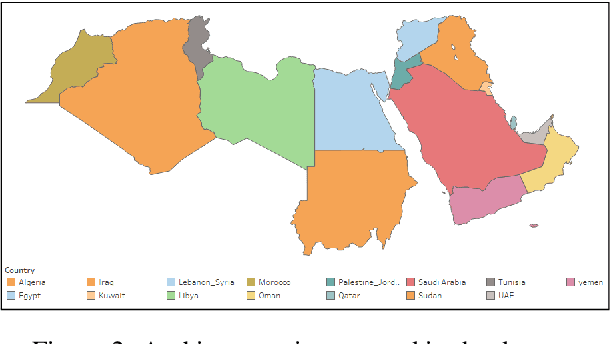

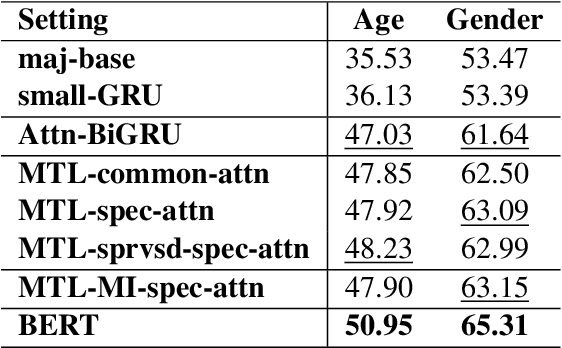

Social media currently provide a window on our lives, making it possible to learn how people from different places, with different backgrounds, ages, and genders, use language. In this work, we exploit a newly created Arabic dataset with ground-truth age and gender labels to learn these attributes both individually and in a multi-task setting at the sentence level. Our models are based on variations of deep bidirectional neural networks. More specifically, we build models with gated recurrent units (GRUs) and bidirectional encoder representations from transformers (BERT). We show the utility of multi-task learning (MTL) on the two tasks and identify task-specific attention as a superior choice in this context. We also find that a single-task BERT model outperforms our best MTL models on the two tasks. We report tweet-level accuracy of 51.43% on the age task (three-way) and 65.30% on the gender task (binary), both of which outperform our baselines by a large margin. Our models are language-agnostic and can therefore be applied to other languages.
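The abstract does not spell out the architecture, so what follows is only a minimal plain-Python sketch of what "task-specific attention" in a multi-task setting can look like. A shared encoder (a GRU or BERT in the paper; here replaced by a hypothetical hand-written matrix of token states) feeds two heads. Each head has its own attention query, pools the shared states differently, and classifies into three age classes or two gender classes. The function names `attend`, `mtl_forward`, and `mtl_loss`, the toy weights, and the unweighted summed loss are assumptions for illustration, not the authors' implementation.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]
# Hypothetical per-task head: (attention query, classifier weights, classifier bias)
TaskHead = Tuple[Vector, Matrix, Vector]


def softmax(xs: Vector) -> Vector:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def attend(states: Matrix, query: Vector) -> Vector:
    """Pool token states into one vector, weighting tokens by a task-specific query."""
    weights = softmax([dot(h, query) for h in states])
    dim = len(states[0])
    return [sum(w * h[d] for w, h in zip(weights, states)) for d in range(dim)]


def mtl_forward(states: Matrix, heads: Dict[str, TaskHead]) -> Dict[str, Vector]:
    """Shared encoder states in, per-task class probabilities out."""
    probs = {}
    for task, (query, W, b) in heads.items():
        pooled = attend(states, query)  # each task attends over the same states differently
        logits = [dot(row, pooled) + bias for row, bias in zip(W, b)]
        probs[task] = softmax(logits)
    return probs


def mtl_loss(probs: Dict[str, Vector], labels: Dict[str, int]) -> float:
    """Assumed joint objective: unweighted sum of per-task cross-entropy losses."""
    return sum(-math.log(probs[t][labels[t]]) for t in labels)


# Toy usage: hypothetical shared-encoder outputs for a 3-token tweet (hidden size 2)
states = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
heads = {
    "age": ([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]], [0.0, 0.0, 0.0]),  # 3-way
    "gender": ([0.0, 1.0], [[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0]),  # binary
}
probs = mtl_forward(states, heads)
loss = mtl_loss(probs, {"age": 0, "gender": 1})
print({t: [round(p, 3) for p in ps] for t, ps in probs.items()}, round(loss, 3))
```

Because each head owns its query, the age and gender classifiers can emphasize different tokens of the same tweet while still sharing one encoder; a single shared attention layer would force both tasks to pool identically. This is one plausible reading of why task-specific attention helped, since the abstract itself only reports that it did.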
