Sensitivity-Aware Amortized Bayesian Inference

Paper and Code

Oct 23, 2023

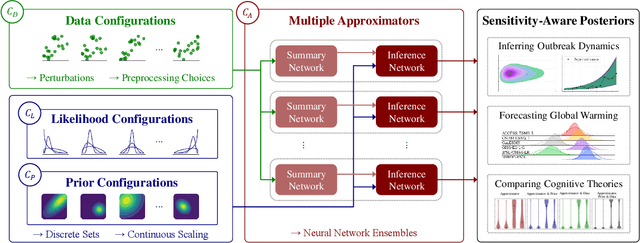

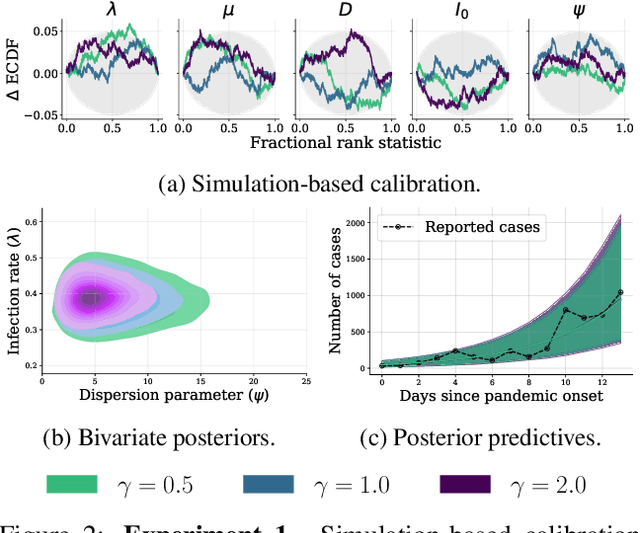

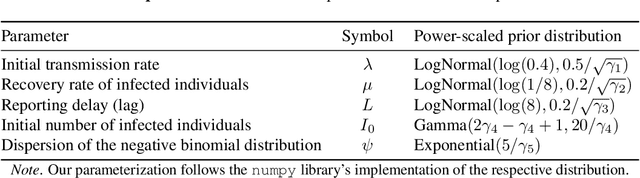

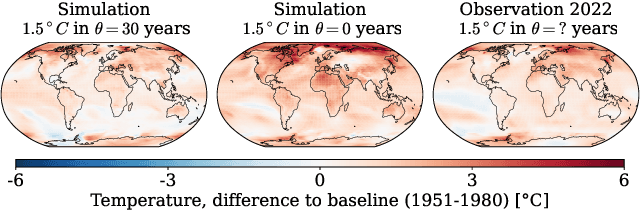

Bayesian inference is a powerful framework for making probabilistic inferences and decisions under uncertainty. Fundamental choices in modern Bayesian workflows concern the specification of the likelihood function and prior distributions, the posterior approximator, and the data. Each choice can significantly influence model-based inference and subsequent decisions, thereby necessitating sensitivity analysis. In this work, we propose a multifaceted approach to integrate sensitivity analyses into amortized Bayesian inference (ABI, i.e., simulation-based inference with neural networks). First, we utilize weight sharing to encode the structural similarities between alternative likelihood and prior specifications in the training process with minimal computational overhead. Second, we leverage the rapid inference of neural networks to assess sensitivity to various data perturbations or pre-processing procedures. In contrast to most other Bayesian approaches, both steps circumvent the costly bottleneck of refitting the model(s) for each choice of likelihood, prior, or dataset. Finally, we propose to use neural network ensembles to evaluate variation in results induced by unreliable approximation on unseen data. We demonstrate the effectiveness of our method in applied modeling problems, ranging from the estimation of disease outbreak dynamics and global warming thresholds to the comparison of human decision-making models. Our experiments showcase how our approach enables practitioners to effectively unveil hidden relationships between modeling choices and inferential conclusions.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge