Semi-supervised Nonnegative Matrix Factorization for Document Classification


Feb 28, 2022

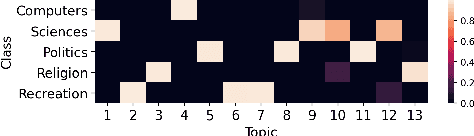

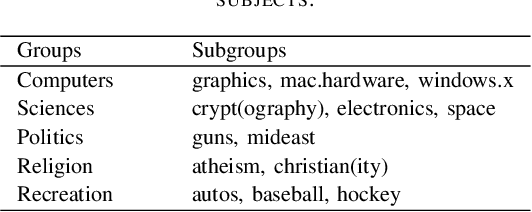

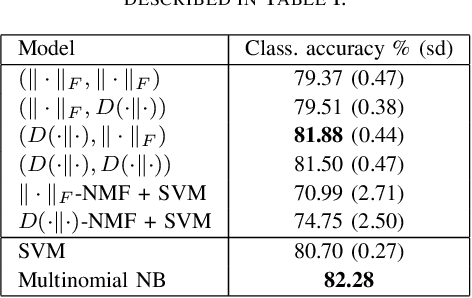

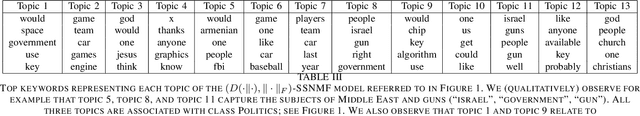

We propose new semi-supervised nonnegative matrix factorization (SSNMF) models for document classification and motivate these models as maximum likelihood estimators. Each proposed SSNMF model provides a topic model and a classification model at the same time, which makes the classification results highly interpretable. We derive training methods using multiplicative updates for each new model and demonstrate the models on single-label and multi-label document classification, although they also extend to other supervised learning tasks such as regression. We illustrate the promise of these models and training methods on document classification datasets (e.g., 20 Newsgroups, Reuters).

* arXiv admin note: substantial text overlap with arXiv:2010.07956
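
To make the idea of training an SSNMF model with multiplicative updates concrete, here is a minimal sketch in Python. It is not the paper's implementation: it assumes the classical Frobenius-norm objective `||X - A S||_F^2 + lam * ||Y - B S||_F^2` with fully observed data and labels, and it does not cover the new models or divergences the paper proposes. The function name `ssnmf_frobenius`, the parameter names, and the random toy data are all hypothetical and chosen only for illustration.

```python
import numpy as np

def ssnmf_frobenius(X, Y, k, lam=1.0, n_iter=200, eps=1e-10, seed=0):
    """Sketch of SSNMF with Frobenius losses (assumed objective, see lead-in).

    X: (n_features, n_docs) nonnegative data matrix, one document per column.
    Y: (n_classes, n_docs) nonnegative label matrix (e.g. one-hot columns).
    Returns the topic matrix A, the classifier matrix B, the shared
    document representation S, and the objective value after each iteration.
    """
    rng = np.random.default_rng(seed)
    n_features, n_docs = X.shape
    n_classes = Y.shape[0]

    # Random nonnegative initialisation; multiplicative updates keep it nonnegative.
    A = rng.random((n_features, k))
    B = rng.random((n_classes, k))
    S = rng.random((k, n_docs))

    losses = []
    for _ in range(n_iter):
        # Update the topic matrix A and the classifier matrix B with S fixed.
        SSt = S @ S.T
        A *= (X @ S.T) / (A @ SSt + eps)
        B *= (Y @ S.T) / (B @ SSt + eps)

        # Update the shared representation S using both reconstruction terms.
        numerator = A.T @ X + lam * (B.T @ Y)
        denominator = (A.T @ A + lam * (B.T @ B)) @ S + eps
        S *= numerator / denominator

        loss = np.linalg.norm(X - A @ S) ** 2 + lam * np.linalg.norm(Y - B @ S) ** 2
        losses.append(loss)
    return A, B, S, losses

# Toy example: 12 random "documents" with 30 features and 3 classes.
toy_rng = np.random.default_rng(1)
X = toy_rng.random((30, 12))
Y = np.zeros((3, 12))
Y[np.arange(12) % 3, np.arange(12)] = 1.0  # one-hot labels, one per column

A, B, S, losses = ssnmf_frobenius(X, Y, k=4)
pred = np.argmax(B @ S, axis=0)  # predicted class index per document
```

In this sketch the columns of `A` play the role of topics, while `B` maps each document's topic representation in `S` to class scores, which is what makes a prediction traceable back to topics. For an unlabeled document one would typically fit its column of `S` from `X` alone and then read off `B @ S`; that step is omitted here.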
