Semantic Correlation Promoted Shape-Variant Context for Segmentation

Paper and Code

Sep 05, 2019

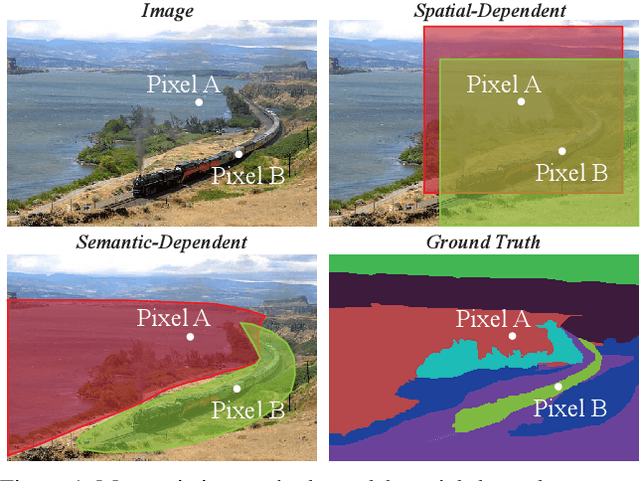

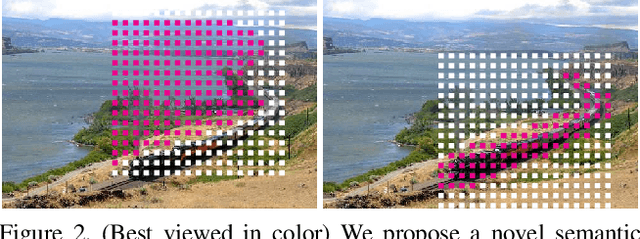

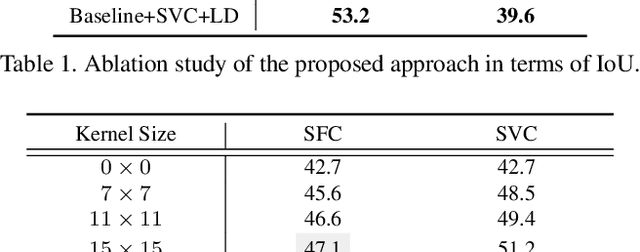

Context is essential for semantic segmentation. Due to the diverse shapes of objects and their complex layout in various scene images, the spatial scales and shapes of contexts for different objects have very large variation. It is thus ineffective or inefficient to aggregate various context information from a predefined fixed region. In this work, we propose to generate a scale- and shape-variant semantic mask for each pixel to confine its contextual region. To this end, we first propose a novel paired convolution to infer the semantic correlation of the pair and based on that to generate a shape mask. Using the inferred spatial scope of the contextual region, we propose a shape-variant convolution, of which the receptive field is controlled by the shape mask that varies with the appearance of input. In this way, the proposed network aggregates the context information of a pixel from its semantic-correlated region instead of a predefined fixed region. Furthermore, this work also proposes a labeling denoising model to reduce wrong predictions caused by the noisy low-level features. Without bells and whistles, the proposed segmentation network achieves new state-of-the-arts consistently on the six public segmentation datasets.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge