Semantic Change Detection with Hypermaps


Mar 16, 2017

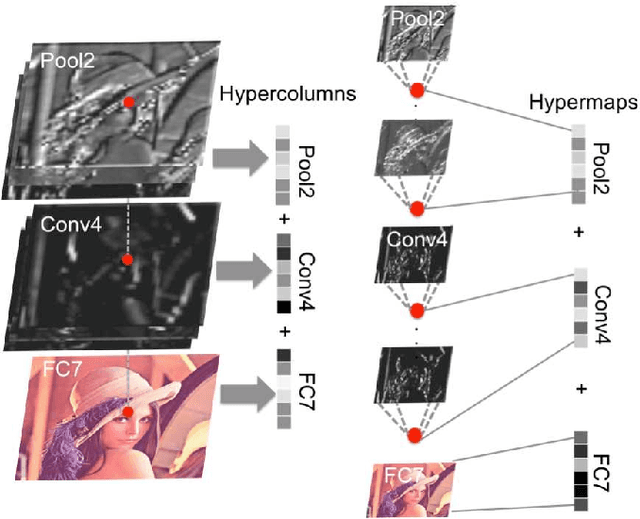

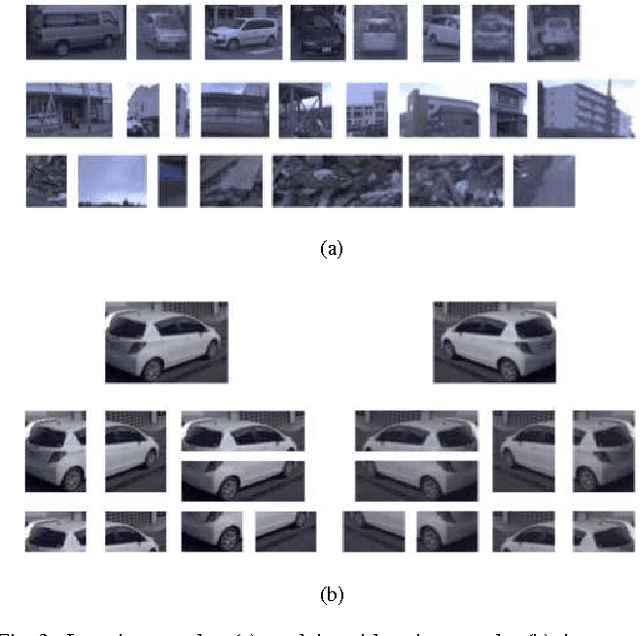

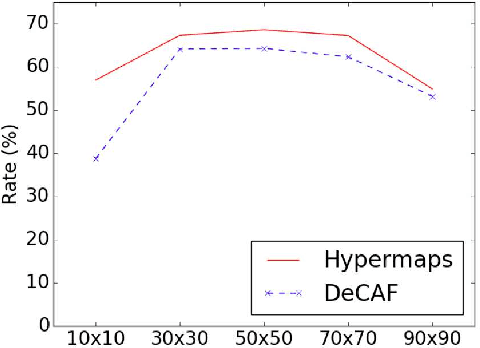

Change detection is the study of detecting changes between two images of the same scene taken at different times. From the detected change areas alone, however, a human cannot understand how the two images differ. A semantic understanding is therefore required in change detection research, for example in disaster investigation. The paper proposes the concept of semantic change detection, which assigns a semantic meaning to each detected change area. We mainly focus on a novel semantic segmentation step that is added to a conventional change detection approach. To solve this problem and obtain a high level of performance, we propose an improvement to the hypercolumns representation, hereafter called hypermaps, which makes effective use of the convolutional maps obtained from convolutional neural networks (CNNs). We also employ a multi-scale feature representation captured from image patches of different sizes. We applied our method to the TSUNAMI Panoramic Change Detection dataset, after re-annotating the changed areas of the dataset with semantic classes. The results show that our multi-scale hypermaps provide outstanding performance on the re-annotated TSUNAMI dataset.
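To make the idea of building a per-pixel descriptor from convolutional maps more concrete, the following is a minimal sketch of a hypercolumn-style feature stack. It is not the paper's hypermaps implementation: the function names `upsample_nearest` and `stack_feature_maps` are hypothetical, random arrays stand in for real CNN activations, and nearest-neighbour upsampling is an assumption chosen for brevity.

```python
import numpy as np


def upsample_nearest(fmap, out_h, out_w):
    """Resize a (C, H, W) feature map to (C, out_h, out_w) by nearest-neighbour lookup."""
    _, h, w = fmap.shape
    # For every output row/column, pick the source row/column it falls into.
    rows = np.arange(out_h) * h // out_h
    cols = np.arange(out_w) * w // out_w
    return fmap[:, rows[:, None], cols[None, :]]


def stack_feature_maps(fmaps, out_h, out_w):
    """Bring maps from several layers to one resolution and concatenate their channels.

    The result has shape (sum of channels, out_h, out_w), so result[:, y, x]
    is one descriptor for pixel (y, x) that mixes shallow and deep features.
    """
    resized = [upsample_nearest(f, out_h, out_w) for f in fmaps]
    return np.concatenate(resized, axis=0)


# Stand-ins for activations of three CNN layers (assumed shapes, not from the paper).
rng = np.random.default_rng(0)
layer_maps = [
    rng.standard_normal((4, 8, 8)),    # shallow layer: few channels, high resolution
    rng.standard_normal((8, 4, 4)),    # middle layer
    rng.standard_normal((16, 2, 2)),   # deep layer: many channels, low resolution
]

stacked = stack_feature_maps(layer_maps, 16, 16)
pixel_descriptor = stacked[:, 5, 7]    # 4 + 8 + 16 = 28 values for pixel (5, 7)
```

In a real pipeline the stand-in arrays would be replaced by activations of a trained CNN, and the per-pixel descriptors would feed a classifier that predicts a semantic class for each changed pixel. A multi-scale variant in the spirit of the abstract could repeat this over image patches of several sizes and combine the results.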
