Semantic-aware Consistency Network for Cloth-changing Person Re-Identification

Aug 27, 2023

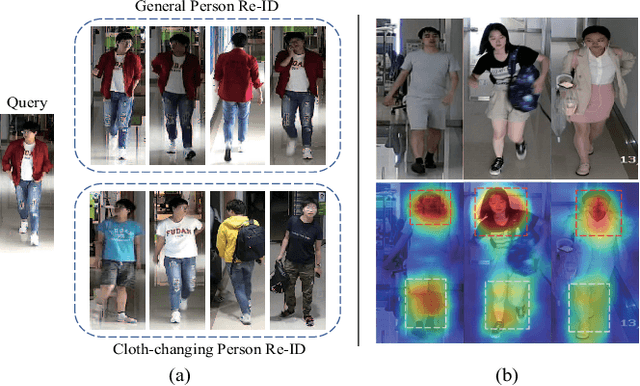

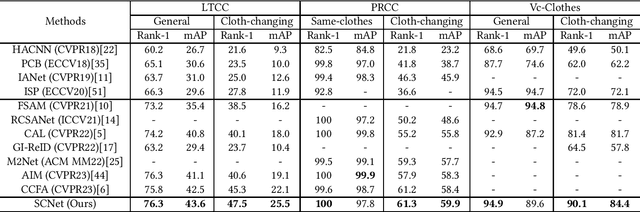

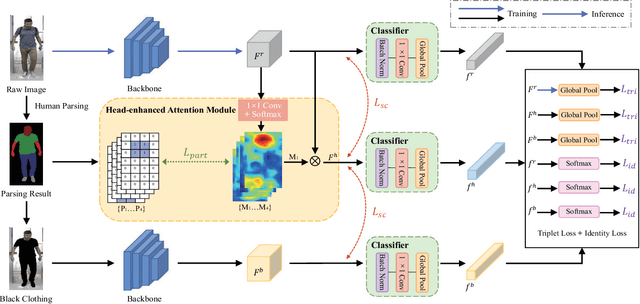

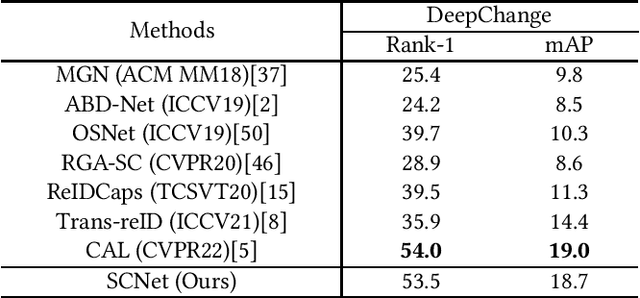

Cloth-changing Person Re-Identification (CC-ReID) is a challenging task that aims to retrieve a target person across multiple surveillance cameras when that person's clothing may change. Despite recent progress in CC-ReID, existing approaches are still hindered by clothing variations because they lack effective constraints to keep the model consistently focused on clothing-irrelevant regions. To address this issue, we present a Semantic-aware Consistency Network (SCNet) that learns identity-related semantic features through effective consistency constraints. Specifically, we generate a black-clothing image by erasing the pixels in the clothing area, which explicitly mitigates the interference from clothing variations. In addition, to fully exploit fine-grained identity information, we introduce a head-enhanced attention module, which learns soft attention maps using the proposed part-based matching loss to highlight head information. We further design a semantic consistency loss to facilitate the learning of high-level identity-related semantic features, forcing the model to focus on semantically consistent clothing-irrelevant regions. Thanks to these consistency constraints, our model does not require any auxiliary segmentation module to generate the black-clothing image or locate the head region during inference. Extensive experiments on four cloth-changing person Re-ID datasets (LTCC, PRCC, Vc-Clothes, and DeepChange) demonstrate that SCNet makes significant improvements over prior state-of-the-art approaches. Our code is available at: https://github.com/Gpn-star/SCNet.
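
The black-clothing image described in the abstract can be illustrated with a minimal sketch. It is not taken from the SCNet repository. It assumes a per-pixel human-parsing map is available when the image is prepared, and the function name `make_black_clothing_image` and the label ids in `CLOTHING_LABELS` are hypothetical placeholders that would depend on the parsing model actually used.

```python
import numpy as np

# Hypothetical label ids marking clothing classes in a human-parsing map.
# Real ids depend on the parsing model; these are assumptions for illustration.
CLOTHING_LABELS = (1, 2)


def make_black_clothing_image(image, parsing, clothing_labels=CLOTHING_LABELS):
    """Return a copy of `image` whose clothing pixels are set to zero (black).

    image:   (H, W, C) array, e.g. an RGB person crop.
    parsing: (H, W) integer array with one semantic label per pixel.
    """
    if image.shape[:2] != parsing.shape:
        raise ValueError("image and parsing map must share height and width")
    # Boolean (H, W) mask that is True wherever the pixel belongs to clothing.
    clothing_mask = np.isin(parsing, clothing_labels)
    erased = image.copy()
    # Indexing with the 2-D mask zeroes every channel of the selected pixels.
    erased[clothing_mask] = 0
    return erased


# Toy example: a 4x3 grey image whose two middle rows are labelled as clothing.
image = np.full((4, 3, 3), 200, dtype=np.uint8)
parsing = np.zeros((4, 3), dtype=np.int64)
parsing[1:3, :] = 1
black = make_black_clothing_image(image, parsing)
```

In this toy case the two middle rows of `black` become zero while the remaining rows and the input `image` are left unchanged. How the resulting image is consumed during training is beyond what the abstract specifies.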
