Self-organizing Democratized Learning: Towards Large-scale Distributed Learning Systems


Jul 07, 2020

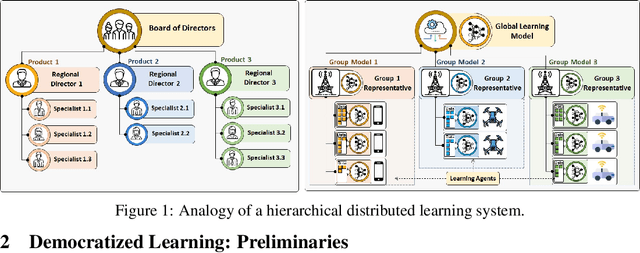

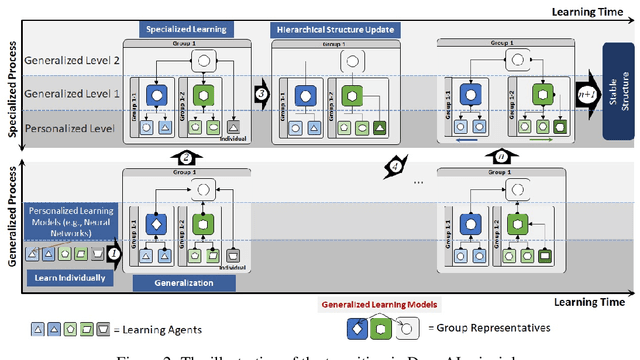

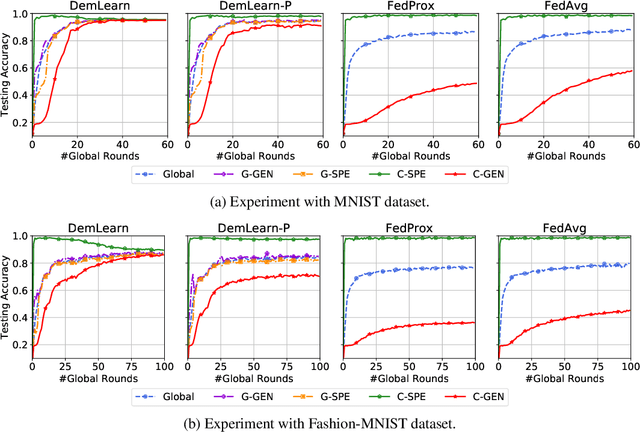

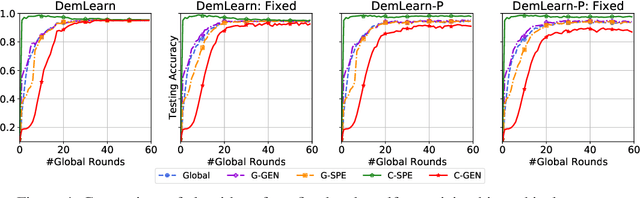

Emerging cross-device artificial intelligence (AI) applications require a transition from conventional centralized learning systems towards large-scale distributed AI systems that can collaboratively perform complex learning tasks. In this regard, democratized learning (Dem-AI) (Minh et al. 2020) lays out a holistic philosophy with underlying principles for building large-scale distributed and democratized machine learning systems. The outlined principles are meant to generalize distributed learning beyond existing mechanisms such as federated learning (FL). Inspired by this philosophy, this paper proposes a novel distributed learning approach. The approach consists of a self-organizing hierarchical structuring mechanism based on agglomerative clustering, hierarchical generalization, and a corresponding learning mechanism. Subsequently, a hierarchical generalized learning problem is formulated in recursive form and shown to be approximately solved using the solutions of distributed personalized learning problems and a hierarchical generalized averaging mechanism. To that end, a distributed learning algorithm, DemLearn, and its variant, DemLearn-P, are proposed. Extensive experiments on the benchmark MNIST and Fashion-MNIST datasets show that the proposed algorithms achieve better generalization performance of the learning models at agents than conventional FL algorithms. A detailed analysis provides useful configurations for further tuning both the generalization and specialization performance of the learning models in Dem-AI systems.
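The combination of agglomerative clustering and hierarchical averaging described above can be illustrated with a toy sketch. This is not the DemLearn algorithm from the paper: the `Node` class, the `build_hierarchy` function, the Euclidean distance between parameter vectors, and the sample-weighted averaging rule are all assumptions chosen for illustration, and no local training step is modeled.

```python
from dataclasses import dataclass, field
from typing import List
import math


@dataclass
class Node:
    """Hypothetical container: a learning agent (leaf) or a group of agents."""
    params: List[float]   # flattened model parameters
    n_samples: int        # number of data points backing these parameters
    children: List["Node"] = field(default_factory=list)


def distance(a: Node, b: Node) -> float:
    # Assumption: similarity between models is Euclidean distance in parameter space.
    return math.dist(a.params, b.params)


def merge(a: Node, b: Node) -> Node:
    """Create a parent whose parameters are the sample-weighted average of its children."""
    total = a.n_samples + b.n_samples
    params = [
        (a.n_samples * x + b.n_samples * y) / total
        for x, y in zip(a.params, b.params)
    ]
    return Node(params, total, [a, b])


def build_hierarchy(agents: List[Node]) -> Node:
    """Bottom-up (agglomerative) clustering: repeatedly merge the two closest nodes."""
    nodes = list(agents)
    while len(nodes) > 1:
        # Find the closest pair among the current top-level nodes.
        i, j = min(
            ((i, j) for i in range(len(nodes)) for j in range(i + 1, len(nodes))),
            key=lambda pair: distance(nodes[pair[0]], nodes[pair[1]]),
        )
        parent = merge(nodes[i], nodes[j])
        nodes = [n for k, n in enumerate(nodes) if k not in (i, j)] + [parent]
    return nodes[0]


# Four toy agents: two with similar models near the origin, two near (5, 5).
agents = [
    Node([0.0, 0.0], 10),
    Node([0.2, 0.0], 30),
    Node([5.0, 5.0], 20),
    Node([5.0, 5.4], 20),
]
root = build_hierarchy(agents)
```

In this sketch, agents with similar parameters are grouped first, so each intermediate node acts as a generalized model for its group and the root acts as a generalized model for all agents. Because every merge is sample-weighted, the root parameters coincide with the sample-weighted average over all leaves.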
