Saliency in Augmented Reality

Paper and Code

Apr 18, 2022

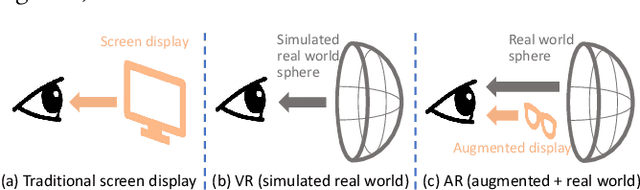

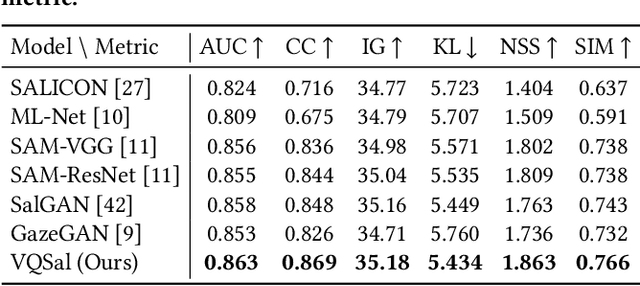

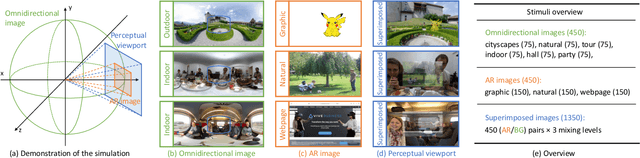

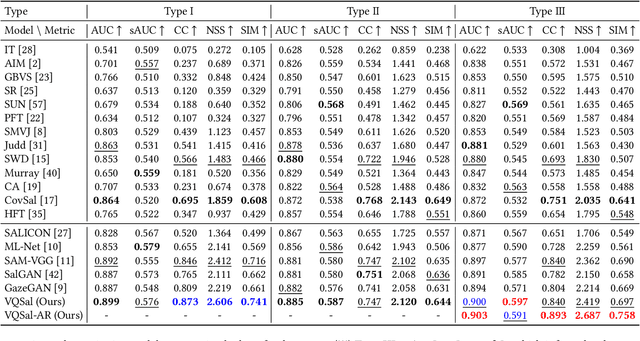

With the rapid development of multimedia technology, Augmented Reality (AR) has become a promising next-generation mobile platform. The primary theory underlying AR is human visual confusion, which allows users to perceive the real-world scenes and augmented contents (virtual-world scenes) simultaneously by superimposing them together. To achieve good Quality of Experience (QoE), it is important to understand the interaction between two scenarios, and harmoniously display AR contents. However, studies on how this superimposition will influence the human visual attention are lacking. Therefore, in this paper, we mainly analyze the interaction effect between background (BG) scenes and AR contents, and study the saliency prediction problem in AR. Specifically, we first construct a Saliency in AR Dataset (SARD), which contains 450 BG images, 450 AR images, as well as 1350 superimposed images generated by superimposing BG and AR images in pair with three mixing levels. A large-scale eye-tracking experiment among 60 subjects is conducted to collect eye movement data. To better predict the saliency in AR, we propose a vector quantized saliency prediction method and generalize it for AR saliency prediction. For comparison, three benchmark methods are proposed and evaluated together with our proposed method on our SARD. Experimental results demonstrate the superiority of our proposed method on both of the common saliency prediction problem and the AR saliency prediction problem over benchmark methods. Our data collection methodology, dataset, benchmark methods, and proposed saliency models will be publicly available to facilitate future research.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge