Robust Analysis of Multi-Task Learning on a Complex Vision System


Feb 05, 2024

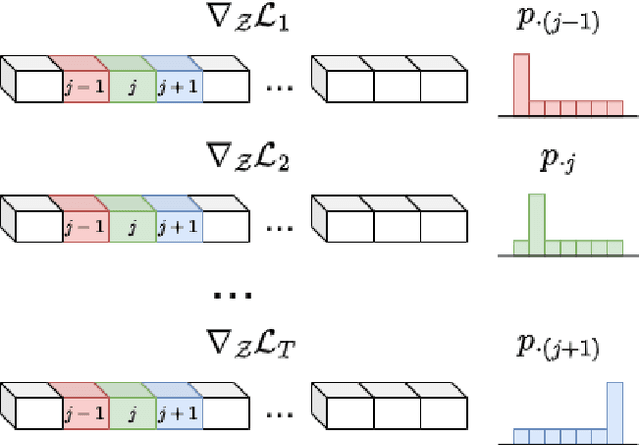

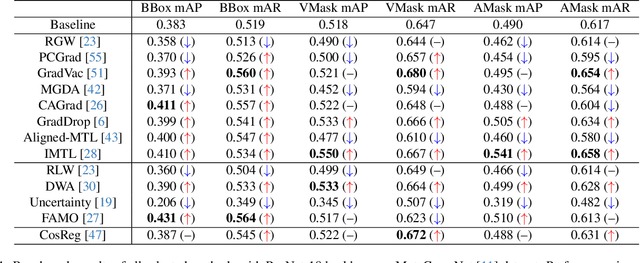

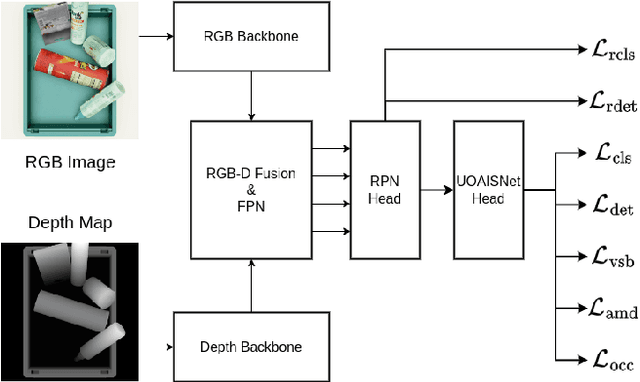

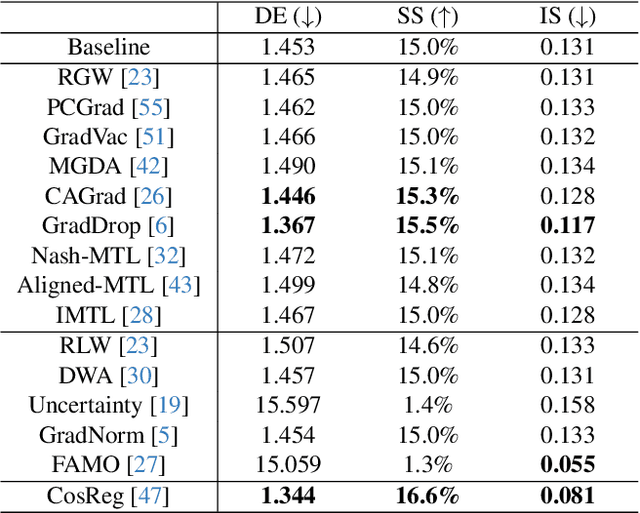

Multi-task learning (MTL) has been widely studied in the past decade. In particular, dozens of optimization algorithms have been proposed for different settings. While each of them claimed improvement when applied to certain models on certain datasets, there is still a lack of deep understanding of their performance in complex real-world scenarios. We identify the gaps between research and application and make the following four contributions. (1) We comprehensively evaluate a large set of existing MTL optimization algorithms on the MetaGraspNet dataset, which is designed for robotic grasping tasks, is complex, and has high real-world application value, and we identify the best-performing methods. (2) We empirically compare method performance when applied to feature-level gradients versus parameter-level gradients over a large set of MTL optimization algorithms, and conclude that the feature-level gradient surrogate is reasonable when there is a method-specific theoretical guarantee but does not generalize to all methods. (3) We provide insights into the problem of task interference and show that the existing perspectives of gradient angles and relative gradient norms do not precisely reflect the challenges of MTL, as the rankings of the methods based on these two indicators do not align well with those based on test-set performance. (4) We provide a novel view of the task interference problem from the perspective of the latent space induced by the feature extractor and provide training monitoring results based on feature disentanglement.
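For readers unfamiliar with the two indicators named in contribution (3), the following is a minimal sketch of how a gradient angle (expressed as cosine similarity) and a relative gradient norm can be computed for a pair of per-task gradients. It is an illustration only, not the authors' implementation: the function names and the toy gradient vectors are hypothetical, and real gradients would be flattened from the shared parameters (or shared features) of a network.

```python
import math

# Hypothetical helpers for illustration; not taken from the paper's code.

def dot(u, v):
    """Inner product of two equal-length gradient vectors."""
    return sum(a * b for a, b in zip(u, v))

def norm(u):
    """Euclidean norm of a gradient vector."""
    return math.sqrt(dot(u, u))

def gradient_cosine(g_a, g_b):
    """Cosine of the angle between two task gradients.

    Negative values mean the tasks pull the shared weights in
    conflicting directions. Returns 0.0 if either gradient is zero.
    """
    denom = norm(g_a) * norm(g_b)
    if denom == 0.0:
        return 0.0
    return dot(g_a, g_b) / denom

def relative_norm(g_a, g_b):
    """Ratio of gradient magnitudes, ||g_a|| / ||g_b||.

    Values far from 1 mean one task dominates the summed update.
    Returns infinity if g_b is the zero vector.
    """
    nb = norm(g_b)
    if nb == 0.0:
        return math.inf
    return norm(g_a) / nb

# Toy gradients (assumed values) for two tasks on the same shared parameters.
g_task_a = [3.0, 4.0]
g_task_b = [-6.0, -8.0]

cos_ab = gradient_cosine(g_task_a, g_task_b)   # directions are exactly opposed
ratio_ab = relative_norm(g_task_a, g_task_b)   # task b's gradient is twice as large
print(cos_ab, ratio_ab)
```

In this toy case the two gradients point in opposite directions and differ in magnitude by a factor of two, which both indicators would flag as interference. The abstract's point is that rankings derived from such indicators do not align well with rankings based on test-set performance.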
