Rethinking the Importance of Sampling in Physics-informed Neural Networks

Jul 05, 2022

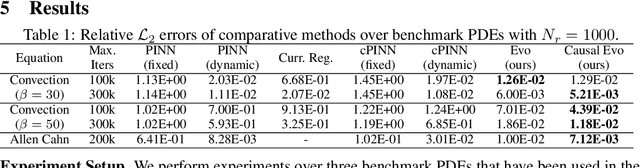

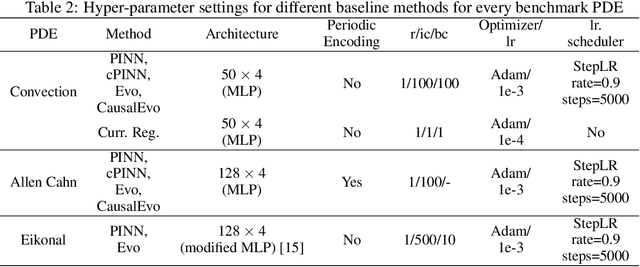

Physics-informed neural networks (PINNs) have emerged as a powerful tool for solving partial differential equations (PDEs) in a variety of domains. While previous research on PINNs has mainly focused on constructing and balancing loss functions during training to avoid poor minima, the effect of sampling collocation points on the performance of PINNs has largely been overlooked. In this work, we find that the performance of PINNs can vary significantly with different sampling strategies, and that using a fixed set of collocation points can be quite detrimental to the convergence of PINNs to the correct solution. In particular, (1) we hypothesize that the training of PINNs relies on successful "propagation" of the solution from initial and/or boundary condition points to interior points, and that PINNs with poor sampling strategies can get stuck at trivial solutions if there are *propagation failures*. (2) We demonstrate that propagation failures are characterized by highly imbalanced PDE residual fields, where very high residuals are observed over very narrow regions. (3) To mitigate propagation failures, we propose a novel *evolutionary sampling* (Evo) method that can incrementally accumulate collocation points in regions of high PDE residuals. We further provide an extension of Evo that respects the principle of causality when solving time-dependent PDEs. We empirically demonstrate the efficacy and efficiency of our proposed methods on a variety of PDE problems.
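The idea of accumulating collocation points in high-residual regions can be illustrated with a minimal NumPy sketch. The abstract does not specify the update rule, so everything below is an assumption made for illustration: the retention rule (keep points whose absolute residual exceeds the population mean, redraw the rest uniformly), the function name `evo_resample`, and the toy residual `toy_residual`, which stands in for a real PDE residual computed from a trained network.

```python
import numpy as np


def evo_resample(points, residual_fn, low, high, rng):
    """One resampling step (illustrative assumption, not the paper's exact rule).

    Points whose absolute residual exceeds the population mean are retained;
    the remaining slots are refilled with fresh uniform samples, so the
    population size stays constant.
    """
    fitness = np.abs(residual_fn(points))
    threshold = fitness.mean()
    retained = points[fitness > threshold]
    n_new = len(points) - len(retained)
    fresh = rng.uniform(low, high, size=(n_new, points.shape[1]))
    return np.concatenate([retained, fresh], axis=0)


def toy_residual(x):
    # Hypothetical stand-in for a PDE residual field: a very high residual
    # concentrated in a very narrow region around x = 0.8.
    return np.exp(-((x[:, 0] - 0.8) ** 2) / (2 * 0.02 ** 2))


rng = np.random.default_rng(0)
low, high = np.array([0.0]), np.array([1.0])

# Start from a uniform population of collocation points on [0, 1].
points = rng.uniform(low, high, size=(1000, 1))

# In a real PINN training loop, each resampling step would be interleaved
# with gradient updates, and the residual would change as the network trains.
for _ in range(20):
    points = evo_resample(points, toy_residual, low, high, rng)

# Fraction of the population that ended up within 0.05 of the residual peak.
near_peak = np.mean(np.abs(points[:, 0] - 0.8) < 0.05)
```

In this toy setup the residual field is frozen, so the fraction `near_peak` grows well beyond the roughly 0.1 expected under purely uniform sampling, while the uniformly redrawn points keep the rest of the domain covered. With a network in the loop, high-residual regions would shrink as training progresses and the retained points would be released again.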
