Restrained Generative Adversarial Network against Overfitting in Numeric Data Augmentation

Oct 26, 2020

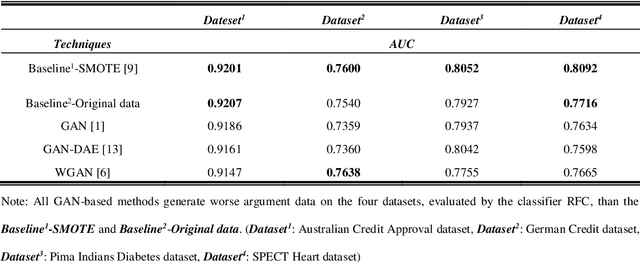

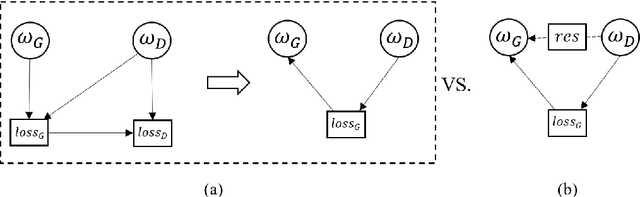

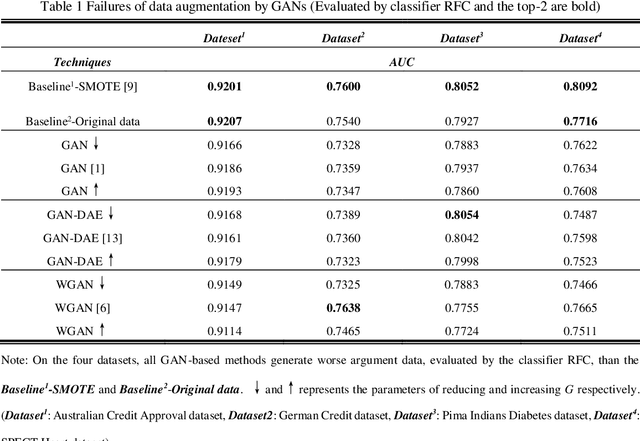

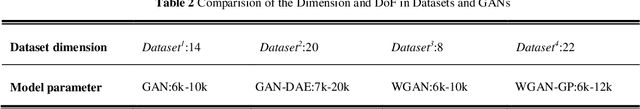

In recent studies, the Generative Adversarial Network (GAN) has become a popular scheme for augmenting image datasets. In our study, however, we find that the generator G of a GAN fails to generate numerical data in lower-dimensional spaces, and we address the overfitting that arises in this generation. By analyzing the Directed Graphical Model (DGM), we propose a theoretical restraint, independence on the loss function, to suppress the overfitting. In practice, we propose two frameworks that apply this theoretical restraint to the network structure: the Statically Restrained GAN (SRGAN) and the Dynamically Restrained GAN (DRGAN). In the static structure, we predefine a pair of particular network topologies for G and D as the restraint, and quantify that restraint with an interpretable metric, the Similarity of the Restraint (SR). For DRGAN, we design an adjustable dropout module as the restraint function. Across 20 groups of experiments, covering four public class-imbalanced numerical datasets and five classifiers, the static and dynamic methods together produce the best augmentation result in 19 of the 20 groups, and in 14 of the 20 groups both methods rank among the top two, demonstrating the effectiveness and feasibility of the theoretical restraint.
