REGNet: REgion-based Grasp Network for Single-shot Grasp Detection in Point Clouds

Mar 03, 2020

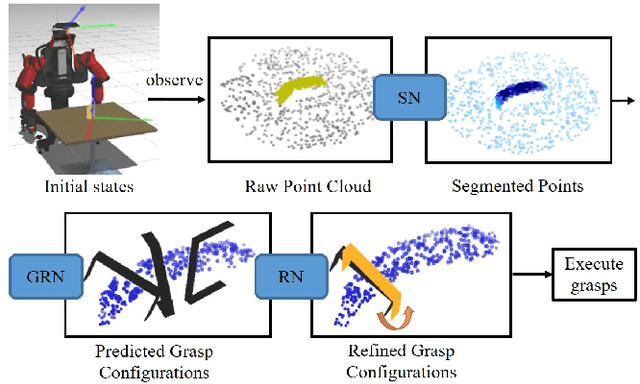

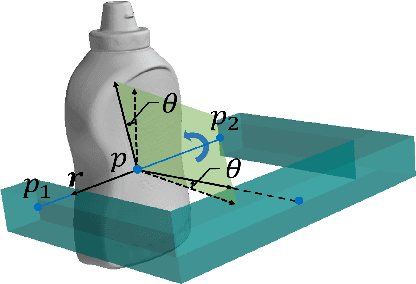

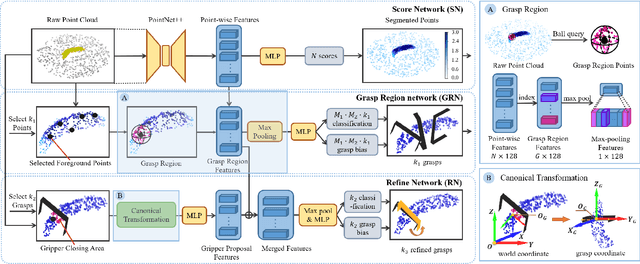

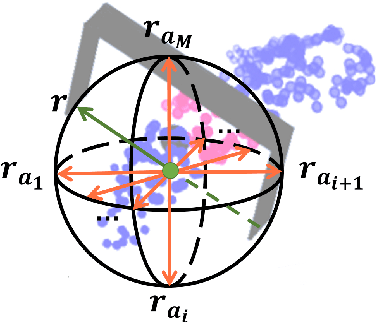

Learning a robust representation of robotic grasping from point clouds is a crucial but challenging task. In this paper, we propose an end-to-end single-shot grasp detection network for parallel grippers that takes a single-view point cloud as input. Our network consists of three stages: Score Network (SN), Grasp Region Network (GRN) and Refine Network (RN). Specifically, SN selects positive points with high grasp confidence. GRN coarsely generates a set of grasp proposals on the selected positive points. Finally, RN refines the detected grasps based on local grasp features. To further improve performance, we propose a grasp anchor mechanism, in which grasp anchors are introduced to generate grasp proposals. Moreover, we contribute a large-scale grasp dataset, built on the YCB dataset, that requires no manual annotation. Experiments show that our method significantly outperforms several successful point-cloud-based grasp detection methods, including GPD, PointNetGPD, and S$^4$G.
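The three-stage data flow described in the abstract can be illustrated with a toy sketch. This is not the authors' implementation: the learned networks are replaced by hand-written stand-ins, the grasp is reduced to a single 1-D angle, and every name here (`Grasp`, `score_points`, `propose_grasps`, `refine_grasps`, `detect_grasps`) and every heuristic is an assumption made only to show how selection, anchor-based proposal, and refinement could chain together.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float, float]


@dataclass
class Grasp:
    center: Point
    angle: float  # simplified 1-D gripper orientation in [0, pi); an assumption
    score: float


def centroid(points: List[Point]) -> Point:
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(3))


def toy_target_angle(p: Point) -> float:
    # Hypothetical "true" orientation, used only so the stand-ins have a target.
    return math.atan2(p[1], p[0]) % math.pi


def score_points(points: List[Point], threshold: float) -> List[Tuple[Point, float]]:
    """SN stand-in: keep points whose toy confidence reaches the threshold."""
    c = centroid(points)
    # Toy confidence: decays with distance from the cloud centroid.
    scored = [(p, math.exp(-math.dist(p, c))) for p in points]
    return [(p, s) for p, s in scored if s >= threshold]


def propose_grasps(positives, num_anchors: int = 4) -> List[Grasp]:
    """GRN stand-in: snap each positive point to the nearest orientation anchor."""
    anchors = [k * math.pi / num_anchors for k in range(num_anchors)]
    proposals = []
    for p, s in positives:
        target = toy_target_angle(p)
        # Nearest anchor by plain difference (wrap-around at pi is ignored here).
        nearest = min(anchors, key=lambda a: abs(a - target))
        proposals.append(Grasp(p, nearest, s))
    return proposals


def refine_grasps(proposals: List[Grasp]) -> List[Grasp]:
    """RN stand-in: move each coarse angle halfway toward the toy target."""
    refined = []
    for g in proposals:
        residual = toy_target_angle(g.center) - g.angle
        refined.append(Grasp(g.center, g.angle + 0.5 * residual, g.score))
    return refined


def detect_grasps(points: List[Point], threshold: float = 0.5,
                  num_anchors: int = 4) -> List[Grasp]:
    positives = score_points(points, threshold)
    return refine_grasps(propose_grasps(positives, num_anchors))
```

Calling `detect_grasps(points, threshold=0.05)` on a small list of 3-D tuples returns one refined `Grasp` per point that passes the toy confidence test. In the actual network each stage would be a learned module operating on point features rather than these heuristics.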
