Reducing Visual Confusion with Discriminative Attention

Nov 19, 2018

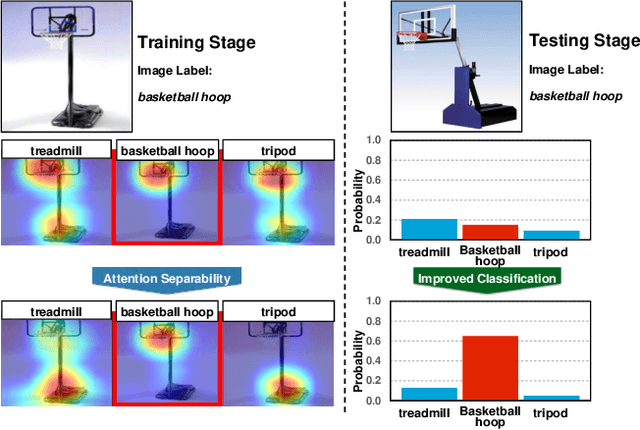

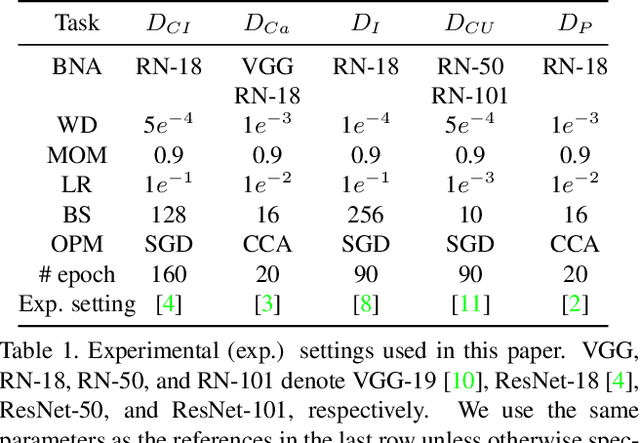

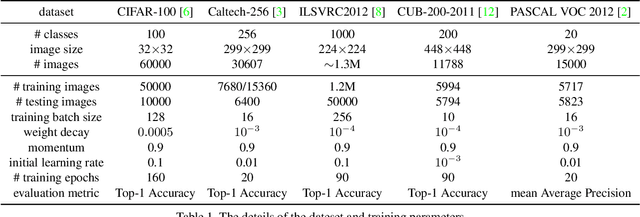

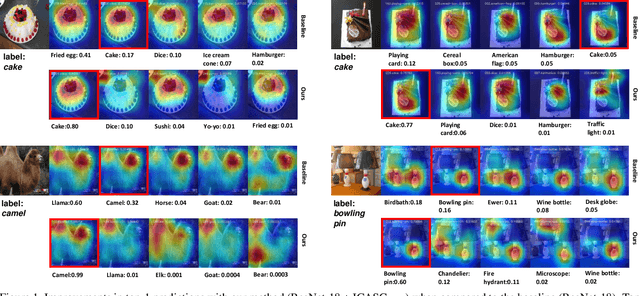

Recent developments in gradient-based attention modeling have improved model interpretability by means of class-specific attention maps. A key limitation of these approaches, however, is that the resulting attention maps, while well localized, are not class discriminative. In this paper, we address this limitation with a new learning framework that makes class-discriminative attention and cross-layer attention consistency a principled and explicit part of the learning process. Furthermore, our framework provides attention guidance to the model in an end-to-end fashion, resulting in better discriminability and reduced visual confusion. We conduct extensive experiments with the proposed framework on several image classification benchmarks and demonstrate its efficacy through improved classification accuracy on CIFAR-100 (+3.46%), Caltech-256 (+1.64%), ImageNet (+0.92%), CUB-200-2011 (+4.8%), and PASCAL VOC2012 (+5.78%).
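To make the notion of a gradient-based, class-specific attention map concrete, the following is a minimal sketch in the style of Grad-CAM, a widely used technique of this kind. The abstract does not say which formulation the paper builds on, so treat this as an assumption. The function names `grad_cam_map` and `attention_overlap` are hypothetical, and the overlap score is only an illustrative way to quantify how much two classes' maps coincide; it is not the paper's loss.

```python
import numpy as np


def grad_cam_map(activations, gradients):
    """Grad-CAM-style attention map (illustrative, not the paper's code).

    activations: feature maps of one conv layer, shape (C, H, W).
    gradients:   gradient of one class score w.r.t. those feature maps,
                 same shape (C, H, W).
    Returns an (H, W) map scaled to [0, 1].
    """
    # Channel weights: global average of the gradients over space.
    weights = gradients.mean(axis=(1, 2))                      # (C,)
    # Weighted sum of feature maps, then ReLU to keep positive evidence.
    cam = np.tensordot(weights, activations, axes=([0], [0]))  # (H, W)
    cam = np.maximum(cam, 0.0)
    peak = cam.max()
    return cam / peak if peak > 0 else cam


def attention_overlap(map_a, map_b):
    """Hypothetical soft-IoU between two attention maps, in [0, 1].

    A value near 1 means the two classes attend to the same pixels,
    i.e. the maps are not class discriminative.
    """
    denom = np.maximum(map_a, map_b).sum()
    if denom == 0:
        return 0.0
    return float(np.minimum(map_a, map_b).sum() / denom)


# Toy example: two channels, each firing at a different location.
acts = np.zeros((2, 2, 2))
acts[0, 0, 0] = 1.0
acts[1, 1, 1] = 1.0
grads_a = np.stack([np.ones((2, 2)), -np.ones((2, 2))])  # class A favors channel 0
grads_b = -grads_a                                       # class B favors channel 1

map_a = grad_cam_map(acts, grads_a)
map_b = grad_cam_map(acts, grads_b)
overlap = attention_overlap(map_a, map_b)
```

In this toy case the two classes attend to disjoint locations, so the overlap score is zero. Maps that are well localized but not class discriminative would instead produce a high overlap between the attention maps of different classes for the same image.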
