Reduce, Reuse, Recycle: Categories for Compositional Reinforcement Learning

Paper and Code

Aug 23, 2024

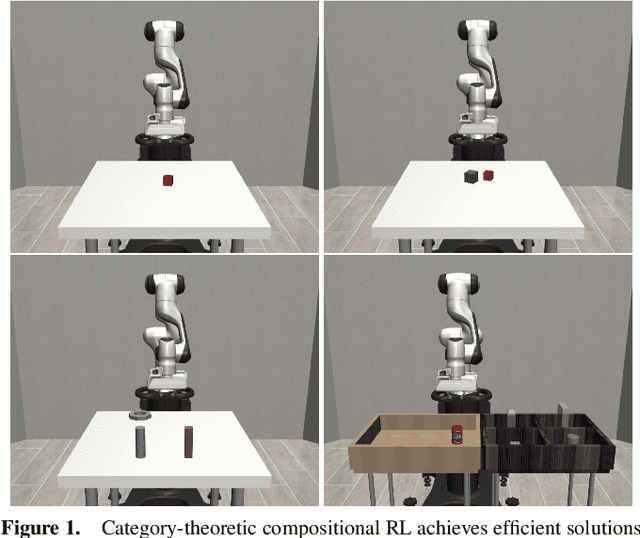

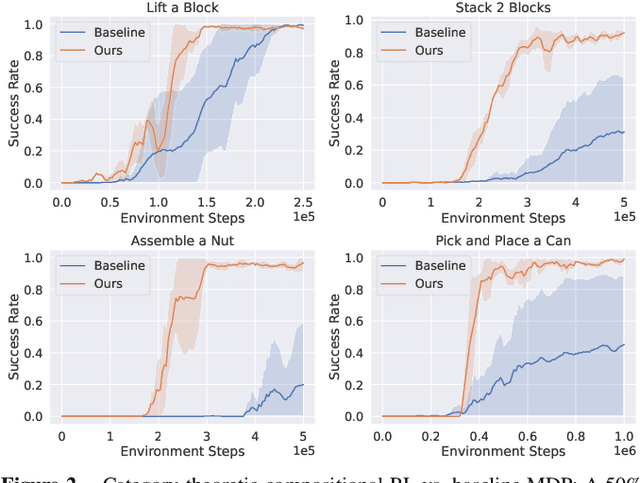

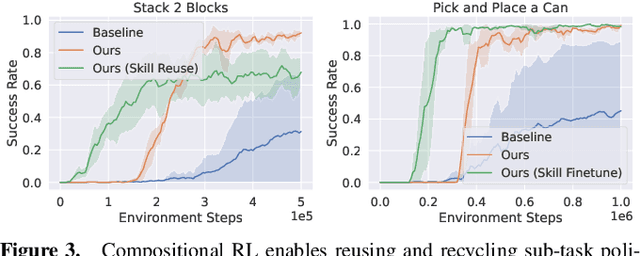

In reinforcement learning, conducting task composition by forming cohesive, executable sequences from multiple tasks remains challenging. However, the ability to (de)compose tasks is a linchpin in developing robotic systems capable of learning complex behaviors. Yet, compositional reinforcement learning is beset with difficulties, including the high dimensionality of the problem space, scarcity of rewards, and absence of system robustness after task composition. To surmount these challenges, we view task composition through the prism of category theory -- a mathematical discipline exploring structures and their compositional relationships. The categorical properties of Markov decision processes untangle complex tasks into manageable sub-tasks, allowing for strategical reduction of dimensionality, facilitating more tractable reward structures, and bolstering system robustness. Experimental results support the categorical theory of reinforcement learning by enabling skill reduction, reuse, and recycling when learning complex robotic arm tasks.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge