Recursive Nested Filtering for Efficient Amortized Bayesian Experimental Design

Paper and Code

Sep 09, 2024

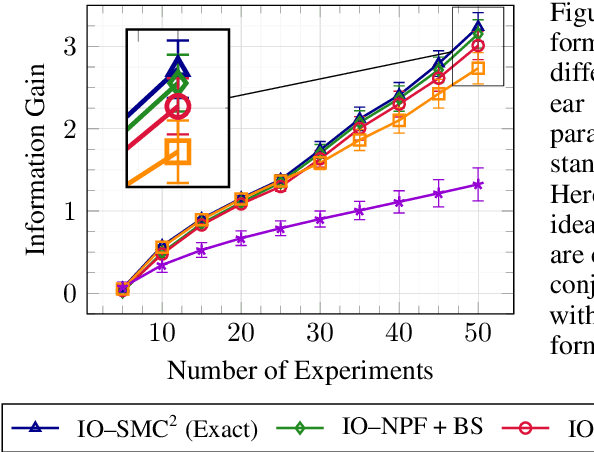

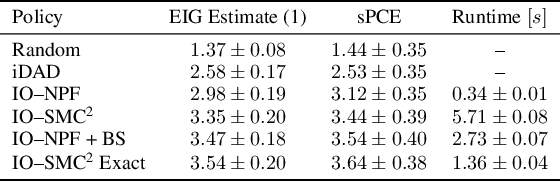

This paper introduces the Inside-Out Nested Particle Filter (IO-NPF), a novel, fully recursive, algorithm for amortized sequential Bayesian experimental design in the non-exchangeable setting. We frame policy optimization as maximum likelihood estimation in a non-Markovian state-space model, achieving (at most) $\mathcal{O}(T^2)$ computational complexity in the number of experiments. We provide theoretical convergence guarantees and introduce a backward sampling algorithm to reduce trajectory degeneracy. IO-NPF offers a practical, extensible, and provably consistent approach to sequential Bayesian experimental design, demonstrating improved efficiency over existing methods.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge