Proximal Alternating Direction Network: A Globally Converged Deep Unrolling Framework

Dec 15, 2017

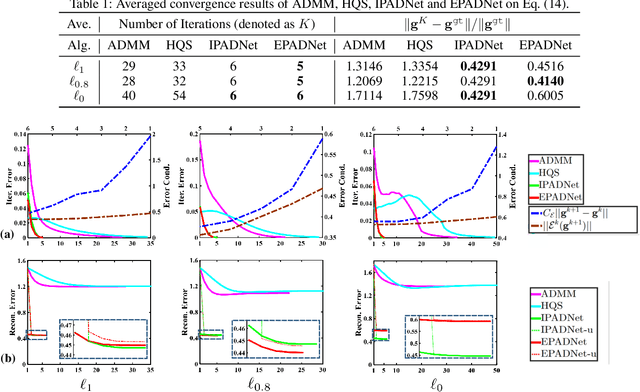

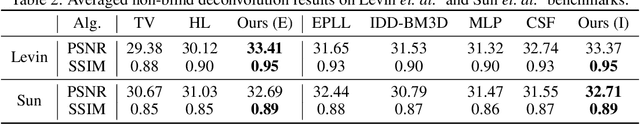

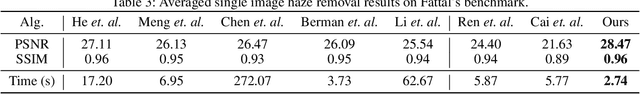

Deep learning models have achieved great success in many real-world applications. However, most existing networks are designed heuristically and thus lack rigorous mathematical principles and derivations. Several recent studies build deep structures by unrolling a particular optimization model that involves task information. Unfortunately, because the network parameters are dynamic, the resulting deep propagation networks do *not* possess the convergence properties of the original optimization scheme. This paper provides a novel proximal unrolling framework that establishes deep models by integrating experimentally verified network architectures with rich cues of the tasks. More importantly, we *prove in theory* that 1) the propagation generated by our unrolled deep model globally converges to a critical point of a given variational energy, and 2) the proposed framework can still learn priors from training data to generate a convergent propagation even when task information is only partially available. Indeed, these theoretical results are the best we can ask for unless stronger assumptions are enforced. Extensive experiments on various real-world applications verify the theoretical convergence and demonstrate the effectiveness of the designed deep models.
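To make the idea of "unrolling an optimization model" concrete, here is a minimal sketch under stated assumptions. It is not the framework proposed in the paper. It unrolls a classical proximal gradient iteration for an assumed L1-regularized least-squares energy, treating each iteration as one "layer" with fixed (not learned) parameters. The energy, the function names, and the toy data are all illustrative assumptions. With a fixed step size no larger than the inverse Lipschitz constant of the gradient, the energy is non-increasing across layers, which is the kind of guarantee that can be lost once per-layer parameters are learned freely.

```python
def soft_threshold(v, tau):
    """Proximal operator of tau * |.| applied to a scalar."""
    if v > tau:
        return v - tau
    if v < -tau:
        return v + tau
    return 0.0


def energy(A, b, x, lam):
    """Assumed energy: 0.5 * ||A x - b||^2 + lam * ||x||_1."""
    residual = [sum(a * xj for a, xj in zip(row, x)) - bi
                for row, bi in zip(A, b)]
    return 0.5 * sum(r * r for r in residual) + lam * sum(abs(xj) for xj in x)


def unrolled_proximal_gradient(A, b, lam, num_layers=30):
    """Run num_layers proximal gradient steps, one per 'layer'."""
    n = len(A[0])
    # The squared Frobenius norm upper-bounds the Lipschitz constant of the
    # gradient of the smooth term, so this step size is safe (if conservative).
    lipschitz_bound = sum(a * a for row in A for a in row)
    step = 1.0 / lipschitz_bound

    x = [0.0] * n
    energies = [energy(A, b, x, lam)]
    for _ in range(num_layers):
        residual = [sum(a * xj for a, xj in zip(row, x)) - bi
                    for row, bi in zip(A, b)]
        # Gradient of the smooth term: A^T (A x - b).
        grad = [sum(A[i][j] * residual[i] for i in range(len(A)))
                for j in range(n)]
        # Gradient step followed by the proximal (soft-thresholding) step.
        x = [soft_threshold(xj - step * gj, step * lam)
             for xj, gj in zip(x, grad)]
        energies.append(energy(A, b, x, lam))
    return x, energies


# Toy problem (illustrative values only).
A = [[1.0, 0.5, 0.0], [0.0, 1.0, 0.5]]
b = [1.0, 2.0]
x_hat, energies = unrolled_proximal_gradient(A, b, lam=0.1)
```

In a learned unrolled network, quantities such as `step` or the thresholding operator would typically be replaced by trainable components in each layer. The recorded `energies` list offers a simple way to check empirically whether the energy still decreases after such a substitution.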
