Probabilistic Semantic Mapping for Urban Autonomous Driving Applications


Jun 08, 2020

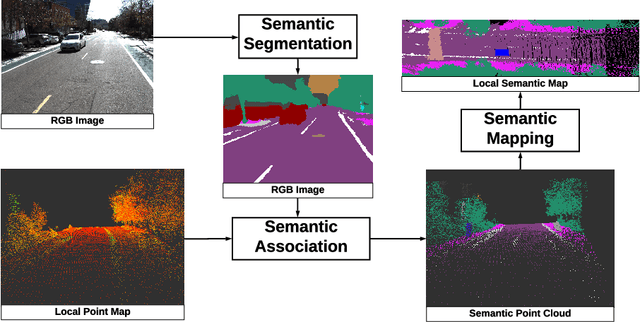

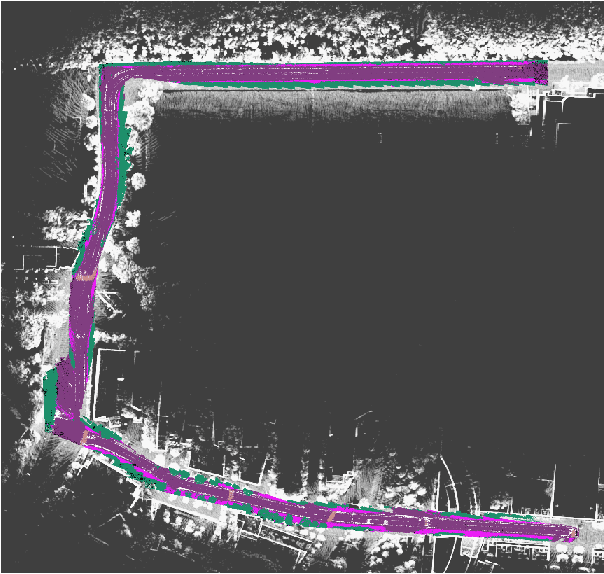

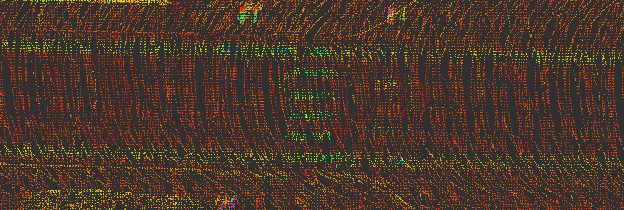

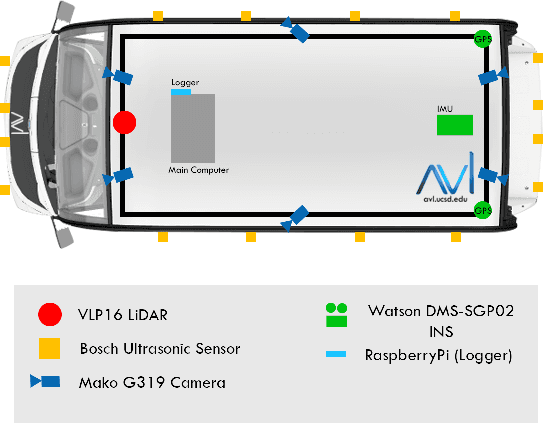

Recent advances in statistical learning and computational ability have enabled autonomous vehicle technology to develop at a much faster rate and become widely adopted. While many previously introduced architectures can operate in highly dynamic environments, many of them are constrained to smaller-scale deployments and require constant maintenance because of the scalability cost associated with high-definition (HD) maps. HD maps provide critical information for self-driving cars to drive safely. However, traditional approaches for creating HD maps involve tedious manual labeling. To tackle this problem, we fuse 2D image semantic segmentation with pre-built point cloud maps collected from a relatively inexpensive 16-channel LiDAR sensor to construct a local probabilistic semantic map in bird's-eye view that encodes static landmarks in the driving environment, such as roads, sidewalks, crosswalks, and lanes. Experiments on data collected in an urban environment show that this model can be extended to automatically incorporate road features into HD maps, and they suggest directions for future work.
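The fusion idea described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes the map points are already expressed in the camera frame (x right, y down, z forward), a simple pinhole camera with intrinsics `fx`, `fy`, `cx`, `cy`, and a constant per-observation accuracy `p_correct` for the segmentation network. All function and parameter names here are hypothetical.

```python
import math
from collections import defaultdict

# Class list taken from the landmarks named in the abstract.
CLASSES = ["road", "sidewalk", "crosswalk", "lane"]


def project_point(point, fx, fy, cx, cy):
    """Project a camera-frame 3D point to integer pixel coordinates (u, v).

    Returns None for points at or behind the image plane.
    """
    x, y, z = point
    if z <= 0:
        return None
    u = math.floor(fx * x / z + cx)
    v = math.floor(fy * y / z + cy)
    return u, v


def update_cell(prior, observed, p_correct=0.8):
    """Bayes update of one cell's class distribution from one observed label.

    Assumption: the label is right with probability p_correct, and errors
    are spread uniformly over the remaining classes.
    """
    n = len(prior)
    p_wrong = (1.0 - p_correct) / (n - 1)
    posterior = [
        p * (p_correct if i == observed else p_wrong)
        for i, p in enumerate(prior)
    ]
    total = sum(posterior)
    return [p / total for p in posterior]


def build_semantic_bev(points, label_image, fx, fy, cx, cy, cell_size=0.5):
    """Fuse per-pixel labels with 3D points into a bird's-eye-view grid.

    label_image is a 2D list of class indices (rows indexed by v, columns
    by u). Returns a dict mapping grid cells (ix, iz) to class probabilities.
    """
    height, width = len(label_image), len(label_image[0])
    n = len(CLASSES)
    grid = defaultdict(lambda: [1.0 / n] * n)  # uniform prior per cell
    for point in points:
        pixel = project_point(point, fx, fy, cx, cy)
        if pixel is None:
            continue
        u, v = pixel
        if not (0 <= u < width and 0 <= v < height):
            continue  # point falls outside the camera's field of view
        label = label_image[v][u]
        # The ground plane is spanned by x (lateral) and z (forward).
        cell = (math.floor(point[0] / cell_size),
                math.floor(point[2] / cell_size))
        grid[cell] = update_cell(grid[cell], label)
    return dict(grid)
```

Each grid cell keeps a full distribution over classes rather than a single label, so repeated consistent observations raise confidence while isolated segmentation errors are damped. A real system would likely replace the constant `p_correct` with a measured confusion matrix and would transform the points from the map frame into the camera frame first.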
