Private Counterfactual Retrieval

Paper and Code

Oct 17, 2024

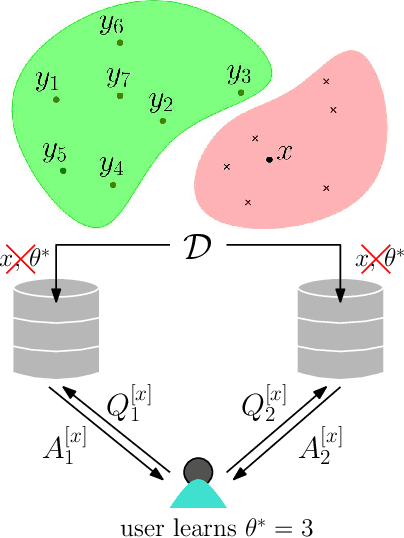

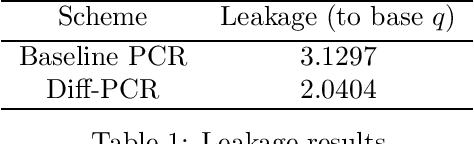

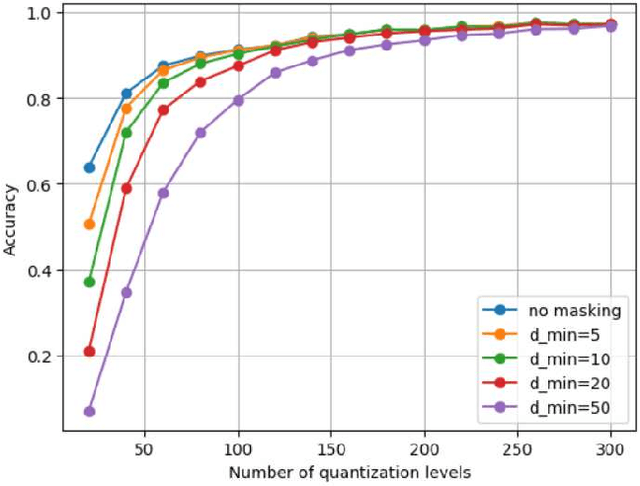

Transparency and explainability are two extremely important aspects to be considered when employing black-box machine learning models in high-stake applications. Providing counterfactual explanations is one way of catering this requirement. However, this also poses a threat to the privacy of both the institution that is providing the explanation as well as the user who is requesting it. In this work, we propose multiple schemes inspired by private information retrieval (PIR) techniques which ensure the \emph{user's privacy} when retrieving counterfactual explanations. We present a scheme which retrieves the \emph{exact} nearest neighbor counterfactual explanation from a database of accepted points while achieving perfect (information-theoretic) privacy for the user. While the scheme achieves perfect privacy for the user, some leakage on the database is inevitable which we quantify using a mutual information based metric. Furthermore, we propose strategies to reduce this leakage to achieve an advanced degree of database privacy. We extend these schemes to incorporate user's preference on transforming their attributes, so that a more actionable explanation can be received. Since our schemes rely on finite field arithmetic, we empirically validate our schemes on real datasets to understand the trade-off between the accuracy and the finite field sizes.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge