Privacy-Preserving Low-Rank Adaptation for Latent Diffusion Models

Paper and Code

Feb 19, 2024

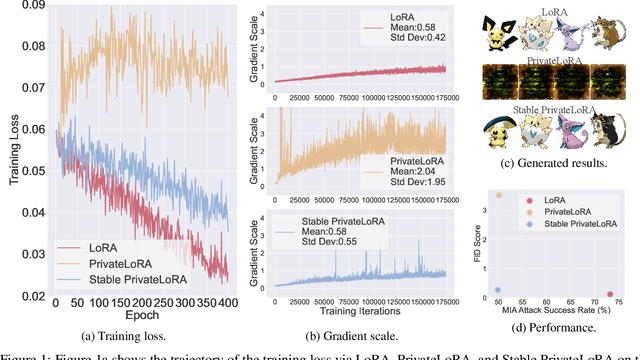

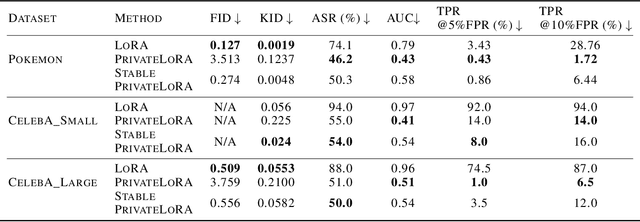

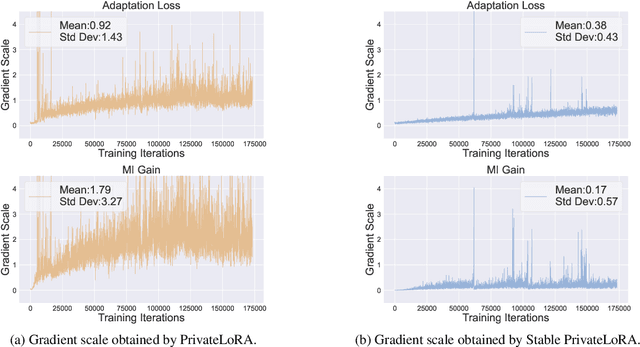

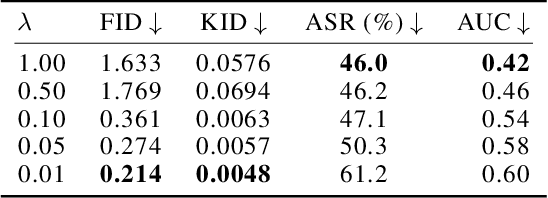

Low-rank adaptation (LoRA) is an efficient strategy for adapting latent diffusion models (LDMs) on a training dataset to generate specific objects by minimizing the adaptation loss. However, adapted LDMs via LoRA are vulnerable to membership inference (MI) attacks that can judge whether a particular data point belongs to private training datasets, thus facing severe risks of privacy leakage. To defend against MI attacks, we make the first effort to propose a straightforward solution: privacy-preserving LoRA (PrivateLoRA). PrivateLoRA is formulated as a min-max optimization problem where a proxy attack model is trained by maximizing its MI gain while the LDM is adapted by minimizing the sum of the adaptation loss and the proxy attack model's MI gain. However, we empirically disclose that PrivateLoRA has the issue of unstable optimization due to the large fluctuation of the gradient scale which impedes adaptation. To mitigate this issue, we propose Stable PrivateLoRA that adapts the LDM by minimizing the ratio of the adaptation loss to the MI gain, which implicitly rescales the gradient and thus stabilizes the optimization. Our comprehensive empirical results corroborate that adapted LDMs via Stable PrivateLoRA can effectively defend against MI attacks while generating high-quality images. Our code is available at https://github.com/WilliamLUO0/StablePrivateLoRA.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge