Prior-guided Bayesian Optimization


Jun 25, 2020

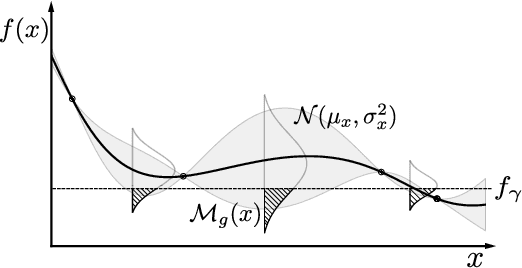

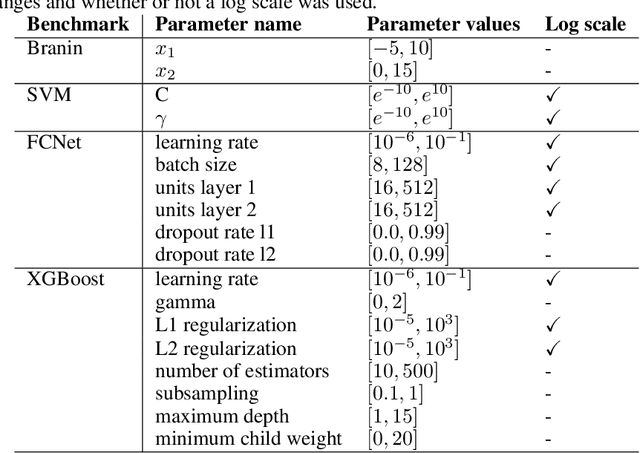

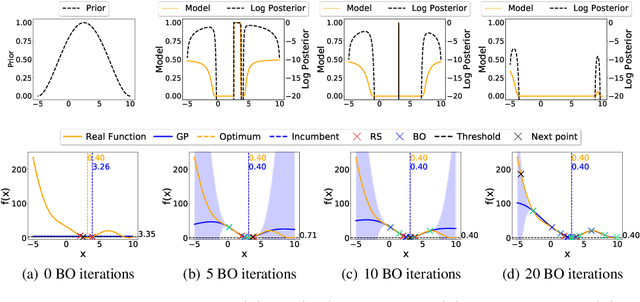

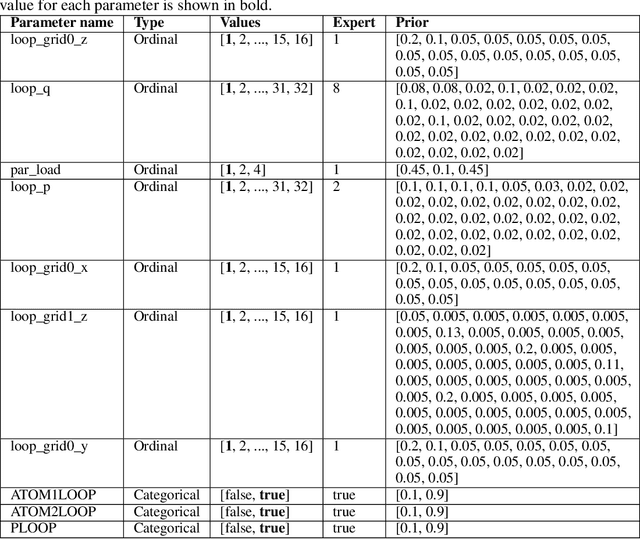

While Bayesian Optimization (BO) is a very popular method for optimizing expensive black-box functions, it fails to leverage the experience of domain experts. As a result, BO wastes function evaluations on regions of the design space (e.g., hyperparameter settings of a machine learning algorithm) that are commonly known to be bad. To address this issue, we introduce Prior-guided Bayesian Optimization (PrBO). PrBO allows users to inject their knowledge into the optimization process in the form of priors about which parts of the input space will yield the best performance, rather than through BO's standard priors over functions, which are much less intuitive for users. PrBO then combines these priors with BO's standard probabilistic model to yield a posterior. We show that PrBO is more sample efficient than state-of-the-art methods without user priors and 10,000$\times$ faster than random search on a common suite of benchmarks and a real-world hardware design application. We also show that PrBO converges faster even if the user priors are not entirely accurate and that it robustly recovers from misleading priors.
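To make the idea of combining a user prior with a model concrete, the following is a minimal sketch and not the PrBO algorithm itself: the abstract does not specify the combination rule, so the sketch assumes a plain product of the two terms followed by normalization over a discrete candidate grid. All names (`gaussian_pdf`, `combine_prior_with_model`, `user_prior`, `model_prob_good`) and all numeric values are hypothetical and chosen only for illustration.

```python
import math


def gaussian_pdf(x, mean, std):
    """Density of a normal distribution, used here as a stand-in user prior."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


def combine_prior_with_model(candidates, prior_pdf, model_prob_good):
    """Multiply prior and model scores pointwise, then normalize to sum to 1.

    ASSUMPTION: a simple product rule; the paper's actual rule may differ.
    """
    weights = [prior_pdf(x) * model_prob_good(x) for x in candidates]
    total = sum(weights)
    if total <= 0.0:
        raise ValueError("prior and model assign zero mass to every candidate")
    return [w / total for w in weights]


# Hypothetical 1-D search space: 11 evenly spaced candidates in [0, 1].
candidates = [i / 10.0 for i in range(11)]


def user_prior(x):
    # ASSUMPTION: the expert believes the optimum lies near x = 0.3.
    return gaussian_pdf(x, mean=0.3, std=0.1)


def model_prob_good(x):
    # ASSUMPTION: a toy stand-in for the probabilistic model's belief that x
    # is a good configuration; here the model favors larger x.
    return 1.0 / (1.0 + math.exp(-10.0 * (x - 0.5)))


posterior = combine_prior_with_model(candidates, user_prior, model_prob_good)
best_x = candidates[posterior.index(max(posterior))]
print("next candidate to evaluate:", best_x)
```

In this toy setup the prior alone peaks at 0.3 and the model alone prefers the largest candidate, so the combined score selects a point between the two. That is the qualitative behavior the abstract describes: the prior steers the search, while evidence from the model can pull it away from a prior that is not entirely accurate.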
