Precise Repositioning of Robotic Ultrasound: Improving Registration-based Motion Compensation using Ultrasound Confidence Optimization

Aug 10, 2022

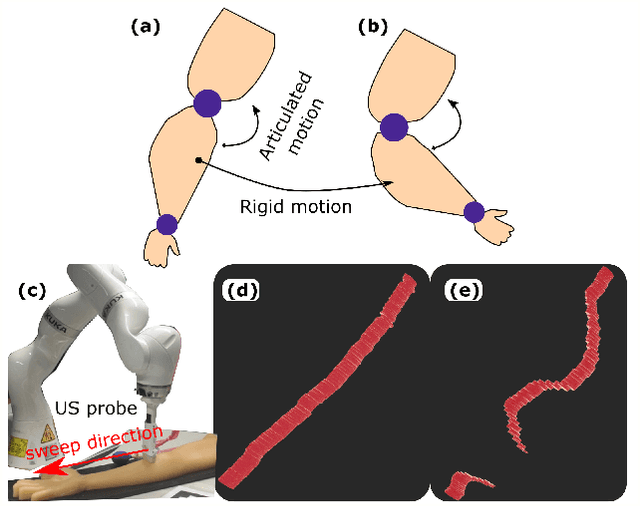

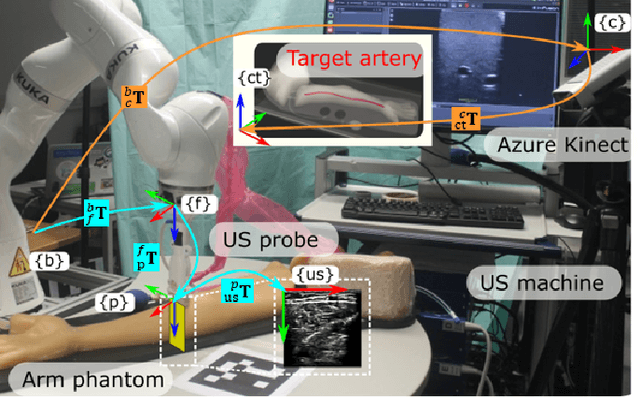

Robotic ultrasound (US) imaging is seen as a promising way to overcome limitations of free-hand US examinations such as inter-operator variability. However, robotic US systems cannot react to subject movements during scans, which limits their clinical acceptance. Human sonographers, by contrast, often react to patient movements by repositioning the probe or even restarting the acquisition, particularly when scanning long anatomical structures such as limb arteries. To replicate this behavior, we propose a vision-based system that monitors the subject's movement and automatically updates the scan trajectory, so that a complete 3D image of the target anatomy is obtained seamlessly. The motion monitoring module uses object masks segmented from RGB images. Once the subject moves, the robot stops and recomputes a suitable trajectory by registering the surface point clouds of the object acquired before and after the movement with the iterative closest point (ICP) algorithm. Afterward, to ensure good contact conditions after the US probe is repositioned, a confidence-based fine-tuning process is used to avoid potential gaps between the probe and the contact surface. Finally, the whole system is validated on a human-like arm phantom with an uneven surface, and the object segmentation network is additionally validated on volunteers. The results demonstrate that the presented system can react to object movements and reliably provide accurate 3D images.
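The registration step described above can be illustrated with a minimal point-to-point ICP sketch. This is not the authors' implementation: the function names `best_rigid_transform` and `icp`, the brute-force nearest-neighbour search, and the iteration and tolerance parameters are all assumptions chosen for illustration, and a real system would likely use a dedicated point cloud library.

```python
import numpy as np


def best_rigid_transform(src, dst):
    """Least-squares R, t such that R @ src[i] + t ~ dst[i] (Kabsch method).

    src and dst are (N, 3) arrays with known row-wise correspondences.
    """
    src_c = src.mean(axis=0)
    dst_c = dst.mean(axis=0)
    # 3x3 cross-covariance of the centred point sets
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so the result is a rotation, not a reflection
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t


def icp(src, dst, iterations=30, tol=1e-10):
    """Illustrative point-to-point ICP aligning src (before) to dst (after).

    Returns the accumulated rotation and translation mapping src onto dst.
    """
    R_total = np.eye(3)
    t_total = np.zeros(3)
    moved = src.copy()
    prev_err = np.inf
    for _ in range(iterations):
        # Brute-force nearest neighbour in dst for every current point
        d2 = ((moved[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
        nearest = dst[d2.argmin(axis=1)]
        err = np.sqrt(d2.min(axis=1)).mean()
        if abs(prev_err - err) < tol:
            break
        prev_err = err
        # Best rigid motion for the current correspondences, then accumulate it
        R, t = best_rigid_transform(moved, nearest)
        moved = moved @ R.T + t
        R_total = R @ R_total
        t_total = R @ t_total + t
    return R_total, t_total
```

Under these assumptions, the estimated transform could then be applied to the planned scan waypoints, for example `new_waypoints = waypoints @ R.T + t`, where `waypoints` is a hypothetical (M, 3) array of probe positions expressed in the same frame as the point clouds.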
