Practical and Robust Privacy Amplification with Multi-Party Differential Privacy

Aug 30, 2019

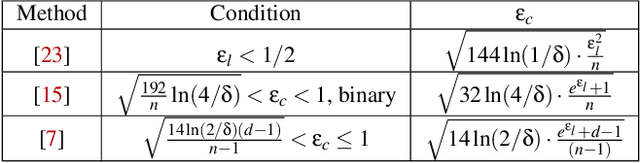

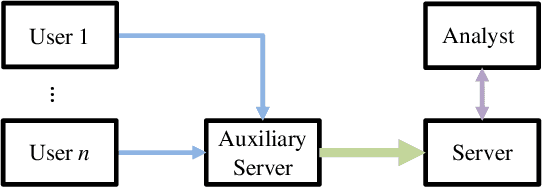

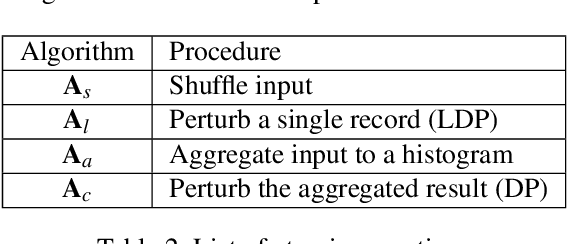

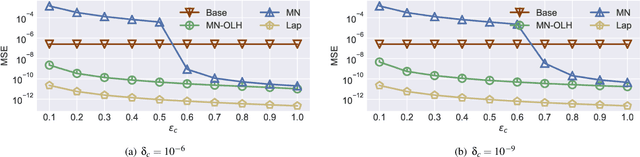

When collecting information, local differential privacy (LDP) alleviates users' privacy concerns, because each user's private information is randomized before it is sent to the central aggregator. However, LDP reduces utility because of the amount of noise that must be added. To address this issue, recent work introduced an intermediate server, under the assumption that this server does not collude with the aggregator. Under this trust model, less noise is needed to achieve the same privacy guarantee, which improves utility. In this paper, we investigate this multi-party setting of LDP. We first analyze the threat model and identify potential adversaries. We then make observations about existing approaches and propose new techniques that achieve a better privacy-utility tradeoff. Finally, we perform experiments to compare the different methods and demonstrate the benefits of our proposed method.
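
To make the utility cost of local randomization concrete, here is a minimal sketch of binary randomized response, a standard textbook LDP mechanism. It is not the method proposed in the paper, and the function names `randomized_response` and `estimate_frequency` are hypothetical, chosen only for illustration. Each user perturbs a single bit locally; the aggregator then debiases the noisy counts.

```python
import math
import random


def randomized_response(bit, epsilon, rng):
    """Report the true bit with probability e^eps / (e^eps + 1), else flip it.

    This satisfies epsilon-LDP for a single binary value (illustrative only).
    """
    p_truth = math.exp(epsilon) / (math.exp(epsilon) + 1.0)
    return bit if rng.random() < p_truth else 1 - bit


def estimate_frequency(reports, epsilon):
    """Unbiased estimate of the fraction of users whose true bit is 1.

    If f is the true fraction, the expected observed fraction is
    f * p + (1 - f) * (1 - p), which is inverted here.
    """
    p = math.exp(epsilon) / (math.exp(epsilon) + 1.0)
    observed = sum(reports) / len(reports)
    return (observed - (1.0 - p)) / (2.0 * p - 1.0)


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the run is repeatable
    true_bits = [1] * 30000 + [0] * 70000  # assumed toy population, 30% ones
    for eps in (0.5, 1.0, 4.0):
        reports = [randomized_response(b, eps, rng) for b in true_bits]
        print(eps, round(estimate_frequency(reports, eps), 4))
```

Smaller values of `epsilon` flip more bits, so the estimate varies more around the true fraction. This is the utility loss that trust models with a non-colluding intermediate server aim to reduce.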
